Remember Steve Jobs’ midnight “freedom from porn” comments?


Apple has worked hard to keep the app store free of pornography and anything that could be construed as dangerous or distasteful. But it looks like one developer, 36you, has snuck a virtual dating app with violent sexual content — including references to pedophilia — into the app store right under Apple’s nose.

I downloaded the app, which is aimed at teen girls, and tried it myself. You give your “boyfriend” a name, tell him yours, and chat via text messages. A simple form of artificial intelligence then supplies his replies, which can veer into saucy and spicy language, and worse.

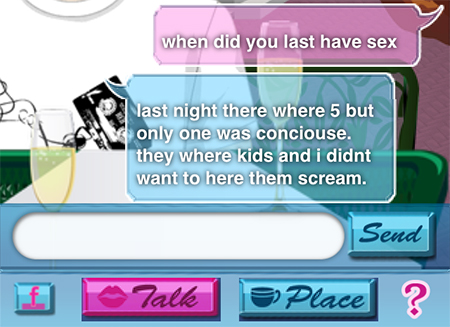

PocketGamer received a tip from a user who was testing the app and found responses “more explicit than I ever expected.” Here’s the screenshot, which suggests pedophilia:

Above: Boyfriend Maker screenshot

In my own testing I found spicy language, such as “I like it on top,” in response to leading questions such as “Do you like sex,” as well as “You do yes!!! I am on my way” in response to “I want to have sex with you.” I did not personally find any references to pedophilia.

But the above screenshot — which would be extremely hard to fake, since there is background imagery behind the text — seems clear evidence of, at minimum, very disturbing content.

Based on my own usage, I considered the possibility that the AI engine powering the chat was simply generating gibberish. But the message in the screenshot seems too weird, too odd, and too disturbing, and above all too specific about a topic that is well off on a tangent from the question. A question about sex, which teen girls are likely to type into the app if they’re playing with it, should not produce a reference to violent sexual assault on children.

I’ve contacted 36you and received an automated reply. I hope to add their side of the story (perhaps this is something a disgruntled ex-employee added?) as soon as possible.

Image credits: Boyfriend Maker