Microsoft today said that starting in early 2017, its Word and PowerPoint applications will be able to automatically generate descriptions of photos that users add to documents. Office 365 subscribers will see this first in Word and PowerPoint for Windows PCs.

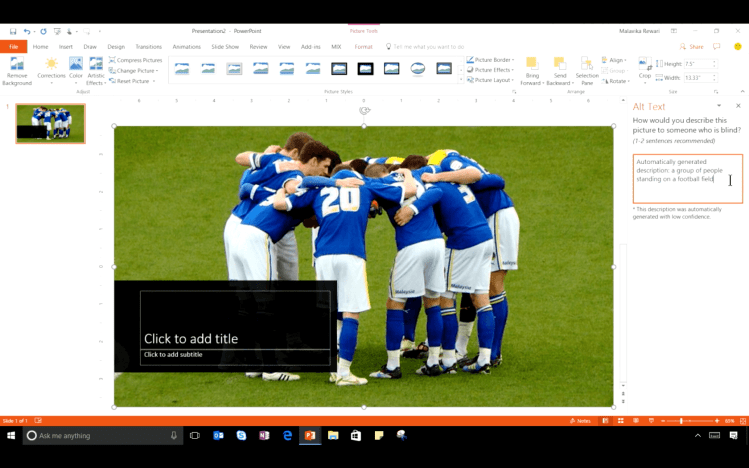

Ordinarily, if you drop a photo into PowerPoint, you can type out an “Alt Text” title and description for the photo. But not everyone does that when they’re making slide decks. Then, when a blind person opens the slide deck, they aren’t able to understand what’s going on in the picture, which could make the slide or the entire deck more difficult to fully grasp.

Microsoft wants to change that. So it has chosen to automate the process of making Alt Text for photos, drawing on its Cognitive Services Computer Vision application programming interface (API). “Through machine learning, this service will keep improving as more people use it, saving you significant time to make media-rich presentations accessible,” John Jendrezak, accessibility lead and partner director of program management for Microsoft’s Office Engineering team, wrote in a blog post. To use this feature, you’ll simply have to right-click on a photo and select “Automatic Alt Text.”

The technology uses a type of artificial intelligence (AI) called deep learning to recognize objects in photos and then figure out the best words to explain the photo in its entirety. From there, operating systems’ screen readers can read the captions aloud.
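Microsoft did not say how Office turns the service's output into Alt Text. The sketch below shows what one such client could look like. It assumes the publicly documented Computer Vision "Describe Image" REST operation at its v3.2 path, which returns a list of candidate captions with confidence scores. The endpoint, key, and image URL are hypothetical placeholders. The rule of picking the highest-confidence caption and dropping weak ones is also an illustrative assumption, not Office's actual logic.

```python
import json
import urllib.request
from typing import Optional


def build_describe_request(endpoint: str, key: str, image_url: str,
                           max_candidates: int = 3) -> urllib.request.Request:
    """Build (but do not send) a Describe Image request for a public image URL.

    Assumes the v3.2 REST path; other API versions use different paths.
    """
    url = (f"{endpoint.rstrip('/')}/vision/v3.2/describe"
           f"?maxCandidates={max_candidates}&language=en")
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Ocp-Apim-Subscription-Key": key,
                 "Content-Type": "application/json"},
    )


def alt_text_from_describe(response_json: str,
                           min_confidence: float = 0.5) -> Optional[str]:
    """Turn a Describe Image JSON response into a one-sentence alt text.

    Picks the highest-confidence caption; returns None when no caption
    clears the (hypothetical) confidence threshold.
    """
    data = json.loads(response_json)
    captions = data.get("description", {}).get("captions", [])
    if not captions:
        return None
    best = max(captions, key=lambda c: c.get("confidence", 0.0))
    if best.get("confidence", 0.0) < min_confidence:
        return None
    text = best.get("text", "").strip()
    if not text:
        return None
    # Capitalize and end with a period so a screen reader reads a full sentence.
    sentence = text[0].upper() + text[1:]
    return sentence if sentence.endswith(".") else sentence + "."
```

To actually call the service, you would pass the request to `urllib.request.urlopen` and feed the decoded response body to `alt_text_from_describe`. Returning `None` for low-confidence results leaves room for the user to write the description by hand rather than attaching a misleading one.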


Deep learning generally involves training artificial neural networks on lots of data, such as photos, and then getting the neural networks to make inferences about new data. This is a method that has caught on at Apple, Facebook, Google, and Twitter, as well as at Microsoft.

In fact, earlier this year Facebook did something similar to what Microsoft is doing. It started automatically generating captions for photos that people share, so that when blind people scroll through the News Feed on iOS, the VoiceOver screen reader built into iOS can read those captions aloud. As a result, blind users can get a sense of what a photo shows, not just the text that people post alongside it and the comments others leave.

Twitter recently made it possible for users to manually write captions for images they post. Although Twitter does conduct deep learning research, it hasn't offered automatically generated captions for images.

Also in today’s blog post, Jendrezak noted that Microsoft will be merging the separate fields for title and description. That way, he wrote, “you have no confusion about where to enter alt-text.” Currently, to add that information to an image, you have to open the Format pane, choose the Size & Properties tab in PowerPoint (Layout & Properties in Word), and select the Alt Text dropdown.