Google today is providing more information about its tensor processing unit (TPU), the server chip that it uses in house to perform artificial intelligence (AI) computing workloads more efficiently. Google has been using TPUs internally since 2015. The company disclosed the existence of the TPU last year but hadn’t said anything more about the technology until now.

In addition to publishing a paper on the TPU, Google’s David Patterson is giving a talk about it today at a National Academy of Engineering event in Mountain View, California. Patterson is one of the paper’s whopping 75 co-authors.

Google relies on TPUs for the second of two phases in operating artificial neural networks. First, Google trains the neural networks on lots of data, typically on servers that are accelerated with graphics processing units (GPUs). Then it uses TPUs to make inferences about new data more efficiently. Google says the TPU’s inference performance compares very well with that of GPUs or basic x86 chips.
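To make the two phases concrete, here is a minimal sketch in plain Python. It is a toy linear model, not Google's code, and the function names `train` and `predict` are made up for illustration. Training loops over the data many times to fit the weights; inference is a single cheap forward pass with the weights held fixed, which is the phase the article says runs on TPUs.

```python
# Toy illustration of the two phases: training fits the weights,
# inference applies the already-fitted weights to new data.
# All names here are hypothetical; nothing below is TPU-specific.

def predict(w, b, x):
    """Inference: one forward pass with fixed weights."""
    return w * x + b

def train(samples, lr=0.05, epochs=2000):
    """Training: repeated gradient-descent passes over the data."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            err = predict(w, b, x) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Phase 1: train on known examples (generated from y = 2x + 1).
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(samples)

# Phase 2: infer on an input the model has not seen.
prediction = predict(w, b, 10.0)
print(round(prediction, 3))
```

The asymmetry is the point: training does thousands of passes once, while inference does one pass per request, many times over in production.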

“Despite low utilization for some applications, the TPU is on average about 15X-30X faster than its contemporary GPU or CPU,” the authors write in the paper. Those contemporary systems are specifically Nvidia’s Tesla K80 GPU and Intel’s Haswell-generation Xeon E5-2699 v3 chips, the latter of which can be the underlying platform for a TPU.

Over the past five years, GPUs from companies like AMD and Nvidia have become the default infrastructure for economical deep learning, and Google has contributed to that. But Google, Microsoft, and other companies have also explored other types of chips, including field-programmable gate arrays (FPGAs), for various AI workloads. Google’s project is notable for its origins, as well as its use in production. The system is already powering Google Image Search, Google Photos, and the Google Cloud Vision application programming interface (API), among other things, Google distinguished hardware engineer Norm Jouppi wrote in a blog post.

One TPU has 3.5 times more memory on chip than a K80, while also being smaller, the authors write. And performance per watt is 30-80 times better. Currently Google can pack two TPUs into a single server, but that’s not to say that it won’t build other hardware systems that lead to better performance or economics.

Google has embraced artificial neural networks more strongly than many other technology companies, and in 2013 the company concluded that they were so popular that their use could double the computational demands of its data centers. Doing it all on standard chips would have been unbearably expensive. And so Google began working on its own technology with an eye toward getting cost-performance that was 10 times better than GPUs.

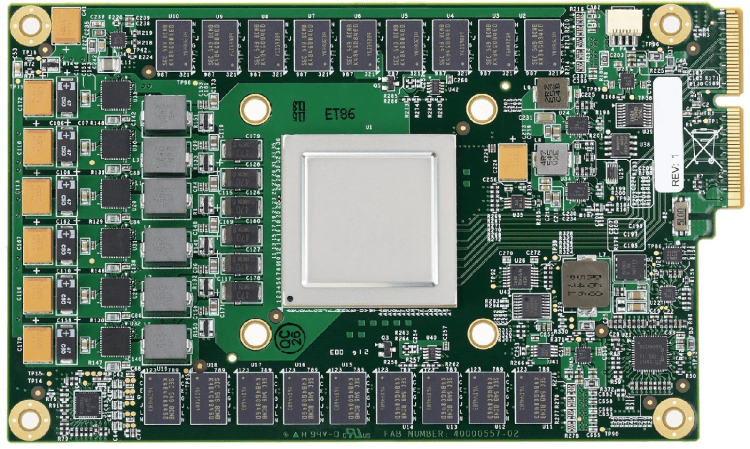

“Rather than be tightly integrated with a CPU, to reduce the chances of delaying deployment, the TPU was designed to be a coprocessor on the PCIe I/O bus, allowing it to plug into existing servers just as a GPU does,” the authors write. “Moreover, to simplify hardware design and debugging, the host server sends TPU instructions for it to execute rather than fetching them itself. Hence, the TPU is closer in spirit to an FPU (floating-point unit) coprocessor than it is to a GPU. The goal was to run whole inference models in the TPU to reduce interactions with the host CPU and to be flexible enough to match the NN needs of 2015 and beyond, instead of just what was required for 2013 NNs.”
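The host-driven design the authors describe can be sketched as a toy model in Python. This is only an illustration of the idea that the host pushes instructions and the coprocessor executes them without fetching anything itself. The class name and the instruction names (`LOAD_WEIGHTS`, `MATMUL`, `RELU`) are invented for this example and are not the TPU's actual instruction set.

```python
from collections import deque

class ToyCoprocessor:
    """Hypothetical device that only executes what the host sends it."""

    def __init__(self):
        self.queue = deque()   # instructions pushed by the host
        self.weights = None
        self.result = None

    def receive(self, op, operand=None):
        # The host calls this; the device never fetches instructions itself.
        self.queue.append((op, operand))

    def run(self):
        # Drain the queue in order, executing each instruction.
        while self.queue:
            op, operand = self.queue.popleft()
            if op == "LOAD_WEIGHTS":
                self.weights = operand
            elif op == "MATMUL":
                # Matrix-vector product: one output value per weight row.
                self.result = [
                    sum(w * x for w, x in zip(row, operand))
                    for row in self.weights
                ]
            elif op == "RELU":
                self.result = [max(0.0, v) for v in self.result]
            else:
                raise ValueError(f"unknown instruction: {op}")
        return self.result

def host_run_inference(device, weights, inputs):
    """Host side: send a whole (tiny) model, then read back one result."""
    device.receive("LOAD_WEIGHTS", weights)
    device.receive("MATMUL", inputs)
    device.receive("RELU")
    return device.run()

output = host_run_inference(
    ToyCoprocessor(), [[1.0, -2.0], [0.5, 0.5]], [1.0, 3.0]
)
print(output)
```

Sending the whole model in one batch mirrors the stated goal of running entire inference models on the device, so the host is involved only at the start and the end.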

Typically, running code on the TPU is as simple as using the Google-led TensorFlow open source deep learning framework, the authors write.

For much more detail on the TPU, read the full paper here.