
During a keynote speech on the second day of F8, Facebook's annual developer conference, CTO Mike Schroepfer said bots need to answer more questions that are pertinent to people's lives and the things they do every day.

“How do I connect with my community? Which friends should I reach out to? How do I find a club? How do I go volunteer? What should I do in my career? These are the human questions that matter, and bots are pretty far away from being able to do that, and that’s why we’re so excited about investing in not just computer vision but all of the fields of AI that come together to build intelligent systems,” he said.

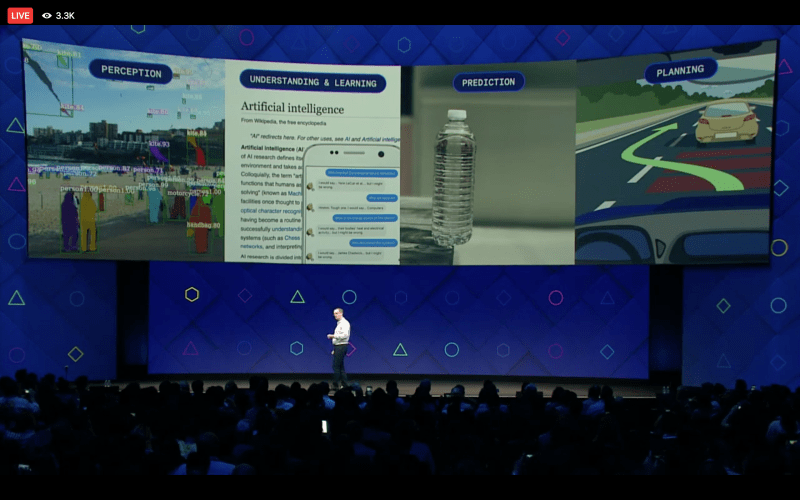

Schroepfer spoke ahead of a series of augmented reality and AI initiatives announced on day two of F8, ranging from the use of helicopters and fiber optic cables to spread internet access to technology that could let people think and feel words. Much of the 90-minute keynote centered on AI technology like computer vision, but Schroepfer said Facebook is developing AI to answer these kinds of life questions, as well as a bot named Dr. Wiki that answers questions based on Wikipedia knowledge.

“We’re now training systems that can ingest all of the English articles within Wikipedia, five million articles, and answer any question that may be answerable from Wikipedia. This is not referencing any other sources. This is not humans hand coding and putting that data into a structured form. It’s taking unstructured text and then answering any questions you may be able to get from that text, kind of like a reading comprehension test you might get in school,” he said.


Bots on Messenger and Facebook's intelligent assistant M have been criticized for their inability to answer questions in chat.

Above: Facebook CTO Mike Schroepfer describes initiatives the company is taking in artificial intelligence beyond computer vision.

On Tuesday, Facebook launched Messenger Platform 2.0, which includes a series of features designed to make bots and local businesses easier to find in Messenger. Chat extensions will bring bots into group conversations, but bots will not be able to use natural language understanding within groups. In March, Messenger gave developers the ability to remove the text input field from their bots.

While onstage, Schroepfer also discussed how Facebook uses NLU to automatically caption images for the visually impaired, and he spent the better part of a minute asking the audience whether a pepperoni pizza is vegetarian.

"Computers are confounded by these [kinds of] questions because they don't understand the world around us. They have to learn and put this information together to answer questions. This is why, with all the promise of AI, so many of the bots and assistants we have seen [seem] to not be able to answer most of the questions we ask them. And so we need to go beyond computer vision. We need to teach computers to understand the world," he said.

Schroepfer also took time to discuss work being done by Facebook and AI researchers to understand and answer questions. He began by referencing Memory Networks, an approach to training systems to answer such questions that dates to 2015.

“Solving these problems is critical to making our bots and assistants helpful in the tasks that are really important. I ask you right now or any of you at home, ask your bot of choice any one of these questions and tell me if you get satisfactory answers. Simple questions [like] ‘Who should I go see Fast and the Furious 8 with?’ Can’t tell you. But these are the sort of questions that are important,” he said.