Watch all the Transform 2020 sessions on-demand here.

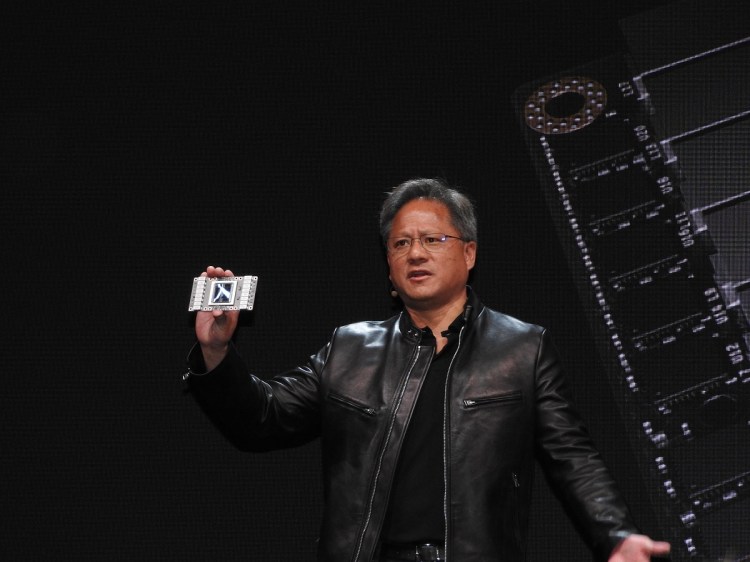

Nvidia CEO Jen-Hsun Huang unveiled an ambitious new processor for artificial intelligence applications, the Tesla V100.

The new chip has 21 billion transistors, and it is an order of magnitude more powerful than the 15-billion transistor Pascal-based processor that Nvidia announced a year ago. It is a huge chip — 815 square millimeters, or about as big as an Apple Watch face. It has 5,120 CUDA processing cores, and it performs at 7.5 FP64 teraflops. The performance is about three times as fast as last year’s product.

Santa Clara, California-based Nvidia, the biggest maker of graphics and AI chips, made the announcement at its GPU Technology Conference in San Jose, California.

Learn how developers and brand marketers are using AI to grow their businesses, at MB 2017 on July 11-12 in SF. We cut through the hype to show how marketers are achieving real ROI. Get your 50% off Early Bird ticket by May 19!

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

Before he introduced the chip, Huang traced the recent history of AI. He noted that deep learning neural network research started to pay off about five years ago, when researchers started using graphics processing units (GPUs) to process data in parallel to train neural networks quickly. Since that time, deep learning has moved at an accelerated pace. And this year, Nvidia plans to train 100,000 developers to use deep learning.

For deep learning, the Tesla V100, also called Volta, can handle 120 Tensor teraflops. It can transfer data at 300 gigabits per second, or 20 times faster than other processors today. It is manufactured by Samsung for Nvidia.

Volta has 12 times the Tensor FLOPs for deep learning training compared to last year’s Pascal-based processor. This kind of processing speed is necessary because of the amazing advance of deep learning algorithms.

In 2015, Microsoft created a deep learning program dubbed ResNet that was so complex it required 7 exaflops of processing power. Baidu’s Deep Speech 2 AI required 20 exaflops in 2016, and Google’s NMT required 105 exaflops in 2017.

Microsoft is working on a new version of ResNet that chains 64 Volta chips together for processing. It’s not yet clear when the Volta will ship in large volumes.