
Google today announced a second generation of its tensor processing unit (TPU) chip, which the company calls a massive leap forward in its ability to train AI models.

Available through Google Cloud Platform, the second generation TPU promises faster training and running of AI models for researchers and businesses. It will also speed things up for Google itself, which now uses TPUs to power AI across its products, from the Google.com search engine to translation, computer vision, and other services.

CEO Sundar Pichai announced the news onstage on the first day of Google I/O, the tech giant’s annual developer conference.

A second generation TPU device can deliver up to 180 teraflops of computing performance. According to Jeff Dean, leader of the Google Brain team, that speed can cut the time it takes to train some AI models from weeks to hours or days.

“This long cycle time for experimentation means that some of the scarcest and most valuable time for machine learning engineers and research scientists is spent waiting days and weeks for the results of their experiments,” Dean said. “To put this in perspective, our new large-scale translation model takes a full day to train on 32 of the world’s fastest commercially available GPUs, while one-eighth of a TPU pod can do the job in just six hours.” (A TPU pod is a supercomputer built from many networked TPU chips.)
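Dean’s comparison concerns elapsed (wall-clock) training time. The following minimal Python sketch works through that arithmetic. It assumes “a full day” means 24 hours. The helper name `wall_clock_speedup` is hypothetical, and the calculation ignores cost and the different amounts of hardware on each side.

```python
# Figures quoted by Dean (assumption: "a full day" = 24 hours of wall-clock time).
GPU_WALL_CLOCK_HOURS = 24.0       # 32 GPUs, "a full day"
TPU_POD_EIGHTH_HOURS = 6.0        # one-eighth of a TPU pod, "just six hours"


def wall_clock_speedup(baseline_hours: float, new_hours: float) -> float:
    """Hypothetical helper: ratio of baseline elapsed time to new elapsed time."""
    if baseline_hours <= 0 or new_hours <= 0:
        raise ValueError("training times must be positive")
    return baseline_hours / new_hours


speedup = wall_clock_speedup(GPU_WALL_CLOCK_HOURS, TPU_POD_EIGHTH_HOURS)
print(f"{speedup:.1f}x faster wall-clock")  # prints "4.0x faster wall-clock"
```

By this reading, one-eighth of a pod finishes the job in roughly a quarter of the time. The article gives no figure for a full pod, so that number should not be extrapolated from this one.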

Little was known about Google’s tensor processing units, chips built specifically for machine learning, until last month, when a paper with 75 co-authors described the chip.

First generation TPUs debuted at I/O one year ago. They are now used in every Google search query, and they helped power AlphaGo, DeepMind’s Go-playing AI. TPUs have been part of AI services at Google for two years now, having operated out of public view for the first year.

First generation TPUs were designed for inference only. Second generation TPUs can handle both parts of the machine learning process: training and inference. They will work with TensorFlow, and they can be combined with CPU and GPU systems such as Intel Skylake processors and Nvidia’s Volta GPUs, which run models built with other frameworks like Caffe2.

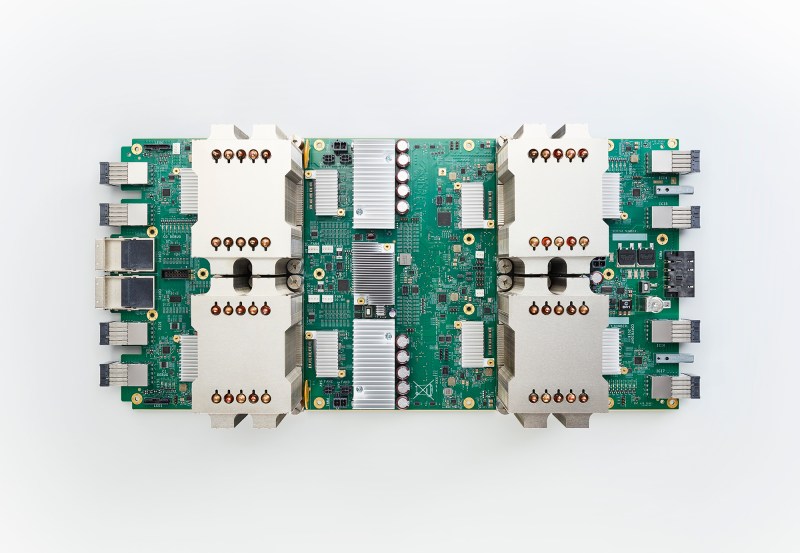

Above: Google’s second generation tensor processing unit chip

Today also marks the launch of the TensorFlow Research Cloud, an initiative to give top research institutions and scientists free second generation TPU access in exchange for sharing their work.

“Researchers given access to these free computational resources must be willing to openly publish the results of their research and perhaps even open source the code associated with that research,” Dean said.

Dean said that, like first generation TPUs, second generation TPU chips will play a role across the range of AI services Google provides. These range from translation to computer vision tasks such as identifying a product for sale.

“For our own internal use we’re still obviously using CPUs and GPUs internally for training some kinds of models, but over time I would expect us to use these TPU devices more and more for most of our training needs because we feel they have a really good trade-off in terms of making our fundamental site go faster,” Dean explained. “I think the TPU is more specialized, so there are certain kinds of workloads, not necessarily machine learning ones but other kinds of workloads that run well on GPUs that don’t necessarily run on a TPU. But for most of our machine learning workloads, we see TPUs as something we’ll be using more and more often.”

Fei-Fei Li, chief scientist of AI/ML at Google Cloud, said second generation TPUs will support Google’s efforts to democratize AI.

“We do see this burden as a vital part of democratizing AI,” Li said, talking about the growing size of AI models and the computational challenge it presents. “This is why I joined Google just a few months ago, to ensure that everyone can leverage AI to innovate, stay competitive, and solve the problems that matter most to them, regardless of technological proficiencies. We call this ‘democratizing AI’. It means supporting user choices and meeting our customers where they are with easy tools to take advantage of AI now.”

Earlier this month, Li joined Melinda Gates and Nvidia CEO Jensen Huang to create AI4All, an organization dedicated to encouraging AI adoption among communities underrepresented in AI.

To get access to the Cloud TPU Alpha program, fill out this form.