Watch all the Transform 2020 sessions on-demand here.

Nvidia wants its graphics processing units (GPUs) to handle the artificial intelligence computing and other heavy-duty parallel processing tasks in giant data centers. To that end, the company is launching a program that makes it easy for its big manufacturing partners to package its GPUs in AI supercomputers.

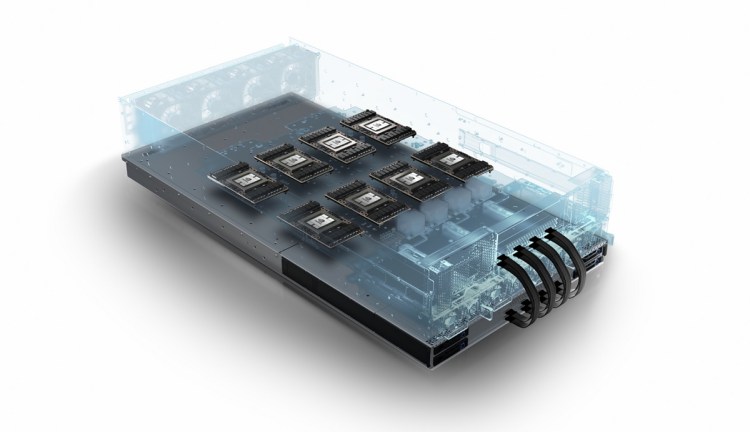

Nvidia HGX is a kind of starter recipe for original design manufacturers (ODMs) — Foxconn, Inventec, Quanta, and Wistron — to package GPUs in data center computers, said Ian Buck, general manager of accelerated computing at Nvidia, in an interview with VentureBeat. Nvidia CEO Jen-Hsun Huang is announcing HGX at the Computex tech trade show in Taiwan today.

HGX has already been used as the basis for the Microsoft Project Olympus initiative, Facebook’s Big Basin systems, and Nvidia DGX-1TM AI supercomputers. Using the recipe, ODMs can quickly design GPU-based systems for hyperscale data centers. Nvidia engineers will work closely with ODMs to minimize the amount of time to deployment.

As the overall demand for AI computing resources has risen sharply over the past year, so has the market adoption and performance of Nvidia’s GPU computing platform. Today, 10 of the world’s top 10 hyperscale businesses are using Nvidia GPU accelerators in their data centers.

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

And soon Nvidia will ship its Volta-based AI GPUs with three times the performance of the predecessor chips.

“The growth of AI is really happening, and that is driving interest in GPUs in the cloud,” said Buck. “Every major cloud computing provider is adopting GPUs, including Google, Amazon Web Services, Tencent, Alibaba, and Microsoft Azure. We’re going to work closely with the Taiwanese ODMs to build servers that power the data centers for the cloud.”

Nvidia built the HGX platform to meet massive scaling tasks. HGX can combine GPUs and central processing units (CPUs) in a variety of ways for high-performance computing, deep learning training, and deep learning inferencing. All of those are important in modern AI processing.

“Working more closely with Nvidia will help us infuse a new level of innovation into data center infrastructure worldwide,” said Evan Chien, head of IEC China operations at Inventec, in a statement. “Through our close collaboration, we will be able to more effectively address the compute-intensive AI needs of companies managing hyperscale cloud environments.”

The standard HGX design includes eight Nvidia Tesla GPUs, connected in a mesh using Nvidia’s NVLink high-speed interconnect system. Both Nvidia Tesla P100 and V100 (Volta-based) GPU accelerators are compatible with HGX. This allows for immediate upgrades of all HGX-based products once V100 GPUs become available later this year. A typical server with the Nvidia technology will soon be able to process 960 teraflops in deep learning applications, compared to two teraflops for typical CPU servers today, Buck said.

“We are defining the server architecture for AI in the cloud that can standardize across everybody,” Buck said. “Taiwan builds the world’s servers, and this is a standard server platform. It’s going to enable a massive performance difference.”