Virtual reality makes you feel like you’re in another world. But as soon as you try to use your hands, the illusion breaks. That’s why ManoMotion has used computer vision technology to create a low-cost way to inject your hands and the gestures you make into animated scenes in real time.

The company is releasing a software development kit (SDK) today that provides developers and users with the tools to incorporate hand gestures into animated applications for virtual reality, augmented reality, and mixed reality.

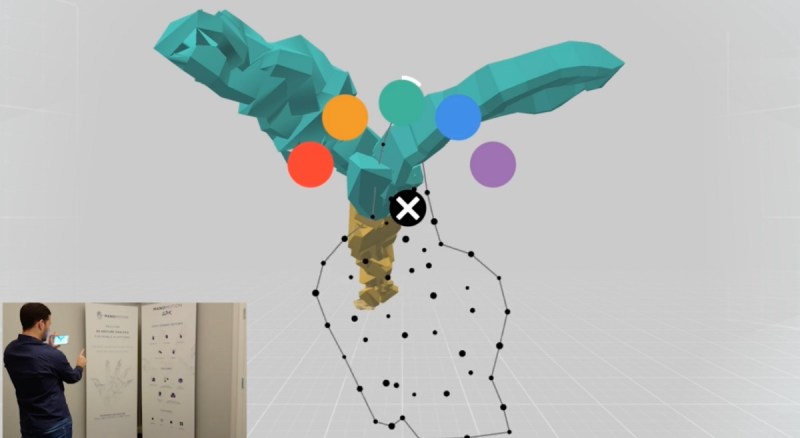

Daniel Carlman, CEO of Stockholm, Sweden-based ManoMotion, showed me a demo of the technology working (see the video). The single two-dimensional camera on his smartphone was able to detect the movement of his hands and inject them into an animation built with the Unity game engine. This effect is possible with other technologies, such as multiple cameras, depth cameras, and motion-capture studios. But those technologies are expensive, and ManoMotion has figured out how to do what it calls “hand presence” on a budget.

Above: ManoMotion may let you use your hands in VR.

“We want you to reach out in a VR space and manipulate those objects,” Carlman said in an interview. “This is a stepping stone to a better user interface. Ultimately, we think that VR will need to command voice, eye-tracking, and gesture interfaces.”

ManoMotion’s 3D gesture recognition technology tracks many of the 27 degrees of freedom (DOF) of motion in a hand, Carlman said. It also tracks depth and handles dynamic gestures (such as swipes, clicks, taps, grabs, and releases), all while consuming very little CPU, memory, and battery. The latency, or delay in interaction, is less than 10 milliseconds, and the software uses only about 30 percent of the processing power of an iPhone 6s.

By comparison, rival technology from Leap Motion uses 3D sensors. And VR headsets such as Oculus Rift and HTC Vive require you to hold controllers in your hands that don’t completely capture your finger movements.

Above: ManoMotion can detect what your finger is pointing at in VR.

“We can understand if you are grabbing or pushing something,” Carlman said. “When it comes to depth, we have centimeter precision. And we think we can do this at a fraction of the costs of the alternatives.”

Until now, ManoMotion has been working with customers on a one-on-one basis. The new SDK will let far more developers get their hands on the technology. ManoMotion is offering the SDK under a freemium model, with tiers to fit different customer needs.

With the software, you will be able to see your actual hands and move objects in VR/AR/MR spaces. You can manipulate objects with your right hand or left hand. The software recognizes if you are spreading your fingers, pinching, or pointing at a particular object. It can also detect dynamic gestures, such as swipes and clicks. That means you can use your fingers to push buttons or pull levers in a VR app. ManoMotion supports both iOS and Android, and it comes with a Unity plug-in.
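To make the difference between a static pose (such as a pinch) and a dynamic gesture (such as a swipe) concrete, here is a minimal sketch in Python. It is not ManoMotion's SDK or algorithm; the `HandFrame` type, the function names, the normalized coordinates, and the thresholds are all hypothetical, chosen only to illustrate the idea that a pose can be read from a single frame while a dynamic gesture needs motion across several frames.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in normalized image coordinates, 0..1


@dataclass
class HandFrame:
    """One frame of hypothetical hand-tracking output (not a real SDK type)."""
    thumb_tip: Point
    index_tip: Point
    palm_center: Point


def is_pinching(frame: HandFrame, threshold: float = 0.05) -> bool:
    """Static pose: thumb and index fingertips are close together in one frame."""
    dx = frame.thumb_tip[0] - frame.index_tip[0]
    dy = frame.thumb_tip[1] - frame.index_tip[1]
    return hypot(dx, dy) < threshold


def detect_swipe(frames: Sequence[HandFrame], min_travel: float = 0.3) -> Optional[str]:
    """Dynamic gesture: net horizontal palm travel across a window of frames.

    Returns "left", "right", or None if the palm did not move far enough.
    """
    if len(frames) < 2:
        return None
    travel = frames[-1].palm_center[0] - frames[0].palm_center[0]
    if travel >= min_travel:
        return "right"
    if travel <= -min_travel:
        return "left"
    return None
```

An app could call `is_pinching` on every frame to decide whether a virtual object is being held, and run `detect_swipe` over a short sliding window of recent frames to trigger actions such as turning a page. A production tracker would also have to account for depth, hand orientation, and noise, which this sketch ignores.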

Founded in 2015, ManoMotion has 14 employees. It is headquartered in Stockholm and has an office in Palo Alto, California. The team started out with the goal of measuring hand gestures precisely with the smallest possible technical requirements. The company is releasing the SDK at the Augmented World Expo event in Santa Clara, California. Carlman said the first applications will be coming soon.

To date, ManoMotion has raised $2 million. The cofounders include Carlman; Shahrouz Yousefi, chief technology officer; and Haibo Li, an adviser and a professor of media and technology at KTH Royal Institute of Technology.
