
Qualcomm is eager for virtual reality and augmented reality to succeed, since it will supply the chips that power the hardware behind “extended reality,” delivered by ordinary-looking glasses that can be used for virtual reality or augmented reality applications.

Tim Leland, vice president of product management at Qualcomm, gave a talk this week at Augmented World Expo in Santa Clara, Calif., where all the major tech companies — Google, Intel, Qualcomm, Microsoft — and many others had augmented reality technology on display. Leland explained that there are plenty of obstacles to extended reality at present and urged the industry to work collectively to bring the future into focus faster.

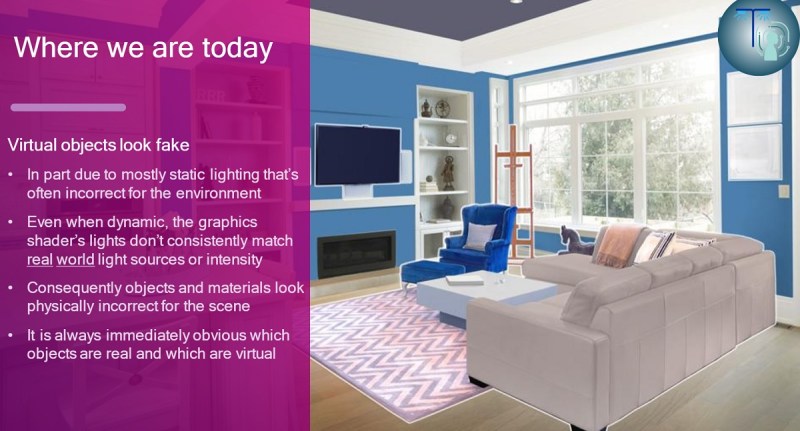

He said that AR animations, overlaid on the real world, still look fake. For instance, if AR objects are inserted into the real world but don’t cast shadows, they clearly won’t fit seamlessly in the landscape. But enabling accurate lighting and shadows will require a lot more horsepower, and that will tax battery life.

In his talk, Leland showed what kind of hardware the future will require and laid out all of the problems the technology is facing. We talked further about these challenges in an interview. Here’s an edited transcript of our conversation.

Above: Tim Leland of Qualcomm talks about extended reality.

GamesBeat: What kind of outlook do you have for mixed reality, extended reality?

Tim Leland: We’ve hit a milestone. We mentioned we were building Snapdragon 835 VR HMD dev kits with full tool support. We’re bringing those up right now. We’re still on schedule to ship out to developers. We’ll go wide with that program this summer. We’re still executing on our HMD accelerator program. We have all these reference designs available for OEMs if they want to build an HMD based on Snapdragon 835.

As far as AR, we’re going full speed ahead. ODG has been a great partner to us. The first time we’d ever done an announcement of a new flagship product with a non-smartphone customer was ODG, here, at CES. That was a vote of confidence. We believe in their technology. We believe in AR.

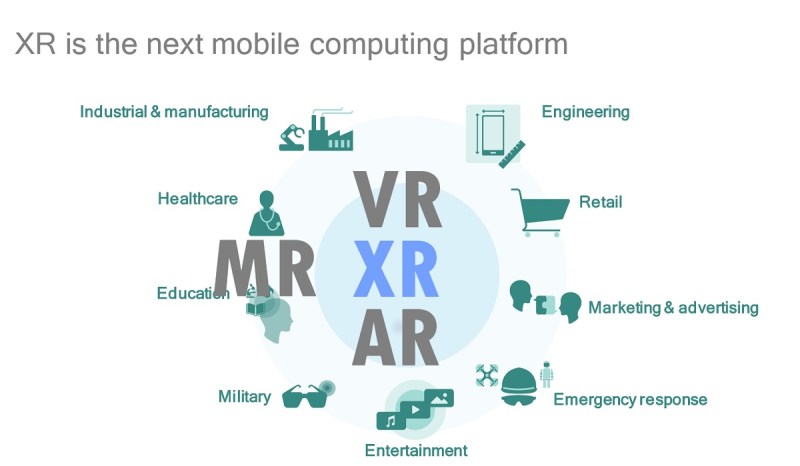

At this event I’m going to be presenting on what we’re calling “extended reality,” or “XR.” It’s an umbrella term to encapsulate VR, AR, and MR. It’s everything. Where we see things going in the future is a converged device that does all of it. It can do VR, with a display that goes completely opaque for that. It can do AR in the same mass-market consumer device. Six degrees of freedom. Inside out tracking for all the head movement. Support for more advanced use cases in eye tracking, and also hand tracking.

Looking further down the road, we have this partnership with Leap Motion. Their technology makes VR much more fun and easy to use. We’ll continue to work with them, accelerating some of their algorithms in our heterogeneous compute units inside of Snapdragon to get even better performance. We’re still working with SMI on the eye tracking side. We have a lot of good partnerships on the algorithmic development of the computer vision aspects of AR and VR. We’ll keep pushing those, especially now that we have these dev kits. We’ll have a great platform to continue to refine some of these algorithms with partners.

GamesBeat: Where are you making progress?

Leland: I don’t know if you were at Google I/O, but there was the announcement there. We’ve been working with Google. There were multiple Daydream smartphone announcements, and two HMDs were announced. One was Lenovo and the other was HTC. I can’t go into too many details on what we’re doing with Google, but we’re very close partners on different form factors of VR devices. Also, we’re working with some unannounced partners on very influential HMD designs going forward. All of which we believe will ship at the end of the year or early next year, with new products on our side being announced toward the end of the year as well.

We’ve been very busy on SDKs to make sure we have better development tools. We’ve been refining our six degrees of freedom head motion tracking algorithm, so it works with binocular cameras instead of just a mono camera. It’s even more accurate and uses lower power.

For the rest of the presentation I’ll introduce a few problems that I think the ecosystem needs to solve together. There will be areas where all these companies compete, but there are common areas where we should be working to solve some difficult problems. One of those areas I have broken out into five pieces here.

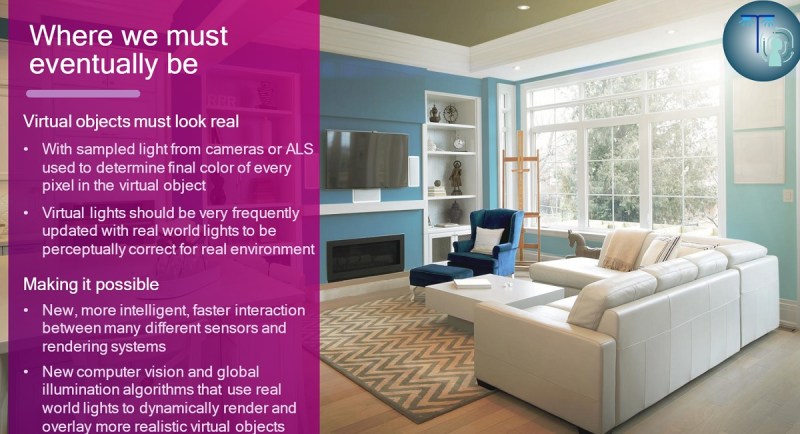

If you have a virtual object that you’re placing in the real world, you might draw it correctly. It might be anchored correctly. But if it’s not lit correctly, it’s still going to look fake. If you look at the top one here, that’s the same model as the bottom one, but the bottom is using the real light as the light source. That’s an area where we’ll be working on all aspects of foveation, whether it’s standardizing and improving foveated graphics rendering to improve visual quality at lower power consumption, or foveation of video, the viewport-aware adaptive streaming. When the upstream video service knows you’ve turned your head, it’ll then start refocusing the bitrate to that part of the environment map so you get the best visual quality with reductions in power consumption.

It’s a lot of stuff. This is a brand new market and a huge amount of work. The smartphone could eventually become a large segment of the market in XR wearables. There will be no cables in this type of market. Any type of cable is just a non-starter. Fans will not exist. We think there might be a niche market for glasses that maybe stream to a PC, but that’s a small part of it. The big part is everything self-contained in a mobile device. All the visual processing systems are very close to the inertial sampling systems, so everything is very fast, very low motion to photon latency, or for AR, very low photon to photon latency. We’ll just have to solve some of the power problems together with the ecosystem. I list some of those in the presentation.

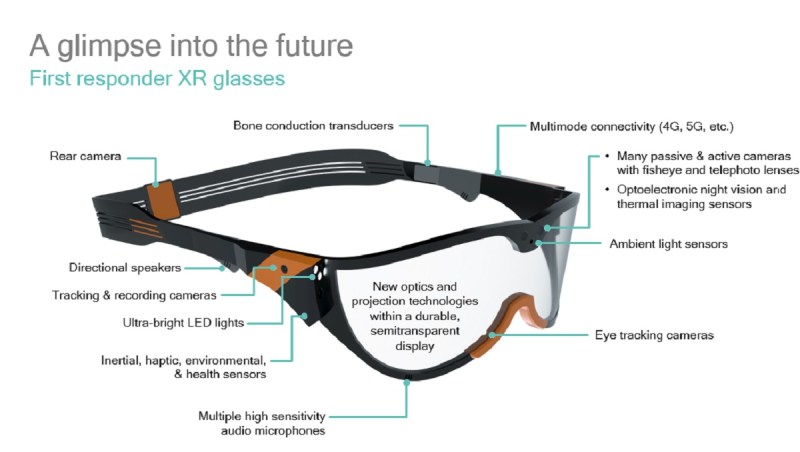

Above: Qualcomm’s vision for the “extended reality” glasses of the future.

GB: The lighting issue seems like a big problem, how to make things look real. But then you have to know where the lighting is in the real world. You have to sense that somehow.

Leland: It’s a worthy problem for a company that spends a lot of time on visual processing like we do. We’ll be working with middleware companies. I’ve talked to a couple already, operating system guys. At events like this sometimes we just like to throw out one of the difficult problems that we think is worth solving. It gets the debate started. Those that are willing to jump in with us and do some joint R&D sometimes — that’s how we get things moving forward.

GB: Are you guys making any predictions as far as how soon things will happen?

Leland: I really do think the analogy to smartphones is a good one. Unfortunately, it’s been copied so much, but we came up with this first slide maybe two years ago. We’ve been updating it over time. If you think of this as a 30-year cycle, for phones this would have been about 1987. We’re somewhere in the ‘90s in this analogy. By 2020, somewhere in that time, we think we will enter this phase of the technology — rapid evolution, surging consumer adoption — as these devices become more socially acceptable. They’ll be smaller. They’ll work better. The content is coming. That’ll be the steep part of the hockey stick, where we keep marching along the next 15-20 years after that.

There’s still money to be made along the way. There will be volumes in here. But out in this time frame we’re talking hundreds of millions of units per year. We think that’s possible. It’s especially possible if you bring different experiences to the same device, more value to the same device, which is sub-$1,000. It can do VR and AR. It doesn’t require a lot of setup. It just works.

It’s awesome to see Facebook put a bunch of effort into what they’re building. Google is doing a great job of funding content for VR. They just acquired a company we invested in, Owlchemy. That was a good acquisition on their side. All arrows are pointing in the direction that mobile is the future and convergence is happening. That’s how we see the world right now.

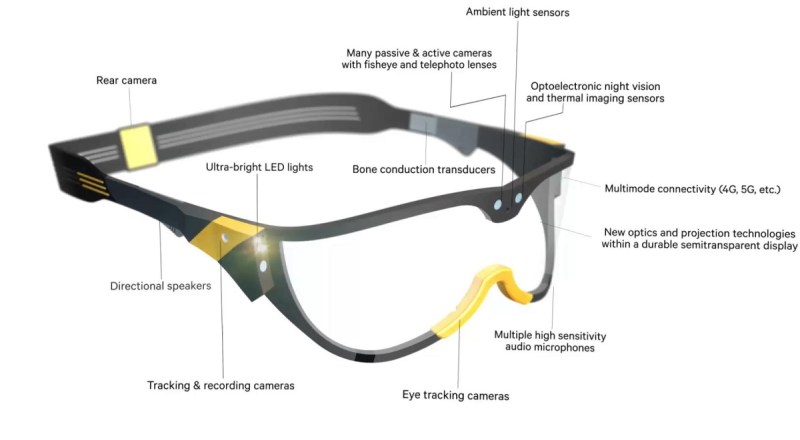

Above: All the sensors for extended reality glasses.

GB: Do you have a splintering as well, when you get to other kinds of devices that could happen once the tech was better?

Leland: Even within HMDs — there will be HMDs that are specific to different types of jobs or tasks. They could be purpose-built for a particular job. We go through an example in here about a first responder, a firefighter. It shows what that might look like. We had an early mockup of this for the New York event and we’ve been refining it. You wouldn’t imagine that you’d just wear these glasses around, but if it was a mission critical part of your job, something that made your job easier and safer, you might.

But the mass market will be glasses that look more like what we wear around anyway. Maybe a bit bigger and heavier, but it can’t be too much. This is the kind of thing where people decide that they don’t need a smartphone. They can just get these glasses. Much like people started deciding they didn’t need a laptop when they could do everything on their phone. Not everybody did that, but a big swathe of consumers did. That may be the case as mobile evolves and changes form factor.

Above: The can without a shadow doesn’t look real.

GB: On the game side, are you envisioning anything in particular?

Leland: It’ll be interesting to see where AR gaming goes. It’s been around a while. I got a Nintendo 3DS a while ago, and that was fun. As the ability to place, draw, and light virtual objects appropriately in real-world settings improves, it could be a lot of fun for kids too. You don’t have to get out all these different board games. Put on the glasses, load a program, there it is. The game is all set up right in front of you. You can move pieces and draw cards. We might even see a revival of the old games we used to play as kids. Now it’s just an augmented version.

For VR there’s going to be — all the PC and console games will probably be rejigged for VR. Part of the market will go crazy for that. We make an SDK. We have a team that supports game developers. We’re working with Tencent. We’re pretty active in the gaming space. There’s another big publisher over in China that we can’t talk about yet, but we’re working with them. We’re very closely partnered with Unity to make sure that engine — which is the foundation of almost every VR game in development, it feels like — is well-optimized for Snapdragon.

Above: Augmented reality looks fake today.

GB: In that 30-year cycle, where do you see something like 5G coming into it? It seems to make it much more useful.

Leland: It sure does. Where that will come into play is most likely for video. If you look where we are today, 4K Ultra HD, 30fps, 360 degrees… We’re probably not completely there. It’s just starting to come online for broadcast video and streaming from other services. In the next decade, though, it’s going to go crazy. It’ll be 8K, up to 120fps, all high dynamic range. We’ll have the concept of the free viewpoint, where all the video has been captured from multiple angles, so it’s almost like computer graphics. You can walk around and interact with video. The major sports franchises are very interested in this part.

This is going to require multiple gigabits per second. Importantly, because this is interactive, you want low latency. With 5G, we’re improving the latency, the responsiveness with other applications, by 10 times. It’s down to a millisecond. The other important thing is you don’t have to stand right next to the cell tower to get that. The 5G experience is much more uniform as you’re walking away from the cell tower. You don’t see the same kind of reduction in throughput and latency.
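To put rough numbers on the jump Leland describes, here is a back-of-the-envelope sketch in Python. The pixel dimensions (3840x2160 for 4K, 7680x4320 for 8K), the 15 bits per pixel (10-bit color with 4:2:0 chroma subsampling), the 100:1 compression ratio, and the eight camera views for free-viewpoint capture are all illustrative assumptions, not figures from the talk.

```python
# Back-of-the-envelope video bandwidth estimate.
# All constants below are assumptions chosen for illustration.

BITS_PER_PIXEL = 15        # assumed: 10-bit samples, 4:2:0 chroma subsampling
COMPRESSION_RATIO = 100    # assumed codec efficiency (hypothetical)
FREE_VIEWPOINT_VIEWS = 8   # assumed number of camera angles (hypothetical)


def raw_bitrate_bps(width, height, fps, bits_per_pixel=BITS_PER_PIXEL):
    """Uncompressed bitrate in bits per second for one video stream."""
    return width * height * fps * bits_per_pixel


def compressed_bitrate_bps(raw_bps, ratio=COMPRESSION_RATIO, views=1):
    """Approximate delivered bitrate after compression, for `views` streams."""
    return raw_bps * views / ratio


today = raw_bitrate_bps(3840, 2160, 30)      # 4K at 30fps
future = raw_bitrate_bps(7680, 4320, 120)    # 8K at 120fps

print(f"4K30 raw:  {today / 1e9:.2f} Gbps")
print(f"8K120 raw: {future / 1e9:.2f} Gbps")
print(f"Increase:  {future // today}x")

multi_view = compressed_bitrate_bps(future, views=FREE_VIEWPOINT_VIEWS)
print(f"8K120, {FREE_VIEWPOINT_VIEWS} views, compressed: {multi_view / 1e9:.2f} Gbps")
```

Under these assumptions the raw pixel rate grows 16 times between the two formats, and a multi-angle stream lands in the multi-gigabit range even after heavy compression. Different codecs or view counts would move the result considerably.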

Above: This is how real augmented reality should look.

GB: It also seems to have the effect of lightening up the glasses, if you don’t need as much processing or hardware in them.

Leland: For video, that’s more latency-tolerant. The video content will be from Netflix, YouTube, somebody else’s server connected to their drones, somebody else’s glasses. But for graphics, we believe most of that will be rendered on the device. Graphics is more latency-intolerant. Outside of the view frustum, nothing exists in graphics. You wouldn’t do that. You wouldn’t burn unnecessary power.

But for video, you’re taking a scene and wrapping it around a sphere or a rectangular projection. The benefit of this interaction latency — even if you can’t interact with the video, if you have lower latency as you turn your head, you can alert the system that’s streaming to you. “Okay, focus the highest bitrate on this part of the video for the best visual quality.” That’s called viewport aware adaptive streaming. Google is investing big on that, and others as well.

There’s going to be a mix of a lot of processing, particularly visual processing, in the HMD itself, but you want to have high connectivity over 5G to these internet services for the stuff that’s less latency-intolerant. It’s going to be killer. XR video is going to be the killer application for 5G.
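The idea behind viewport-aware adaptive streaming can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's actual implementation: it assumes the 360-degree video is split into horizontal tiles, and the function names, the 90-degree field of view, and the 0.1 background weight are all hypothetical choices.

```python
# Minimal sketch of viewport-aware bitrate allocation for tiled 360 video.
# Tile layout, field of view, and weights are illustrative assumptions.

def angular_distance(a_deg, b_deg):
    """Smallest angle in degrees between two headings on a circle."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def allocate_bitrate(head_yaw_deg, tile_centers_deg, total_kbps,
                     fov_deg=90.0, background_weight=0.1):
    """Split a bitrate budget across tiles, favoring those in the viewport.

    Tiles whose center lies within half the field of view of the head
    direction get full weight; all others get a small background weight
    so that something is on screen if the viewer turns quickly.
    """
    weights = []
    for center in tile_centers_deg:
        in_view = angular_distance(head_yaw_deg, center) <= fov_deg / 2.0
        weights.append(1.0 if in_view else background_weight)
    total_weight = sum(weights)
    return [total_kbps * w / total_weight for w in weights]


tiles = [0.0, 90.0, 180.0, 270.0]                 # four tiles around the viewer
looking_ahead = allocate_bitrate(0.0, tiles, 20000)
looking_behind = allocate_bitrate(180.0, tiles, 20000)
print(looking_ahead)
print(looking_behind)
```

When the reported head direction changes from 0 to 180 degrees, the bulk of the budget moves from the first tile to the third. The faster the server learns about the head turn, the sooner the viewer sees the high-quality tile, which is where low network latency helps.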

GB: What do you think is the answer once people get to a point where they can’t necessarily tell what’s real and what’s not?

Leland: I do think it’s going to happen. “When?” is a good question. Part of that depends on the viewer. If you’re like me and you do this every day and you know what flaws to look for, we may be the guys who take longer. For the average consumer, though, when do they say it’s just like real life? I don’t know. It won’t be for a few more years. Sometime in the middle of the next decade, maybe, we could get to a point where quite a few applications are indistinguishable from reality.

Above: Qualcomm believes extended reality will unfold over decades.

GB: Tony Parisi of Unity was saying, “If you think we have a problem with fake news now, wait until you can actually fake what people see.”

Leland: [laughs] We’re swimming in the same direction as Tony. We’re pretty closely partnered with those guys. We have a common view of the world. It’s moving toward mobile. The different markets that graphics could service within VR are pretty immense. I don’t think a week goes by that we’re not talking to Unity in some fashion. We’re pretty heavily engaged with them, just like Google and other similar companies.

GB: Any other key conclusions in your talk?

Leland: These problems we’re talking about, there is no one company that can solve them. There’s no half a dozen companies. They’re not even just hardware problems. Even on power consumption, there’s a software element. There are times when we’ll compete with different companies, but there’s a lot of room to come together. It’s time we start agreeing on some of the goalposts for these difficult problems. Like field of view – do we go after more than 190 degrees? Do we just stick with 190 degrees on the horizontal but optimize the heck out of everything in the middle?

It’s time for the industry to start agreeing on the fundamentals as best we can. We can still compete in areas where we agree, but if we don’t start working together it’s going to stagnate the evolution of these high-volume devices that are waiting out there. Make products that consumers want to buy and they’ll want to buy them. Volumes will go up. If we just try to repurpose old PC-era antiquated architectures and create a market out of what we have, don’t be surprised when consumers don’t react and you have this gap of disappointment.

We’re focused on trying to make products with our partners that consumers want to buy. We’re focusing on some of these problems and we’re eventually going to fix them.

Above: The vision for extended reality.

GB: I could see definite problems in the input systems. There’s going to be so many ways to do input. Whether or not you’ll have touch feedback or detect one finger or detect all 10 fingers–

Leland: Our take on that: in the near term there are six degrees of freedom controllers that are ramping up at various stages. There’s some nice consortium activity going on in the OpenXR community at Khronos to standardize the APIs that those different controllers will work with, so there’s not this fragmentation problem for developers. But in the long term, as we’ve been saying, the best controller is no controller, using computer vision and machine learning technologies to predict and interpret gestures rapidly. For VR, 3D reconstruction brings in your own hands, overlaid on the models being used in VR, so it’s even more immersive.

GB: Does that then create a new problem, though, where you can’t feel it?

Leland: There will probably be some accessories related to the tactile aspect. I’m hearing about people working on smells, as well. A lot of companies are working on sound-related technologies that you could relate to haptic feedback. A year ago, the ability to move and see your hands seemed impossible, but now that’s normal in the virtual environment.

GB: Does anything seem like it’s going to be too difficult? I get that feeling about eye tracking, whether you can control things with your eyes.

Leland: It feels like it’s more of a complementary technology. You’d have certain interfaces that would not completely rely on eye tracking to complete the entire interaction. It would be more assistive. I’m looking around a room; it’ll keep track of where I’m looking. Therefore, if I just put out my hand, it knows I’m looking at the door handle over there. It simplifies that interaction, but it doesn’t rely solely on gaze. The eye tracking complements the body and hand motion tracking and interaction.