The Chinese are racing ahead with artificial intelligence research, and Nvidia is helping make that possible, as evidenced by several announcements the graphics chip maker’s CEO, Jen-Hsun Huang, made today at a big AI event in Beijing, China.

Santa Clara, California-based Nvidia has pivoted over the past decade, adapting its graphics chips to do artificial intelligence processing. And a number of big Chinese companies — from Baidu to Tencent — are endorsing Nvidia’s approach. That’s important in a world where 60 billion video frames are uploaded per day to YouTube, and where Google Translate translates 140 billion words per day.

Of the multiple announcements made at Nvidia’s GPU Technology Conference in Beijing, the most interesting could be about Nvidia’s TensorRT software, which dramatically accelerates AI inferencing, or the process by which an already-trained neural network recognizes an object or pattern in new data. Inferencing at scale can take a huge amount of computing power, but Nvidia’s TensorRT enables inferencing to be done as much as 40 times faster, slashing the cost of inferencing on Nvidia-based AI hardware.

“AI inferencing is exploding,” said Paresh Kharya, Nvidia’s product marketer for accelerated computing, in an interview with VentureBeat.

Above: JD.com is getting into the drone business with Nvidia.

With better inferencing, we’ll get much faster image and speech recognition, which in turn will improve everything from self-driving cars to robots. Running on Nvidia Tesla graphics processing units, the TensorRT software can process inference workloads up to 40 times faster than central processing units (CPUs) at a tenth of the cost.

“Internet companies are racing to infuse AI into services used by billions of people. As a result, AI inference workloads are growing exponentially,” said Nvidia’s Huang, in a statement. “Nvidia TensorRT is the world’s first programmable inference accelerator. With CUDA programmability, TensorRT will be able to accelerate the growing diversity and complexity of deep neural networks. And with TensorRT’s dramatic speed-up, service providers can affordably deploy these compute intensive AI workloads.”

More than 1,200 companies have already begun using Nvidia’s inference platform across a wide spectrum of industries to discover new insights from data and deploy intelligent services to businesses and consumers. Among them are Amazon, Microsoft, Facebook, and Google, as well as leading Chinese enterprise companies like Alibaba, Baidu, JD.com, iFlytek, Hikvision, Tencent, and WeChat.

TensorRT 3 is a high-performance optimizing compiler and runtime engine for production deployment of AI applications. It can rapidly optimize, validate, and deploy trained neural networks for inference to big data centers and other AI platforms.

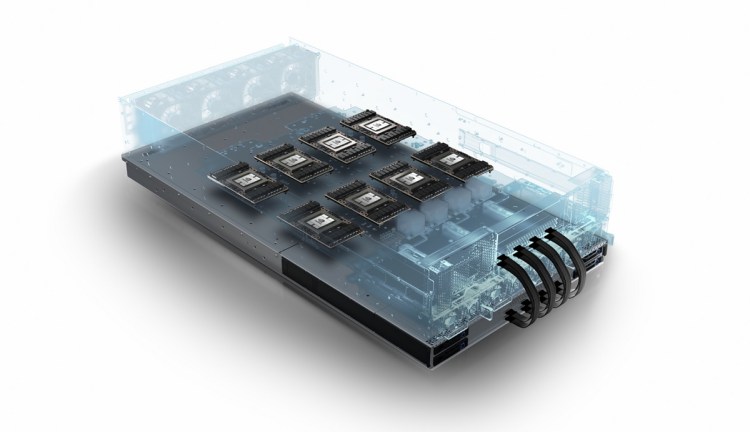

In other news, Huang said that China’s big hardware makers — Huawei, Inspur, and Lenovo — are using the Nvidia HGX AI server technology across their data centers. Nvidia is providing those companies with early access to its HGX reference designs for data centers. Those companies will use HGX as a starter recipe for using graphics processing units (GPUs) in the data center. The HGX has eight Nvidia Tesla V100 GPUs on a single server board.

“The V100 deployment in Baidu, Alibaba and Tencent are extremely remarkable,” said analyst Chirag Dekate at Gartner, in an email. “Hyperscale/webscale companies like Baidu, AWS, Azure, Tencent, Alibaba, and others are working aggressively to provision [service] solutions for deep learning environments. And users are increasingly exploring clouds as a means of starting their AI journeys. Nvidia through its global strategy of engaging leaders across cloud service providers is embedding itself to be the main architecture of choice for deep learning workloads.”

Above: A delivery van of the future from JD.com

Nvidia also announced today that Alibaba, Baidu, and Tencent are upgrading their data centers and cloud-service infrastructures with new Volta architecture-based Nvidia Tesla V100 GPU accelerators.

In addition, Nvidia announced that its Jetson-based robotics platform will be used by ecommerce firm JD.com in its JDrone and JDrover automated delivery drones and robots. Nvidia and JD.com are collaborating on using AI in logistics and delivery machines. JD.com’s JD X lab is doing pilot testing of drones and robots to deliver packages to consumers. JD X estimates it will release more than 1 million drones over the next five years, and it plans to use Nvidia’s Jetson platform.

“AI is the most important technology development of our time, with the greatest potential to help society,” Huang said. “As the world’s leading cloud providers deploy the world’s best AI platform, with Volta GPUs and Nvidia software, we’ll see amazing breakthroughs in medicine, autonomous transportation, precision manufacturing, and much more.”

Lastly, Nvidia said that Alibaba and Huawei are adopting Nvidia’s Metropolis AI Smart Cities platform, which simplifies the process of getting data from Internet of Things sensors, such as smart traffic lights, to data centers where the information can be analyzed and used to improve overall traffic flow. A total of 50 companies are currently using Nvidia’s Metropolis platform.