Google today announced that its Lens AI is being rolled out for Google Assistant in Pixel and Pixel 2 smartphones. Lens uses computer vision to do things like detect landmarks or extract phone numbers, email addresses, and text from real-world objects like billboards or marquees. Lens can also scan barcodes and recognize video games, movies, books, and other works of art.

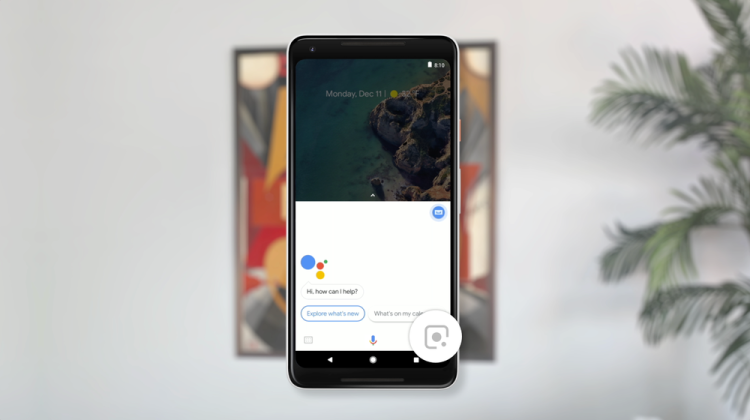

In the weeks ahead, Lens will roll out in Google Assistant for English speakers in Canada, India, Singapore, the U.K., and the United States, Google Assistant product manager Ibrahim Badr said today in a blog post. Android Police spotted Lens in Google Assistant in recent days. While Google Assistant is in use, Lens can be activated by tapping the photo icon in the bottom right-hand corner.

Lens for Google Photos with Pixel smartphones was first made available last month. At that time, a VentureBeat review of the Pixel 2 found Lens had trouble recognizing some fairly well-known landmarks, but did a pretty good job of capturing text from the sides of vehicles.

In September, Google engineer Behshad Behzadi demonstrated upcoming features, showing that Lens with Google Assistant delivers information in real time and will soon be able to perform tasks like converting currency or telling you how many calories are in an apple.

While users can expect to see a host of new Google Assistant features in the weeks ahead, Lens is one of a handful of perks Google is currently sharing exclusively with users of its Pixel or Pixel 2 smartphones. Another is the Now Playing feature, which detects songs much as Shazam does.