Nvidia has launched hardware and software improvements to its deep learning computing platform that deliver a tenfold performance boost on deep learning workloads compared with the previous generation of six months ago.

In the past five years, programmers have made huge advances in AI. The first step is training deep learning neural networks on existing data, which allows a network to recognize an image of a cat, for instance. The second step is inferencing, or applying what the network has learned to data it has never seen before, like spotting a cat in a picture the network has never been shown.
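The split between training and inferencing can be illustrated with a toy example. The following is a minimal sketch, not anything Nvidia ships: it assumes a single artificial neuron, two made-up features per image (the "ear pointiness" and "whisker length" values are hypothetical), and plain gradient descent. Real deep learning models have millions of parameters and run on GPUs, but the two phases are the same.

```python
import math


def sigmoid(z):
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=2000, lr=0.5):
    """Training: fit one neuron's weights to labeled, existing data."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log loss w.r.t. the raw score
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def infer(model, x):
    """Inferencing: apply the trained weights to data never seen before."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5


# Hypothetical features: [ear pointiness, whisker length]; label 1 = cat.
train_x = [[0.9, 0.8], [0.8, 0.9], [0.1, 0.2], [0.2, 0.1]]
train_y = [1, 1, 0, 0]

model = train(train_x, train_y)

# Two inputs that were not part of the training set.
print(infer(model, [0.85, 0.75]))
print(infer(model, [0.15, 0.25]))
```

Training is the expensive loop that runs many passes over the data, while inferencing is a single cheap pass per input, which is why the two are often tuned and deployed separately.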

At the GPU Technology Conference (GTC) event in San Jose, California, Nvidia CEO Jensen Huang didn’t announce a new graphics processing unit (GPU). Rather, he described improvements to the overall system that deliver the better results.

The improvements include a twofold memory boost for the Tesla V100, Nvidia’s datacenter GPU. Nvidia also created a kind of freeway cloverleaf for data transfer, a GPU interconnect fabric dubbed the Nvidia NVSwitch, which enables 16 Tesla V100 GPUs to communicate with each other simultaneously at a speed of 2.4 terabytes per second. And, finally, Nvidia launched an optimized software stack.

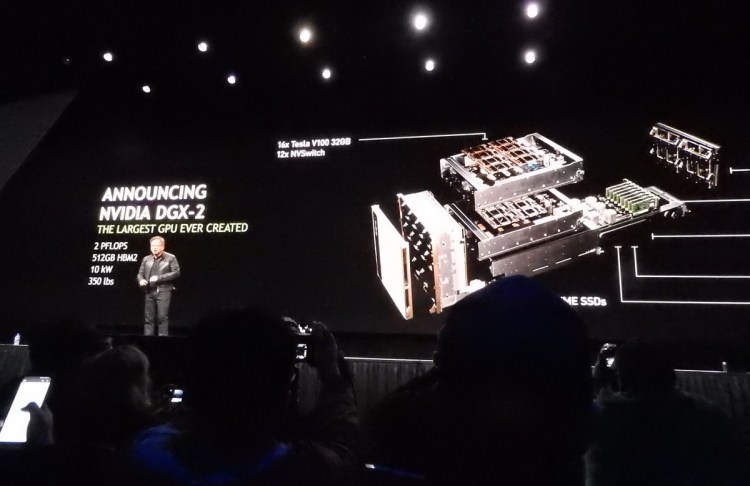

Huang also announced a major breakthrough in deep learning computing with Nvidia DGX-2, the first single server capable of delivering two petaflops of computational power. DGX-2 has the deep learning processing power of 300 central processing unit (CPU) servers occupying 15 racks of datacenter space, while being 60 times smaller and 18 times more power efficient.

“The extraordinary advances of deep learning only hint at what is still to come,” said Huang. “Many of these advances stand on Nvidia’s deep learning platform, which has quickly become the world’s standard. We are dramatically enhancing our platform’s performance at a pace far exceeding Moore’s Law, enabling breakthroughs that will help revolutionize health care, transportation, science exploration, and countless other areas.”

Now equipped with 32GB of memory, Tesla V100 GPUs will help data scientists train deeper and larger deep learning models that are more accurate than ever. They can also improve the performance of memory-constrained HPC applications by up to 50 percent compared with the previous 16GB version.

The Tesla V100 32GB GPU is immediately available across the complete Nvidia DGX system portfolio. Additionally, major computer manufacturers Cray, Dell EMC, Hewlett Packard Enterprise, IBM, Lenovo, Supermicro, and Tyan announced they will begin rolling out their new Tesla V100 32GB systems within the second quarter.

Oracle Cloud Infrastructure also announced plans to offer Tesla V100 32GB in the cloud in the second half of the year.

“We are clearly outstripping Moore’s Law with deep learning advances,” said Ian Buck, vice president and general manager of accelerated computing at Nvidia, during a press briefing.

The NVSwitch offers five times higher bandwidth than the best PCIe switch, allowing developers to build systems with more GPUs hyperconnected to each other. It will help developers break through previous system limitations and run much larger datasets. It also opens the door to larger, more complex workloads, including model-parallel training of neural networks.
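Splitting one neural network across several GPUs, usually called model parallelism, is the kind of workload that leans on a fast interconnect, because activations must hop from one GPU to the next on every pass. The sketch below is only an illustration: the `Device` class and `pipeline_forward` function are hypothetical stand-ins written in plain Python, not part of any Nvidia library, and each "device" simply holds one layer's weights.

```python
class Device:
    """Hypothetical stand-in for one GPU that holds a single layer."""

    def __init__(self, name, weights):
        self.name = name
        self.weights = weights  # one row per output, one column per input

    def forward(self, activations):
        # Matrix-vector product followed by a ReLU nonlinearity.
        return [
            max(0.0, sum(w * a for w, a in zip(row, activations)))
            for row in self.weights
        ]


def pipeline_forward(devices, x):
    """Run x through each device in turn, counting device-to-device hops."""
    hops = 0
    for index, device in enumerate(devices):
        x = device.forward(x)
        if index < len(devices) - 1:
            hops += 1  # activations would cross the interconnect here
    return x, hops


gpu0 = Device("gpu0", [[1.0, -1.0], [0.5, 0.5]])  # first layer: 2 in, 2 out
gpu1 = Device("gpu1", [[2.0, 1.0]])               # second layer: 2 in, 1 out

output, hops = pipeline_forward([gpu0, gpu1], [3.0, 1.0])
print(output, hops)  # [6.0] 1
```

Every hop counted here would be a transfer over the interconnect on real hardware, so the more devices a model spans, the more the link bandwidth matters.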

NVSwitch extends the innovations made available through Nvidia NVLink, the first high-speed interconnect technology developed by Nvidia. NVSwitch allows system designers to build even more advanced systems that can flexibly connect any topology of NVLink-based GPUs.

The updates to Nvidia’s deep learning and HPC software stack are available at no charge to its developer community, which now totals more than 820,000 registered users, compared with about 480,000 a year ago.

Nvidia’s new DGX-2 system, which is built on the 32GB Tesla V100 and so also has twice as much memory per GPU, reached the two-petaflop milestone by drawing from a wide range of industry-leading technology advances developed by Nvidia at all levels of the computing stack.

DGX-2 is the first system to debut NVSwitch, which enables all 16 GPUs in the system to share a unified memory space. Developers now have the deep learning training power to tackle the largest datasets and most complex deep learning models.
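The figures quoted in this article can be cross-checked with some back-of-the-envelope arithmetic. The snippet below is a rough sketch that uses only numbers stated here (16 GPUs, 32GB per GPU, two petaflops, 300 CPU servers in 15 racks); the even per-GPU split of compute is a simplifying assumption, not an Nvidia specification.

```python
# Figures as quoted in the article.
GPUS = 16
MEMORY_PER_GPU_GB = 32
SYSTEM_PETAFLOPS = 2.0
CPU_SERVERS_REPLACED = 300
RACKS_REPLACED = 15

# Combined GPU memory across the system.
total_memory_gb = GPUS * MEMORY_PER_GPU_GB

# Compute per GPU, assuming the two petaflops divide evenly (an assumption).
teraflops_per_gpu = SYSTEM_PETAFLOPS * 1000 / GPUS

# Density of the CPU deployment the article says one DGX-2 replaces.
servers_per_rack = CPU_SERVERS_REPLACED / RACKS_REPLACED

print(total_memory_gb)    # 512
print(teraflops_per_gpu)  # 125.0
print(servers_per_rack)   # 20.0
```

On these numbers, the 16 GPUs together hold 512GB of GPU memory, and each would contribute roughly 125 teraflops to the system total.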

The DGX-2 supercomputer weighs 350 pounds, and it replaces 300 dual-CPU servers. Huang said the first unit will sell for $399,000 and it will be available in the first quarter.

“It’s just an incredible friend-to-friend price,” Huang said on stage. “The more you buy, the more you save.”