
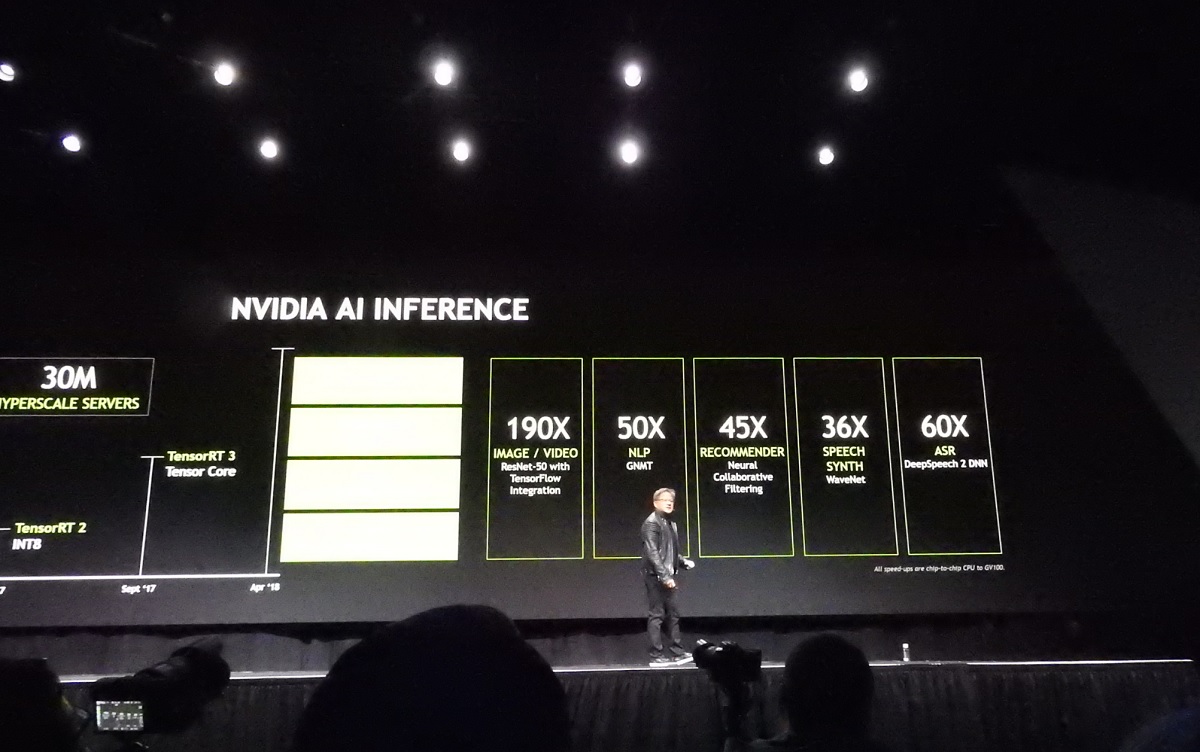

Nvidia announced today that it has launched a number of efforts to speed up deep learning inferencing, the stage in which a trained neural network applies what it has learned to make predictions on new data. Inferencing is critical for artificial intelligence applications. Nvidia says some of its advances can cut data center costs by up to 70 percent, and that its graphics processing units (GPUs) can perform deep learning inferencing up to 190 times faster than central processing units (CPUs).

Over the past five years, programmers have made huge advances in AI through a two-step process. The first step is training a deep learning neural network on existing data, which teaches it, for instance, to recognize an image of a cat. The second step is inferencing: applying what the network has learned to new data it has never seen before, such as spotting a cat in a picture it has never been shown.
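To make the two phases concrete, here is a toy sketch that trains a tiny one-feature logistic regression on labeled examples and then runs inference on inputs it never saw. The model, the data, and the function names `train` and `infer` are hypothetical illustrations chosen for brevity. This is not how Nvidia's software or a real image classifier works, only the same train-then-infer pattern at miniature scale.

```python
# Toy illustration (hypothetical model and data): training on existing
# labeled data, then inference on new inputs the model has never seen.
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(xs, ys, lr=0.5, epochs=2000):
    """Training phase: fit weight w and bias b by gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # prediction error on one training example
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def infer(model, x):
    """Inference phase: apply the learned parameters to a new input."""
    w, b = model
    return sigmoid(w * x + b) >= 0.5


# Hypothetical training data: a single feature labeled 1 ("cat") or 0 ("not cat").
train_x = [0.1, 0.3, 0.4, 0.6, 0.8, 0.9]
train_y = [0, 0, 0, 1, 1, 1]
model = train(train_x, train_y)

# Inference on inputs that were not part of the training data.
print(infer(model, 0.2), infer(model, 0.85))
```

Training is the expensive, iterative loop. Inference is a single cheap evaluation per input, but it runs every time a deployed service answers a request, which is why speeding it up matters for data center costs.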

Nvidia's efforts are aimed at improving inferencing while slashing the cost of deep learning-powered services, said Jensen Huang, CEO of Nvidia, in a keynote speech at Nvidia's GPU Technology Conference (GTC) in San Jose, California. Thanks to these improvements, tech companies are making strides in speech recognition, natural language processing, recommendation systems, and image recognition.

Nvidia announced a new version of its TensorRT inference software, and the integration of TensorRT into Google’s popular TensorFlow 1.7 AI framework. Nvidia also said that Kaldi, the most popular framework for speech recognition, is now optimized for GPUs. Nvidia has also collaborated with Amazon, Facebook, and Microsoft to make it easier to take advantage of GPU acceleration.


"We are experiencing a meteoric rise in GPU accelerated computing," said Ian Buck, vice president and general manager of accelerated computing at Nvidia, at a press event.

Above: Nvidia’s inferencing efforts are paying off.

He said GPU acceleration has moved far beyond what CPUs are capable of, and that Nvidia's Volta chips are 20 times faster than comparable CPU servers at high-performance computing. According to Nvidia, its TensorRT 4 software offers highly accurate network execution at reduced INT8 (8-bit integer) and FP16 (16-bit floating-point) precision, which can cut data center costs by up to 70 percent. The company also said TensorRT 4 delivers up to 190 times faster deep learning inference than CPUs for common applications such as computer vision, neural machine translation, automatic speech recognition, speech synthesis, and recommendation systems.
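Reduced precision saves resources because each INT8 value takes 1 byte and each FP16 value takes 2 bytes, compared with 4 bytes for standard 32-bit floating point. The sketch below shows the basic idea behind INT8 execution under a simple assumption: one "max-abs" scale factor per tensor. This is a generic textbook scheme, not TensorRT's own calibration method, and the weights and activations are made-up numbers. The dot product is computed on small integers and then rescaled, landing close to the full-precision result.

```python
# Minimal sketch of symmetric INT8 quantization, assuming a single max-abs
# scale per tensor. NOT TensorRT's calibration algorithm; illustration only.


def quantize(values, num_bits=8):
    """Map floats to signed integers in [-qmax, qmax] using one scale factor."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a, b):
    """Dot product computed on quantized integers, then rescaled to float."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer accumulation (INT32 on real hardware)
    return acc * sa * sb


# Hypothetical weights and activations for one neuron.
weights = [0.42, -1.37, 0.05, 0.88]
activations = [1.10, 0.25, -0.60, 0.73]

exact = sum(w * x for w, x in zip(weights, activations))
approx = int8_dot(weights, activations)
print(f"FP32: {exact:.4f}  INT8: {approx:.4f}  error: {abs(exact - approx):.4f}")
```

Real inference engines choose scales more carefully (for example, from calibration data) so that accuracy loss stays small across a whole network, not just one dot product.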

“The TensorFlow team is collaborating very closely with Nvidia to bring the best performance possible on Nvidia GPUs to the deep learning community,” said Rajat Monga, engineering director at Google, in a statement. “TensorFlow’s integration with Nvidia TensorRT now delivers up to eight times higher inference throughput (compared to regular GPU execution within a low-latency target) on Nvidia deep learning platforms with Volta Tensor Core technology, enabling the highest performance for GPU inference within TensorFlow.”

TensorRT can also be deployed on Nvidia Drive autonomous vehicle platforms and Nvidia Jetson embedded platforms. Deep neural networks built in any framework can be trained on Nvidia DGX systems in the data center and then deployed to all types of devices, from robots to autonomous vehicles, for real-time inferencing at the edge.

Buck said Kansas City, Missouri, is using deep learning to predict where potholes will appear, allowing the city to dispatch crews ahead of time to patch the road. The city studied six major intersections and analyzed them with a neural network, achieving about 76 percent accuracy in predicting when a pothole would form. The predictions are accurate to within five to 10 feet, which lets crews fix cracks before the holes form.

“Deep learning is truly transforming our world,” Buck said. “They can make potholes a thing of the past.”