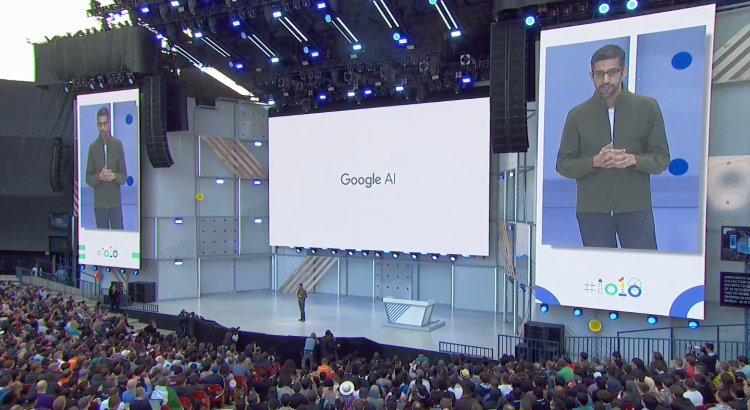

At Google’s I/O developer conference this week there was a lot of news to unpack, but the story that seems to have captured people’s imagination — or fueled their nightmares — is Duplex, the AI that enables Google Assistant to make phone calls for you.

In the demo, Google Assistant went back and forth in conversation with a person for about a minute to make a haircut reservation. In another demonstration, Google Assistant made a reservation at a restaurant.

Duplex is still an experiment, CEO Sundar Pichai explained onstage Tuesday, and one that will continue with user testing this summer.

Despite taking up less than two minutes in more than two hours of product rollouts and upgrades, its debut may mark a noteworthy moment in the history of conversational AI, and perhaps the history of communication, as one of the first publicly known instances of a bot proactively working on a human’s behalf in the world.

The reality beyond the demo

Strangely enough, although Duplex is not yet publicly available and is barely 48 hours old, there already appear to be a number of misconceptions about it.

First, it’s not the phone call that bothers people. It’s the tone of Duplex’s voice.

If Duplex had the same robotic voice we've heard for decades from automated systems handling calls for banks and the like, I don't think its debut would have made as many waves. The reaction stems from the fact that Duplex uses DeepMind's WaveNet AI for speech synthesis, producing natural pitch and intonation. Companies like Baidu and Microsoft are working on similar forms of conversational AI that can mimic voices or add human touches like pauses or saying "hm" or "uh."

Overall, the voice you hear from Duplex sounds more human than not, which places it in an auditory "uncanny valley."

Duplex evokes a response from people for the same reason certain avatars or robots can conjure strangely emotional reactions. I've had a Jibo robot in my house for the past couple of months, and I've been really surprised by the negative feelings it brings out in people, because it rotates its head to look at you when you speak and has a natural-sounding voice.

I'm sure people freaked out in decades past when human operators were replaced by automated touch-tone systems. To ease that fear and add some levity, enter John Legend. WaveNet is also being used to give Google Assistant a new voice that mimics the singer for special moments, like when you say "Ok Google, tell me about my day."

Second, despite how the demo played out, in the real world Duplex may not begin a call with the obligatory “Hello” or “Hi” that would come out of a human’s mouth. Duplex calls will be preceded by a message telling the human on the other end that they’re on the phone with a robot. The system will also allow businesses to opt out of receiving such robo-calls, a person familiar with the matter told VentureBeat.

Third, Duplex may not require user involvement after they ask Google Assistant to schedule an appointment, but it's not a fully autonomous system. When the AI fails to carry on a conversation with the person on the other end of the line while making a reservation, Duplex will hand the call off to a human operator.

Fourth, and most importantly, Duplex is the start of something much bigger than phone calls. It’s the beginning of the idea of an AI assistant acting on a user’s behalf in the world. Someday, through a combination of WaveNet and Duplex, Google Assistant could act on your behalf while mimicking your voice.

The impact of bot stand-ins

This idea might seem strange to the uninitiated, but the makers of automated bots for chat apps like Facebook Messenger or Skype have discussed it for years.

Last year, in a story first reported exclusively by VentureBeat, bot platform Gupshup launched InterBot, a testing ground for teaching bots how to interact and interoperate with other bots. That scenario is worth considering today, since Google may make Duplex available to both consumers and businesses, according to a company blog post explaining how Duplex was built.

At the time, CEO Beerud Sheth said he believed InterBot to be a necessity because he envisions a day when bots will work on our behalf. Things may begin with shopping, but bots will evolve to carry out consumer-to-business and business-to-business interactions.

“When I do comparison [shopping], I check at most two to three websites, but my bot can look at 1,000 merchants and there’s no problem whatsoever because the bot can handle infinite capacity,” Sheth told us in April 2017. “Very soon, instead of me using bots, a shopping bot can go talk to all the merchant bots and get me the best price.”

Bot-to-bot communication was also key to research Facebook released last year that showed bots beginning to create their own rudimentary form of communication. News of Facebook’s bots speaking to each other became one of the most-shared AI-related stories of 2017, and, like Duplex, led to a lot of misperceptions in the public sphere.

OpenAI researchers are also working on bot-to-bot communication, while Amazon and Microsoft are both attempting to make bots that are conversationalists capable of chit-chat with humans for minutes at a time.

The impact on people and the businesses they frequent is important, but the behavioral scientist in me has questions.

Are we ready for bots to speak for us?

Will people who schedule appointments with bots be less likely to show up than those who make the phone call and verbally commit to showing up?

Could less person-to-person communication result in a decline in trust, as some have suggested?

Are we nearing a point when a business that answers its own phone is seen as old-fashioned or quaint?

Duplex-like services for consumers and businesses might make cancellations and rebookings easier, but will automated appointments lead to more or less economic activity?

Will people mind that interactions with their local businesses just got less personal, even if the automated bot they're speaking with sounds like the mom from their local mom-and-pop shop?

The future, coming this summer

The technology behind Duplex has been years or decades in the making, and in the days following its debut the AI has sparked important questions and conversations around the idea of AI acting on your behalf in the world, and the interesting ways this tool might be used when placed in people's hands.

First thing I’m programming for Duplex is to answer all those scammer IRS calls and talk for about an hour. Then call them back hourly pretending to be the IRS, asking them the same questions they asked me. But in Hulk voice. #IO2018

— Patrick Moorhead (@PatrickMoorhead) May 10, 2018

As I mentioned earlier, there was probably a group of people who screamed about the sky falling when human operators were replaced with touch-tone systems. As uncanny as Duplex may feel today, those goalposts can move as Google continues its experiment, or as people gain trust and see the value in the tool.

The day may come when calls with an AI assistant completing tasks for you are considered just as routine as calls with humans, or when people wonder how they ever got shopping done without the help of their AI assistant.

We’ll find out more about the actual performance of Duplex when it arrives this summer.

The day when Duplex actually works could be years down the road, but this is the beginning of automated AI reaching out into the world to work on behalf of consumers and businesses.