Microsoft’s Build developer conference kicked off Monday, and the tech titan spent its time addressing one of the key problems with cloud transformation: How do you deal with the 30 percent of enterprise workloads that can’t move into a public cloud datacenter?

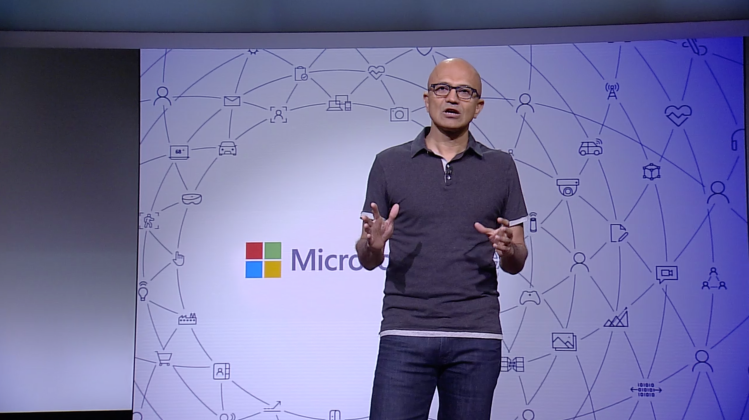

For CEO Satya Nadella, it’s all about the “Intelligent Edge” – bringing applications at the core of an enterprise’s digital transformation from the cloud to local devices, where they can drive business results in real time. Microsoft’s idea of the edge encompasses a wide variety of hardware, including private enterprise datacenters, PCs, mobile devices, and less powerful internet of things (IoT) hardware.

Research and advisory firm ISG (where I work) expects 70 percent of all enterprise workloads will move to a cloud delivery model in time. The other 30 percent must stay in on-premises environments for reasons like security, compliance, latency, or network connectivity. Artificial intelligence (AI) workloads, for example, have the potential to transform the way we work across all manner of industries, but to get truly real-time insights, some of those workloads will need to run at the edge.
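One way to make the four reasons above concrete is to treat them as a simple placement rule: if any of them applies, the workload stays at the edge. The Python sketch below is illustrative only. The `Workload` fields, the 50 ms round-trip figure, and the `on_prem_reasons` and `placement` helpers are hypothetical assumptions and are not part of any Microsoft or ISG tool.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical assumption: a workload that needs answers faster than this
# is treated as too latency-sensitive for a round trip to a public cloud region.
CLOUD_ROUND_TRIP_MS = 50.0


@dataclass
class Workload:
    """Hypothetical, illustrative description of an enterprise workload."""
    name: str
    security_sensitive: bool      # data too sensitive to leave the premises
    regulated_data: bool          # compliance rules require local processing
    max_latency_ms: float         # tightest acceptable response time
    reliable_connectivity: bool   # site has a dependable link to the cloud


def on_prem_reasons(w: Workload) -> list[str]:
    """List which of the four cited reasons apply to this workload."""
    reasons = []
    if w.security_sensitive:
        reasons.append("security")
    if w.regulated_data:
        reasons.append("compliance")
    if w.max_latency_ms < CLOUD_ROUND_TRIP_MS:
        reasons.append("latency")
    if not w.reliable_connectivity:
        reasons.append("network connectivity")
    return reasons


def placement(w: Workload) -> str:
    """Return 'edge' if any reason applies, otherwise 'cloud'."""
    return "edge" if on_prem_reasons(w) else "cloud"


if __name__ == "__main__":
    examples = [
        Workload("payroll", security_sensitive=False, regulated_data=False,
                 max_latency_ms=2000.0, reliable_connectivity=True),
        Workload("defect-detection", security_sensitive=False, regulated_data=False,
                 max_latency_ms=20.0, reliable_connectivity=True),
    ]
    for w in examples:
        print(w.name, placement(w), on_prem_reasons(w))
```

Real placement decisions weigh cost, skills, and vendor fit as well; the point of the sketch is only that any one of the listed constraints is enough to keep a workload out of a public cloud datacenter.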

Microsoft’s new Intelligent Edge offerings don’t mean organizations should hold off on taking advantage of the cloud. But they’re a stark reminder of how CIOs and CTOs must be thoughtful about the way they go about it.

Choosing the home for enterprise applications – whether at an enterprise’s edge or in the cloud – requires a shift in mindset and tooling to take advantage of the technologies available in the market today. Companies that intelligently harness the edge will likely be on better footing than those that don’t.

Bringing AI to the edge

AI is at the core of this strategy. Machine learning is critical to the future of any organization, but driving business value from intelligent predictions requires connecting AI systems to business processes, wherever they are. For example, a manufacturer using machine learning-based defect detection needs low-latency results – without sending data out of the plant.

Microsoft announced an update to its Azure Custom Vision service that could apply to scenarios like this. The service allows companies to build image classifiers trained on their own proprietary images. Developers don’t need to understand the underlying machine learning systems to deploy them, and they can now export the resulting models for execution on the edge in TensorFlow, Core ML, and ONNX formats.

Connecting apps to IoT hardware

Building and deploying applications for these edge environments will involve modern developer tools, like containers and Kubernetes. Microsoft announced the imminent general availability of Azure Kubernetes Service (AKS), which automates much of the work of managing Kubernetes. The popular open source container orchestration system helps unify cloud and edge use cases by abstracting away the underlying hardware that hosts applications. In the future, Microsoft will also integrate AKS with Azure IoT Edge, its service for building and deploying software to less powerful computing hardware like Raspberry Pis.

Innovation remains in the cloud

Even with its focus on the edge, Microsoft is still pumping out plenty of cloud innovation as part of its latest round of announcements. Its new Azure Blockchain Workbench service, for example, is designed to simplify the process of building applications on top of blockchain technology. At this point, that sort of managed service exists only in the cloud, but Microsoft has a path for bringing it to the edge using its Azure Stack system, which fuses hardware and software for companies to run a node that works like Azure within their private environments.

The company also debuted a preview of Project Brainwave for developers who want to run machine learning models at high speed in the cloud. Brainwave uses field-programmable gate arrays (FPGAs) to provide high-speed compute for AI. Right now, the system only works with ResNet-50-based computer vision models, but it’s designed to be useful in a general-purpose context. The preview is currently available only through Azure, but Microsoft is working to make it available on the edge for customers deploying FPGAs in their datacenters.

[A version of this story originally appeared on ISG’s website.]

Blair Hanley Frank is a technology analyst at ISG covering cloud computing, application development modernization, AI, and the modern workplace. He was previously a staff writer at VentureBeat.