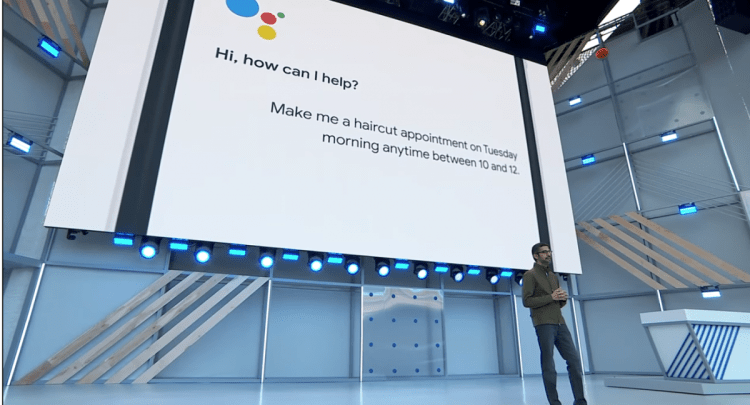

Earlier this week, Google introduced the AI-powered Google Duplex to the world at its Google I/O conference. The company describes this as a “new technology for conducting natural conversations to carry out ‘real world’ tasks over the phone.” The two scenarios Google CEO Sundar Pichai demonstrated during the keynote, making an appointment at a hair salon and booking a restaurant reservation, floored the audience. The natural language breakthrough is significant, and the technical advances are truly impressive. Still, it’s going to be quite some time before personal bots and virtual assistants are routinely negotiating with the world on our behalf.

What Google has achieved with Duplex is remarkable on many fronts, especially in its voice response technology. It is easily the most natural-sounding fully autonomous conversational bot yet.

It takes a lot to build a bot

While the front-end speech recognition and response are remarkable, there’s a lot more that goes into making an effective bot. For one thing, bots today do well so long as the customer stays on the “happy path,” the ideal scenario where the customer says all the right things. That is, question A is well understood and leads naturally and predictably to question B, and so forth. Challenges begin when the conversation leaves this path. For example, while making a restaurant reservation, the customer might ask whether their favorite waitress will be working, or mention that this is a special occasion and ask whether flowers could be brought to the table. Given the technology available today, the bot most likely won’t be able to handle these requests. Currently, it doesn’t appear that Duplex handles these sidetracks any better than other available bot technologies.
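To make the “happy path” idea concrete, here is a minimal sketch of a reservation bot that only knows how to fill three expected pieces of information. Everything in it (the slot names, the keyword patterns, and the `next_action` function) is a hypothetical illustration, not a description of how Duplex or any other product works.

```python
import re

# Hypothetical slots a reservation bot expects, in the order it asks for them.
HAPPY_PATH = ["party_size", "date", "time"]

# Toy keyword patterns standing in for real language understanding (assumption).
PATTERNS = {
    "party_size": re.compile(r"\b(\d+)\s+(?:people|guests)\b"),
    "date": re.compile(r"\b(today|tomorrow|friday|saturday|sunday)\b"),
    "time": re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b"),
}


def next_action(slots, utterance):
    """Fill any slots found in the utterance and return the bot's next step."""
    text = utterance.lower()
    matched = False
    for slot, pattern in PATTERNS.items():
        found = pattern.search(text)
        if found:
            slots[slot] = found.group(1)
            matched = True
    if not matched:
        # Off the happy path: nothing the bot was built to expect.
        return "handoff_to_human"
    for slot in HAPPY_PATH:
        if slot not in slots:
            return f"ask_{slot}"
    return "confirm_booking"


slots = {}
print(next_action(slots, "A table for 4 people, please"))
print(next_action(slots, "Tomorrow at 7pm"))
print(next_action({}, "Will my favorite waitress be working?"))
```

The first two utterances stay on the path and the bot moves toward a booking. The question about the waitress matches nothing the bot expects, so the only thing this sketch can do is hand the call to a person.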

This also raises the question of how easy it will be to train Duplex on new domains. Right now, it appears Duplex’s functionality is quite narrow. Make a reservation, make an appointment: these are relatively easy interactions. Will it get to the point of being able to answer any question or address any need, no matter how complex the interaction may be? Perhaps, but that raises other questions, such as how Google plans to amass the data needed to cover every domain and how easy it will be to train the model for different scenarios.

Perhaps an even more significant challenge in bot execution is integration with back-end legacy systems. This may not be much of an issue when interacting with a small business to make an appointment, but it can be a challenge for larger enterprises. Calling a bank to transfer funds between accounts and then asking whether you qualify for a car loan involves many bank systems. It is quite likely that those systems are not integrated with one another, let alone with the bot. That makes enterprise bots very challenging to develop and deploy.

Another challenge is cross-channel pollination. Google Duplex is designed for the phone, but a comprehensive approach requires web, mobile, text, and social channels as well. Consumers today are touching enterprises across a growing sea of channels. It could be that Duplex will only address voice communication, but if so, it will need a way to integrate with all the other interaction channels.

A long path still lies ahead

While the issues I have raised may sound like criticisms of Duplex, they are not. This is simply to point out that we’re a long way from having personal virtual assistants that can seamlessly do our bidding. When might that be? Probably not in the next three to five years, though perhaps not too far into the future. What Duplex does provide is a major technical next step toward natural human and machine interaction. As such, it is likely a very useful platform for companies to build on for the next generation of bot applications. And why not? When developing its app, Uber chose to leverage Google’s underlying navigation technology rather than inventing its own. It makes no difference to end users, and it freed up capital and other resources that Uber could then spend innovating in the right areas.

This approach makes sense: utilizing different technologies to solve a larger challenge. For example, enterprise data will be exposed to AI systems through APIs and microservices. Feedback loops will tie everything together, enabling each component to learn from the others and improve over time. This will lead to a robust set of bot platform services that move beyond addressing simple matters and evolve into fully transactional virtual assistants. Doing this effectively and economically requires a technology stack that has high degrees of reusability, extensibility, and interoperability across all channels and business processes.
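One way to picture that kind of reusability is a single piece of business logic with thin, channel-specific wrappers around it. The sketch below is purely illustrative: the `check_balance` intent, the stubbed balance, and the three renderers are assumptions made up for this example, and a real system would call a back-end API where the stub sits.

```python
import html
from dataclasses import dataclass


@dataclass
class Reply:
    text: str


def handle_intent(intent, params):
    """Shared business logic, written once for every channel."""
    if intent == "check_balance":
        # Stub value; a real deployment would query a back-end service here.
        balance = params.get("stub_balance", 0.0)
        return Reply(f"Your balance is ${balance:.2f}.")
    return Reply("Sorry, I can't help with that yet.")


# Thin channel adapters: each only decides how the same reply is presented.
def render_voice(reply):
    return {"speak": reply.text}


def render_sms(reply):
    return reply.text[:160]


def render_web(reply):
    return f"<p>{html.escape(reply.text)}</p>"


CHANNELS = {"voice": render_voice, "sms": render_sms, "web": render_web}


def respond(channel, intent, params):
    """Run the shared logic, then format the result for the given channel."""
    return CHANNELS[channel](handle_intent(intent, params))


for channel in CHANNELS:
    print(channel, respond(channel, "check_balance", {"stub_balance": 12.5}))
```

Adding a new channel in this sketch means adding one renderer, while the decision about what to say stays in one place.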

Self-service experiences become conversational, intelligent, and universal by leveraging the same natural language, business rules and decision making, application flow, and back-end integration that feed into all self-service apps. This enables us to design business logic for self-service once and deploy it across all customer touchpoints. Eventually, people will have a consistent, conversational experience regardless of which channel they use. For now, Google Duplex is an exciting development for voice and provides a compelling path forward.

Scott Horn is the chief marketing officer for [24]7.ai, a leader in intent-driven customer experience solutions.