A key component of many autonomous driving systems is lidar (a portmanteau of light and radar), which bounces light — usually in the form of ultraviolet, visible, or near-infrared — off of objects to map them digitally in three dimensions. But while lidar systems are great for identifying potential obstacles, they don’t always spot those obstacles quickly. At a speed of 70 miles per hour, for instance, targeting an object 60 meters away doesn’t do much good if it takes the car 100 meters to come to a stop. Post-processing introduces another delay.
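The arithmetic behind that example is easy to check. The sketch below is a rough stopping-distance model. It assumes a hard-braking deceleration of about 7 m/s² and lumps sensing, post-processing, planning, and brake actuation into one latency figure. Both numbers are illustrative assumptions, not AEye's or any automaker's specifications.

```python
MPH_TO_MPS = 0.44704  # 1 mile per hour in meters per second (exact)


def stopping_distance_m(speed_mph: float, decel_mps2: float, latency_s: float) -> float:
    """Distance covered between detecting an obstacle and coming to a stop.

    latency_s lumps together sensing, post-processing, planning, and brake
    actuation; during that time the car keeps moving at full speed. Braking
    is modeled as constant deceleration, which covers v^2 / (2a).
    """
    v = speed_mph * MPH_TO_MPS
    reaction = v * latency_s           # distance traveled before braking starts
    braking = v * v / (2.0 * decel_mps2)  # distance traveled while braking
    return reaction + braking


if __name__ == "__main__":
    # Assumed values: about 7 m/s^2 of hard braking, three latency budgets.
    for latency in (0.1, 0.5, 1.0):
        d = stopping_distance_m(70, decel_mps2=7.0, latency_s=latency)
        print(f"latency {latency:.1f} s -> {d:.1f} m to stop")
```

With a one-second end-to-end delay, this gives roughly 101 meters at 70 mph, close to the 100-meter figure above. Because the car covers about 31 meters every second at that speed, each 100 milliseconds of processing delay adds roughly 3 meters.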

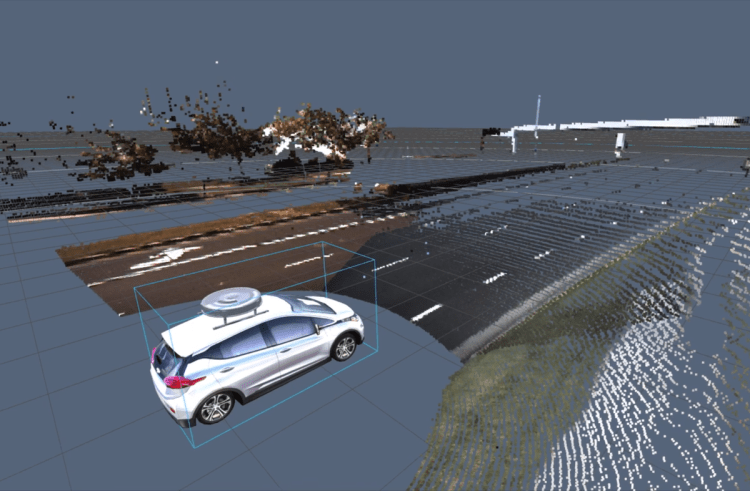

That is why the new sensor from startup AEye — the iDar — is built for speed first and foremost. The intelligent detection and ranging sensor merges 300 meters of 360-degree depth data with camera data to create a dynamic, manipulable point cloud that AEye calls True Color Lidar. When the sensor, which will cost less than $3,000, begins shipping to original equipment manufacturers in July, it will be the first on the market to combine lidar and camera data mechanically at the hardware level, AEye says.

“There is an ongoing argument about whether camera-based vision systems or lidar-based sensor systems are better,” said Luis Dussan, founder and CEO of AEye. “Our answer is that both are required.”

The research has been spearheaded by Dr. Allan Steinhardt, whose impressive career includes a stint at DARPA, where he conducted research on space, terrestrial, and naval-based radar systems, and at Booz Allen Hamilton, where he served as a vice president and chief scientist. Steinhardt also headed radar search at MIT’s Lincoln Laboratory and Cornell University.

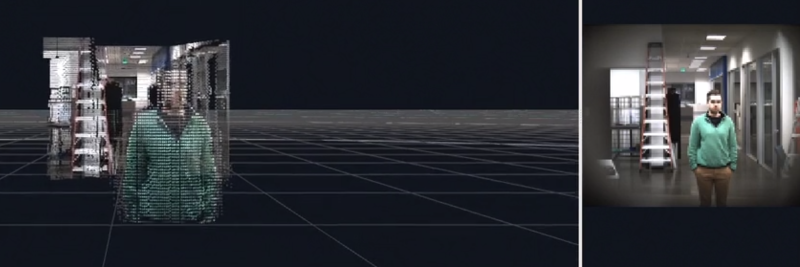

Steinhardt and the rest of the engineering team at the San Francisco-based company call the point cloud data “dynamic vixels,” a cheeky reference to the fact that they combine camera pixels and lidar voxels. (Voxels represent values on a regular grid in three-dimensional space; they’re the elements that make up lidar depth maps.) Dynamic vixels are the first ingredient in AEye’s secret sauce; the other is intelligence. Once the iDar generates the point cloud, AEye’s AI takes over, assessing the surroundings to track targets and flag objects of interest.

Above: A picture-like point cloud capture from within AEye’s HQ.
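The vixel idea can be illustrated with the standard way lidar points are colorized from a camera. Each 3D return is projected through a pinhole camera model, the pixel it lands on is sampled, and both the voxel and the pixel are kept. This is a generic software sketch, not AEye's hardware-level fusion. It assumes the points are already expressed in the camera's coordinate frame (x right, y down, z forward, in meters) and that the intrinsics `fx`, `fy`, `cx`, `cy` are known. The `Vixel` class and `fuse` function are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Vixel:
    """Hypothetical fused sample: where a lidar return sits and what color the camera saw there."""
    voxel: Tuple[int, int, int]  # integer grid cell containing the lidar return
    pixel: Tuple[int, int]       # (u, v) image coordinate the return projects onto
    rgb: RGB                     # camera color sampled at that pixel


def fuse(points: Sequence[Point], image: Sequence[Sequence[RGB]],
         fx: float, fy: float, cx: float, cy: float,
         voxel_size: float) -> List[Vixel]:
    """Pair each lidar point (camera frame, meters) with the pixel it projects to."""
    height, width = len(image), len(image[0])
    vixels = []
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera, so it cannot appear in the image
        # Pinhole projection: u = fx * x / z + cx, v = fy * y / z + cy
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if not (0 <= u < width and 0 <= v < height):
            continue  # outside the camera's field of view
        # Quantize the 3D position onto a regular voxel grid
        voxel = (math.floor(x / voxel_size),
                 math.floor(y / voxel_size),
                 math.floor(z / voxel_size))
        vixels.append(Vixel(voxel, (u, v), tuple(image[v][u])))
    return vixels
```

A production pipeline would also apply the lidar-to-camera extrinsic transform, correct for lens distortion, and handle occlusion; those steps are left out here to keep the projection itself visible.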

“If you look at the lidar-only systems that exist today, they’re discrete systems that operate on a fixed pattern,” AEye chief of staff Blair LaCorte told VentureBeat in a phone interview. “They bring in data, but there’s no feedback loop for intelligence.”

LaCorte, the former CEO of aviation charter company XoJet and an operating partner at private equity firm TPG, said the system builds on decades of research in lidar and imaging systems. “Lidar has been around for 50 years, and the military has done a lot of iterations,” he said. “It has gotten better over the years, but hasn’t been done like this in the commercial area.”

The deluge of data collected by the lidar and camera in the iDar — in addition to feedback loops at the sensor and perception layers and in path planning software — enables the system to perceive things that conventional sensors might miss. It can identify objects with minimal structure, like a bike, brake light, or signage, and distinguish between two objects of the same color. And it is able to perceive contextual changes in the environment, like when a child turns their head before stepping out onto the street.

“We can get three [important] measurements in one [frame]: velocity, acceleration, and deceleration,” LaCorte said. “Systems with a separate radar and camera have to reinterrogate, which adds a delay.”
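LaCorte's point about reinterrogation is easier to see with the conventional approach. A sensor that reports only range has to difference successive frames: three evenly spaced samples yield the range rate and its rate of change by central differences, at the cost of waiting two frame intervals. The following is that textbook calculation, not AEye's method, and the sample trajectory is made up.

```python
from typing import Tuple


def range_kinematics(r0: float, r1: float, r2: float, dt: float) -> Tuple[float, float]:
    """Central-difference estimates at the time of the middle sample.

    r0, r1, r2 are ranges (m) to the same target taken dt seconds apart.
    Returns (range_rate in m/s, range_acceleration in m/s^2). A negative
    range rate means the target is closing; with a closing target, a
    positive acceleration means the approach is slowing down.
    """
    range_rate = (r2 - r0) / (2.0 * dt)
    range_accel = (r2 - 2.0 * r1 + r0) / (dt * dt)
    return range_rate, range_accel


if __name__ == "__main__":
    # Made-up target: r(t) = 50 - 10t + t^2, sampled every 0.1 s
    rate, accel = range_kinematics(50.0, 49.01, 48.04, dt=0.1)
    print(f"range rate {rate:.2f} m/s, range acceleration {accel:.2f} m/s^2")
```

For this trajectory the estimates come out at about -9.8 m/s and +2 m/s², the exact derivatives at t = 0.1 s. The catch is the history: at 10 frames per second, collecting three samples takes 0.2 seconds, which is more than six meters of travel at 70 mph.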

The iDar system also affords flexibility in environment mapping. Through a software API, car manufacturers can customize the thousands of embedded machine learning algorithms in the sensor to adjust the resolution of the point cloud on the fly, depending on which objects are in range of the sensor. They can decrease the resolution of the sky while bumping up the dynamic vixel count in a pedestrian or bicyclist, for example, or lock focus on a building as the car approaches.
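One way to picture adjusting resolution on the fly is a fixed per-frame point budget that software redistributes by priority. The sketch below is purely illustrative: AEye's API is not described here, so the function name, the integer priority weights, and the per-region floor are all assumptions. It splits the budget in proportion to the weights and uses the largest-remainder rule so the counts always add up exactly.

```python
from typing import Dict


def allocate_points(budget: int, weights: Dict[str, int], minimum: int = 0) -> Dict[str, int]:
    """Hypothetical allocator: split a per-frame point budget across regions.

    Every region first gets `minimum` points; the rest is shared in
    proportion to integer priority weights. Leftover points from integer
    division go to the regions with the largest remainders.
    """
    total = sum(weights.values())
    if not weights or total <= 0:
        raise ValueError("need at least one region with positive weight")
    remaining = budget - minimum * len(weights)
    if remaining < 0:
        raise ValueError("budget too small for the per-region minimum")

    counts: Dict[str, int] = {}
    remainders: Dict[str, int] = {}
    for name, w in weights.items():
        share, rem = divmod(remaining * w, total)  # exact integer arithmetic
        counts[name] = minimum + share
        remainders[name] = rem

    # Fewer than len(weights) points are left over; hand them out by remainder.
    leftover = budget - sum(counts.values())
    for name in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        counts[name] += 1
    return counts


if __name__ == "__main__":
    # Low priority for sky, high priority for a pedestrian and a cyclist.
    print(allocate_points(10_000, {"sky": 1, "road": 4, "pedestrian": 10, "cyclist": 5},
                          minimum=100))
```

The per-region floor keeps low-priority areas such as the sky from going completely dark, so the system can still notice something new appearing there.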

Altogether, AEye claims that the iDar system offers 16 times greater coverage and a 10 times faster frame rate than competing sensors while being 8 to 10 times more power-efficient. It is also modular. Though iDar uses a custom-designed solid-state lidar system, its other components are off-the-shelf and “built for cost,” LaCorte said.

“We designed a commercial system with individual sensors,” he said. “It’s plug and play with better lasers.”

AEye, which first demonstrated its lidar technology in 2013, raised $16 million in June 2017 from Kleiner Perkins, Airbus Ventures, Intel Capital, and others. Earlier this year, AEye launched the iDAR Development Partner Program for universities and OEMs.