
There’s more to artificial intelligence than voice assistants and self-driving cars. Researchers at Auburn University, Harvard, Oxford, the University of Minnesota, and the University of Wyoming have developed a machine learning algorithm that can identify, describe, and count wildlife with 96.6 percent accuracy.

The paper, which was written in November 2017, was accepted for publication in the Proceedings of the National Academy of Sciences (PNAS) this week.

“This technology lets us accurately, unobtrusively and inexpensively collect wildlife data, which could help catalyze the transformation of many fields of ecology, wildlife biology, zoology, conservation biology and animal behavior into ‘big data’ sciences,” Jeff Clune (associate professor at the University of Wyoming, senior research manager at Uber’s Artificial Intelligence Labs, and senior author of the paper) said in a statement. “This will dramatically improve our ability to both study and conserve wildlife and precious ecosystems.”

The researchers trained the computer vision algorithm on 3.2 million images from Snapshot Serengeti, a citizen science project on Zooniverse.org in which volunteers label camera-trap photos of elephants, giraffes, gazelles, lions, cheetahs, and other animals in their natural habitats. The project’s corpus was captured by 225 camera traps and labeled by more than 50,000 volunteers.


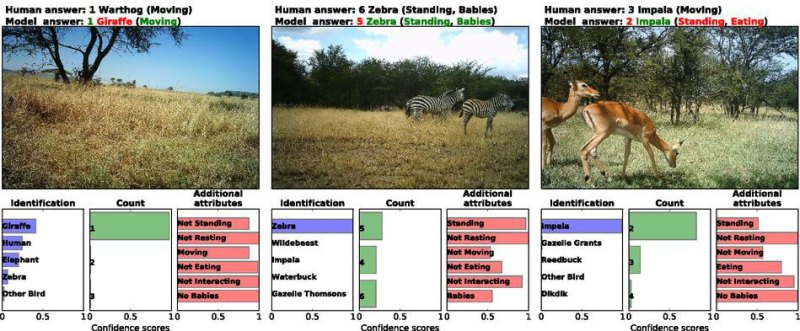

Above: The algorithm analyzes species type and count, as well as behavior.

Here’s how it works: motion-sensing cameras automatically snap photographs, which are fed into a convolutional neural network, a type of deep neural network whose design was loosely inspired by the organization of neurons in the animal visual cortex. The network annotates each photo with text and numbers, describing which species are present, how many individuals of each species appear, and what the animals are doing, such as eating or sleeping.
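
To make the multi-output idea concrete, here is a minimal, untrained sketch in plain Python of a convolutional network with one shared feature extractor and three output heads: a softmax over species, a softmax over count buckets, and independent sigmoids for behaviors (an animal can be eating and moving at once, so behaviors are not mutually exclusive). Everything in it is an illustrative assumption rather than the researchers’ model: the layer sizes, the count buckets, the "moving" label, the random weights, and names such as `ToyWildlifeCNN`, `conv2d`, and `dense` are all hypothetical. The real system used much deeper networks trained on millions of labeled images.

```python
import math
import random

# Hypothetical label sets for illustration only.
SPECIES = ["elephant", "giraffe", "gazelle", "lion", "cheetah"]
COUNT_BINS = ["1", "2", "3-5", "6+"]             # hypothetical count buckets
BEHAVIORS = ["eating", "sleeping", "moving"]     # hypothetical behavior labels


def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation of one channel with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def relu_global_avg_pool(feature_map):
    """Apply ReLU to every activation, then average over all spatial positions."""
    values = [max(0.0, v) for row in feature_map for v in row]
    return sum(values) / len(values)


def dense(features, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, biases)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class ToyWildlifeCNN:
    """Hypothetical shared conv trunk with species, count, and behavior heads."""

    def __init__(self, num_filters=4, kernel_size=3, seed=0):
        rng = random.Random(seed)  # random, untrained weights

        def rand():
            return rng.uniform(-1.0, 1.0)

        def head(n_out):
            return ([[rand() for _ in range(num_filters)] for _ in range(n_out)], [0.0] * n_out)

        self.kernels = [[[rand() for _ in range(kernel_size)] for _ in range(kernel_size)]
                        for _ in range(num_filters)]
        self.species_head = head(len(SPECIES))
        self.count_head = head(len(COUNT_BINS))
        self.behavior_head = head(len(BEHAVIORS))

    def forward(self, image):
        # Shared trunk: one pooled feature per convolutional filter.
        features = [relu_global_avg_pool(conv2d(image, k)) for k in self.kernels]
        species_probs = softmax(dense(features, *self.species_head))
        count_probs = softmax(dense(features, *self.count_head))
        # Behaviors are multi-label, so each gets its own independent sigmoid.
        behavior_probs = [sigmoid(z) for z in dense(features, *self.behavior_head)]
        return {
            "species": dict(zip(SPECIES, species_probs)),
            "count": dict(zip(COUNT_BINS, count_probs)),
            "behaviors": dict(zip(BEHAVIORS, behavior_probs)),
        }


if __name__ == "__main__":
    rng = random.Random(42)
    image = [[rng.random() for _ in range(8)] for _ in range(8)]  # stand-in 8x8 grayscale "photo"
    out = ToyWildlifeCNN().forward(image)
    print(max(out["species"], key=out["species"].get), out["behaviors"])
```

Because the weights are random, the printed predictions are meaningless; the point is the structure. Sharing one feature extractor across all three questions lets a single pass over each photo answer species, count, and behavior together, which is part of why machine labeling can be so much faster than labeling each question separately.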

The system can label a six-month batch of images in just a few hours, a fraction of the two to three months human volunteers need on average. Margaret Kosmala, a team leader at Snapshot Serengeti, says the system could save more than eight years of human labeling effort for each additional 3 million images.

“That is a lot of valuable volunteer time that can be redeployed to help other projects,” she told Phys.org.
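
As a back-of-envelope check on Kosmala’s figure, the snippet below converts "eight years per 3 million images" into seconds of human effort per image. How the estimate was computed is not stated, so two framings are assumed here and labeled in the code: round-the-clock effort, and a single person working 40-hour weeks. Because the quote says "more than" eight years, both results are lower bounds, and the article does not say whether the estimate counts one or several volunteers per image.

```python
SECONDS_PER_HOUR = 3600
images = 3_000_000
years = 8  # quoted as "more than eight years", so results below are lower bounds

# Assumption A: round-the-clock effort (365.25 days x 24 hours per year).
round_the_clock_hours = years * 365.25 * 24
# Assumption B: one person's working time (52 weeks x 40 hours per year).
work_week_hours = years * 52 * 40


def seconds_per_image(hours, n_images):
    """Spread a total number of labeling hours evenly across n_images."""
    return hours * SECONDS_PER_HOUR / n_images


for label, hours in [("24/7", round_the_clock_hours), ("40-hour weeks", work_week_hours)]:
    print(f"{label}: {hours:,.0f} hours, about {seconds_per_image(hours, images):.0f} s per image")
```

This prints `24/7: 70,128 hours, about 84 s per image` and `40-hour weeks: 16,640 hours, about 20 s per image`, so the estimate corresponds to roughly 20 to 84 seconds of human attention per image under these assumptions.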

The work builds on a growing field of study in artificial intelligence: animal detection. In November 2016, scientists at Queensland University used Google’s TensorFlow machine learning framework to train an algorithm that can automatically detect sea cows in ocean images. And in March, startup iNaturalist, a crowdsourced collection of animal and plant photos, launched Seek, an AI-enabled app that can automatically identify species.