
The naked eye can connect a picture of a cat and a psychedelic, tricked-out version of the same picture with relative ease, but that isn't always true of off-the-shelf computer vision APIs. At the Conference on Computer Vision and Pattern Recognition in Salt Lake City, Utah, this week, researchers from UnifyID demonstrated that stylized photos of felines trip up IBM Watson's object recognition tool more than 97.5 percent of the time.

To transform pictures of cats into cubist, Picasso-esque creations, the researchers used a neural network from Magenta, an open source TensorFlow research project built by the Google Brain team that generates songs, images, and drawings.

At first, the computer vision algorithm had no trouble recognizing cats of different breeds, fur lengths, and colors. With the style transfer algorithm's interpolation weight set between 0 (the raw image) and 0.1 (a lightly stylized image), the classifier labeled images as "cats" with high confidence (97 to 99 percent). But as the researchers increased the interpolation weight and the pictures became more and more post-impressionistic, the classifier's guesses shifted from "feline," "cat," and "carnivore" to "cellophane," "moth," and "invertebrate."
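The interpolation weight controls how far the output drifts from the original photo. A minimal sketch, assuming (as in Magenta's arbitrary image stylization model) that the weight linearly blends a style embedding computed from the content image itself with one computed from the style image. The helper name `interpolate_style` and the toy three-dimensional vectors are hypothetical; real embeddings are produced by the network and are much larger.

```python
from typing import List, Sequence


def interpolate_style(content_style: Sequence[float],
                      target_style: Sequence[float],
                      weight: float) -> List[float]:
    """Linearly blend two style embeddings (hypothetical helper).

    weight = 0.0 keeps the content image's own style (the raw image);
    weight = 1.0 applies the target style fully.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    if len(content_style) != len(target_style):
        raise ValueError("embeddings must have the same length")
    return [(1.0 - weight) * c + weight * t
            for c, t in zip(content_style, target_style)]


if __name__ == "__main__":
    # Toy embeddings; real ones come from a style prediction network.
    cat_photo_style = [0.2, -0.1, 0.4]
    cubist_style = [1.5, 0.9, -0.7]
    # Sweep from the raw image (0.0) toward full stylization (1.0),
    # the kind of sweep run before querying the classifier at each step.
    for w in [0.0, 0.1, 0.5, 1.0]:
        blended = interpolate_style(cat_photo_style, cubist_style, w)
        print(w, [round(x, 3) for x in blended])
```

In the real pipeline, each blended embedding would condition the stylization network, and the resulting image would then be sent to the classifier.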

“While the two images are virtually indistinguishable for the naked eye … the labels assigned for the two images by the … classifier turn out to be wildly different,” Vinay Prabhu, principal machine learning scientist at UnifyID, wrote in a Medium post.


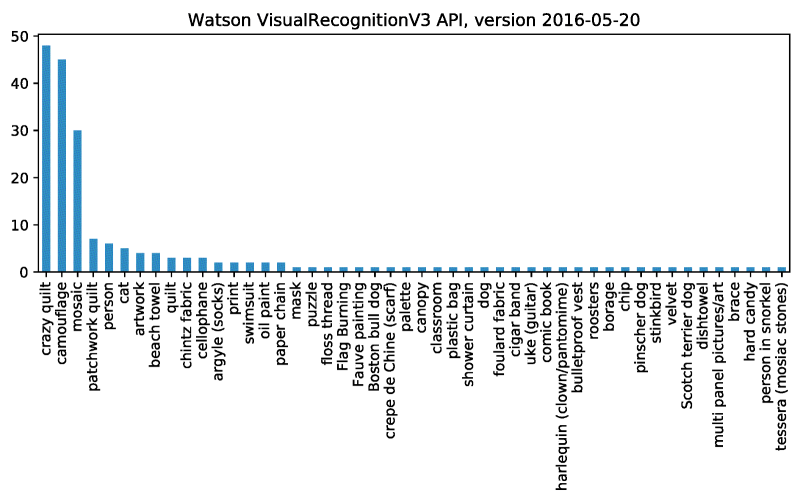

In a second, larger-scale test, the researchers applied style transfer to 200 randomly chosen cat images from the Kaggle Dogs and Cats dataset before running them through the Watson Visual Recognition API. The result? The API overwhelmingly classified the cats as "crazy quilt," "camouflage," "mosaic," and "patchwork."
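How often a tagging API is "fooled" depends on which tags count as a correct answer and how many of the top tags are inspected. A minimal sketch, assuming each API response is a list of (tag, confidence) pairs. The function names, the `top_k` cutoff, and the four sample responses are hypothetical illustrations (the tags are borrowed from this article), not the researchers' data or evaluation code.

```python
from typing import Iterable, List, Set, Tuple

# One tagging-API response as (tag, confidence) pairs (assumed format).
Prediction = List[Tuple[str, float]]


def is_misclassified(pred: Prediction, accepted: Set[str], top_k: int = 3) -> bool:
    """True if none of the top_k highest-confidence tags is an accepted tag."""
    top = sorted(pred, key=lambda p: p[1], reverse=True)[:top_k]
    return not any(tag in accepted for tag, _ in top)


def fool_rate(preds: Iterable[Prediction], accepted: Set[str], top_k: int = 3) -> float:
    """Fraction of images whose top_k tags contain no accepted tag."""
    preds = list(preds)
    if not preds:
        raise ValueError("no predictions to score")
    fooled = sum(is_misclassified(p, accepted, top_k) for p in preds)
    return fooled / len(preds)


# Made-up responses for four stylized cat images (illustration only).
SAMPLE = [
    [("cat", 0.98), ("feline", 0.95), ("carnivore", 0.90)],
    [("crazy quilt", 0.71), ("patchwork", 0.66), ("feline", 0.40)],
    [("mosaic", 0.80), ("camouflage", 0.62), ("moth", 0.35)],
    [("cellophane", 0.55), ("invertebrate", 0.50), ("moth", 0.45)],
]

if __name__ == "__main__":
    strict = {"cat"}
    lenient = {"cat", "feline", "carnivore"}
    print(fool_rate(SAMPLE, strict))            # 0.75
    print(fool_rate(SAMPLE, lenient))           # 0.5
    print(fool_rate(SAMPLE, lenient, top_k=1))  # 0.75
```

The same four responses yield a 75 or 50 percent fool rate depending only on whether "feline" counts as a correct tag, which is the kind of ambiguity about what constitutes a misclassification that Prabhu describes below.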

“The goal is not to proclaim a new black box attack recipe or to berate the commercial API used,” Prabhu said. “Besides showcasing the potential of looking at style transfer as an adversarial example generating technique, we also wanted to draw attention to the inherent fuzziness that surrounds the definition of what constitutes an image class/category or ‘tags’ in the case of such APIs and what entails an image misclassification.”

It's not the first example of what researchers call "adversarial perturbations": changes to objects designed to fool computer vision algorithms into mistaking them for something else. In October 2017, researchers from Kyushu University and MIT demonstrated an algorithm that alters a single pixel in an image, causing an AI system to misclassify objects. And in December 2017, students at MIT tricked Google's Cloud Vision service into identifying images of dogs as "skiers."