
Artificial intelligence that can colorize black and white photos is nothing new, but researchers at Google have taken it a step further. They’ve created a machine learning system that not only adds color to black and white videos, but that can constrain those colors to particular objects, people, and pets in a given frame, like a child coloring within the lines of a flip book.

“Tracking objects in video is a fundamental problem in computer vision, essential to applications such as activity recognition, object interaction, or video stylization,” Carl Vondrick, a lead researcher on the project, wrote in a blog post. “However, teaching a machine to visually track objects is challenging partly because it requires large, labeled tracking datasets for training, which are impractical to annotate at scale.”

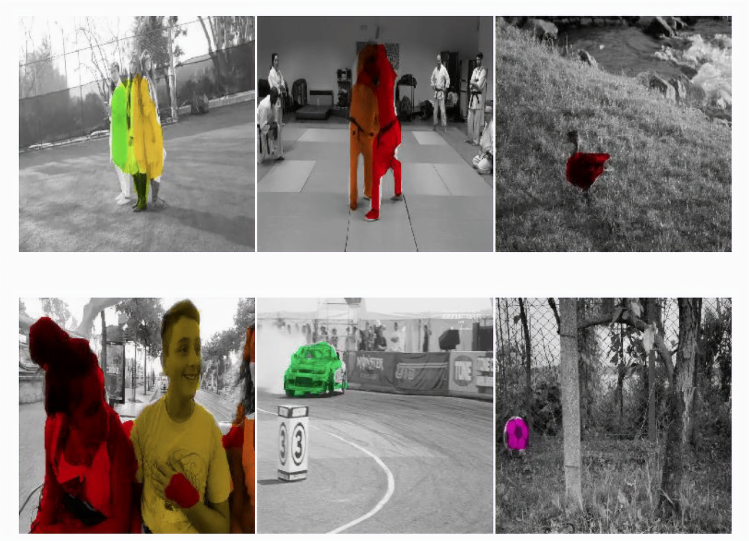

Above: Google’s model learns to track objects through colorization.

In a paper titled “Tracking Emerges by Colorizing Videos,” the scientists describe a convolutional neural network — a kind of neural network that’s architecturally well-suited to object tracking and video stabilization — that learns to follow multiple objects through occlusions (i.e., foreground terrain and other barriers), and that “remain[s] robust” without requiring any labeled training data.

The first step was teaching the algorithm to colorize grayscale movies. The researchers grabbed clips from the Kinetics dataset, a collection of YouTube videos covering “a diverse range of human-focused actions,” and converted every frame except the first to black and white. They then trained the neural network to predict the original colors in the subsequent frames, using that first color frame as a reference, which turned out to be the eureka moment: Because the model had to recolor moving objects and regions, it was effectively forced to learn how to track those objects and regions.

Above: From left to right: the reference frame, input video, and predicted colors.

“Learning to copy colors from the single reference frame requires the model to learn to internally point to the right region in order to copy the right colors,” Vondrick wrote. “This forces the model to learn an explicit mechanism that we can use for tracking.”
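To make the "pointing" idea concrete, here is a minimal NumPy sketch. It assumes the pointer is a softmax over similarities between per-pixel embeddings; the function names, the `temperature` parameter, and the hand-made embeddings are illustrative assumptions, standing in for features that a trained network would produce. It is not the researchers' code.

```python
import numpy as np

def pointer_weights(ref_emb, tgt_emb, temperature=1.0):
    """For each target pixel, a softmax distribution over reference pixels."""
    logits = tgt_emb @ ref_emb.T / temperature       # shape (n_tgt, n_ref)
    logits -= logits.max(axis=1, keepdims=True)      # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)

def copy_colors(ref_emb, ref_colors, tgt_emb, temperature=1.0):
    """Predict target colors as a weighted average of reference colors."""
    return pointer_weights(ref_emb, tgt_emb, temperature) @ ref_colors

# Toy data (assumption): three reference pixels with distinct embeddings.
ref_emb = np.eye(3) * 10.0
ref_colors = np.array([[1.0, 0.0, 0.0],   # red
                       [0.0, 1.0, 0.0],   # green
                       [0.0, 0.0, 1.0]])  # blue

# In the grayscale target frame the same three pixels appear in a new order.
order = [2, 0, 1]
tgt_emb = ref_emb[order]

predicted = copy_colors(ref_emb, ref_colors, tgt_emb)
```

Because each target pixel's embedding matches exactly one reference pixel, nearly all of its pointer weight lands there, and `predicted` recovers the reference colors in the new order. In training, it is the color prediction error that would push a network to produce embeddings with this property.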

The resulting model can keep tabs on any region specified in the first frame of the video and, if given points of reference, can even track human poses. (The researchers used it to model a human joint skeleton.) All the more impressive, the researchers report that it outperforms recent tracking methods based on optical flow.

Above: Google’s model performing skeletal tracking.
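The same pointer that copies colors can, in principle, carry any per-pixel label forward, which is how a region marked in the first frame could be followed into later ones. The sketch below is illustrative only: `propagate_labels`, the toy embeddings, and the 0.5 threshold are assumptions, not details taken from the paper.

```python
import numpy as np

def propagate_labels(ref_emb, ref_labels, tgt_emb, temperature=1.0):
    """Carry per-pixel labels from the reference frame to a target frame."""
    logits = tgt_emb @ ref_emb.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)         # softmax per target pixel
    return weights @ ref_labels

# Toy data (assumption): four reference pixels; the first two belong to the
# object marked in the first frame.
ref_emb = np.array([[5.0, 0.0],
                    [4.0, 1.0],
                    [0.0, 5.0],
                    [1.0, 4.0]])
ref_mask = np.array([1.0, 1.0, 0.0, 0.0])

# In the target frame the object has moved to the last two positions.
tgt_emb = ref_emb[[2, 3, 0, 1]]

soft_mask = propagate_labels(ref_emb, ref_mask, tgt_emb)
tracked = soft_mask > 0.5   # hard mask; the threshold is an arbitrary choice
```

Swapping the mask for per-pixel keypoint scores would follow joints in the same way, which is roughly the idea behind the skeletal tracking described above.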

“Our results show that video colorization provides a signal that can be used for learning to track objects in videos without supervision,” Vondrick wrote. “Moreover, we found that the failures from our system are correlated with failures to colorize the video, which suggests that further improving the video colorization model can advance progress in self-supervised tracking.”