Apple’s first iPhone launched in 2007, decades after the concept of machine learning — a subset of artificial intelligence (AI) that employs mathematical techniques that “teach” software to make sense of complicated datasets — rose to prominence. But it was only recently that the two collided.

Apple introduced Core ML, a framework designed to speed up machine learning tasks, alongside iOS 11 at WWDC in June 2017. The Cupertino company shipped its first chip purpose-built for AI, the A11 Bionic, in last year’s iPhone X. And at the 2018 Worldwide Developers Conference (WWDC), it took the wraps off two new tools: Core ML 2, a new and improved version of Core ML, and Create ML, a GPU-accelerated tool for native AI model training on Macs.

That’s impressive progress in a year, but the fact remains that smartphone AI is in its infancy; there are plenty of challenges to overcome. To get a better sense of them, VentureBeat spoke to iOS developers using Core ML today for language translation, object detection, and style transfer. Here’s what they had to say.

Digital Masterpieces

https://www.youtube.com/watch?v=2F7OFH1H81k

Digital Masterpieces — a spinoff of the Hasso Plattner Institute in Potsdam, Germany, funded in part by German incubator Deep Tech — didn’t set out to adopt Core ML. It was more or less coincidental, chief technology officer Frank Schlegel told VentureBeat.

“We were using techniques developed at the Institute,” he said — specifically for style transfer, a technique of recomposing paintings, pictures, or sketches in the style of other images. “We already had implementations for these neural networks and artificial intelligence techniques [on desktop], so we thought it would be cool to port them to the iPhone.”

These implementations came to form the foundation of BeCasso, an app that, as Schlegel put it, “transforms pictures into artwork.” (It was featured onstage at WWDC this year.)

He described the porting process as “a bit like the Wild West.” In the months following Core ML’s launch, the developer tools were “very much” in development, Schlegel said. “The problem was achieving sufficient speed without compromising accuracy.”

In machine learning, neural networks (computing architectures loosely based on the physiology of the human brain) consist of layers that contain nodes. Nodes are akin to neurons: they’re where data fed into the network is combined with coefficients called weights, which assign significance to the inputs by dampening or amplifying them. Deep neural networks consist of multiple layers and multiple nodes, and greater depth generally (but not always) correlates with greater accuracy.
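To make the idea of nodes and weights concrete, here is a minimal sketch in plain Python. It is not Digital Masterpieces' code, and the weights, biases, layer sizes, and the choice of ReLU as the activation function are all made-up assumptions for illustration.

```python
# Illustrative only: a tiny fully connected network in plain Python.
# All weights, biases, and layer sizes are invented values.

def relu(x):
    """Pass positive values through and clamp negative values to zero."""
    return max(0.0, x)

def dense_layer(inputs, weights, biases):
    """Compute one layer: each node sums its weighted inputs, adds a bias,
    then applies the activation function."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(relu(total))
    return outputs

def forward(inputs, layers):
    """Feed the inputs through each layer in turn. More layers means a deeper model."""
    for weights, biases in layers:
        inputs = dense_layer(inputs, weights, biases)
    return inputs

layers = [
    # Layer 1: two nodes, each with three input weights.
    ([[0.5, -1.0, 2.0], [1.0, 1.0, 0.0]], [0.0, -1.0]),
    # Layer 2: one node with two input weights.
    ([[2.0, 0.5]], [0.25]),
]
result = forward([1.0, 2.0, 0.5], layers)
print(result)  # [1.25]
```

Every extra layer adds another round of multiplications and another set of weights to hold in memory, which is why deeper models ask more of the hardware.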

“For style transfer, models can be trained up to an arbitrary depth,” Schlegel said. “Deeper models can perform more operations, but require more computing power.”

They also require more memory — specifically RAM. In fact, style transfer algorithms have particularly high memory requirements because the input data — images — is encoded as long strings of bits (ones and zeros).

“If you have an input image that’s too large, usually your RAM explodes,” he explained.
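A rough back-of-the-envelope calculation shows why. The sketch below assumes a 12-megapixel photo (4032 x 3024 pixels) stored as 32-bit floating point values, plus a hypothetical early layer that produces 32 full-resolution feature maps. Those numbers are illustrative assumptions, not figures from BeCasso.

```python
# Rough memory estimate for image data inside a neural network.
# The image size, channel counts, and 4 bytes per value are assumptions.

def tensor_bytes(width, height, channels, bytes_per_value=4):
    """Bytes needed to hold one width x height x channels block of values."""
    return width * height * channels * bytes_per_value

def to_mib(num_bytes):
    """Convert bytes to mebibytes (1 MiB = 1,048,576 bytes)."""
    return num_bytes / (1024 * 1024)

# The RGB input image itself (3 channels).
input_bytes = tensor_bytes(4032, 3024, 3)

# Output of a hypothetical layer with 32 feature maps at full resolution.
feature_bytes = tensor_bytes(4032, 3024, 32)

print(f"input:    {to_mib(input_bytes):.1f} MiB")    # input:    139.5 MiB
print(f"features: {to_mib(feature_bytes):.1f} MiB")  # features: 1488.4 MiB
```

Under these assumptions, a single intermediate result approaches 1.5GB, which is most of the memory on a phone with 2GB or 3GB of RAM.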

Digital Masterpieces’ solution was to ship two versions of the same model: one for older devices with less RAM and weaker processors (like the iPhone 6S and 7) and one for newer devices (like the iPhone 8 and X). It worked: Style transfer takes less than a second to complete on the latest-generation iPad.
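The selection logic behind a two-model approach can be sketched in a few lines. This is a hypothetical illustration, not BeCasso's implementation: the file names and the 3GB cutoff are invented, and a real iOS app would do this in Swift, where a property such as `ProcessInfo.processInfo.physicalMemory` could supply the device's RAM figure.

```python
# Hypothetical sketch: pick a model variant based on available device RAM.
# File names and the 3 GiB threshold are made up for illustration.

GIB = 1024 ** 3

MODEL_VARIANTS = [
    # (minimum RAM in bytes, model file), ordered from most to least demanding
    (3 * GIB, "style_transfer_deep.mlmodel"),
    (0, "style_transfer_lite.mlmodel"),
]

def pick_model(device_ram_bytes, variants=MODEL_VARIANTS):
    """Return the first variant whose RAM requirement the device meets."""
    for min_ram, model_file in variants:
        if device_ram_bytes >= min_ram:
            return model_file
    raise ValueError("no model variant fits this device")

print(pick_model(2 * GIB))  # style_transfer_lite.mlmodel
print(pick_model(3 * GIB))  # style_transfer_deep.mlmodel
```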

“We’re quite pleased with the results, all considered,” Schlegel said.

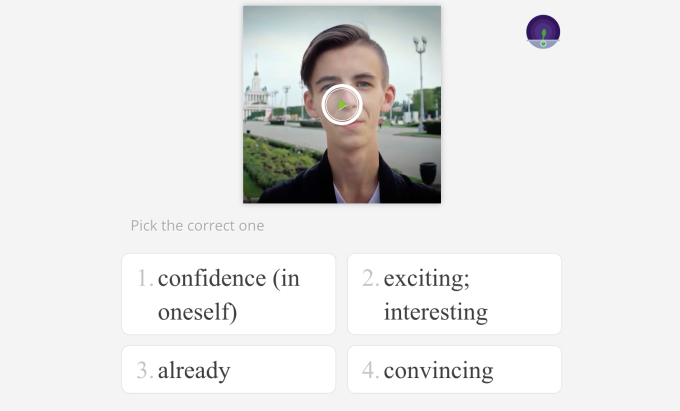

Memrise

Memrise, the team behind the eponymous Memrise app, which offers flashcard-based lesson plans specializing in language learning, credits a hackathon with jump-starting its AI efforts.

“One of our iOS developers managed to get a prototype up and running in a couple of days,” James Salter, engineering manager at Memrise, said. “We sort of went from there.”

The prototype model identified objects and told users how to say them in their language. It wasn’t part of the core Memrise experience, and it could only recognize a few hundred knickknacks (Memrise’s engineers trained it on ImageNet, a database of public images from Stanford University). But it “got Apple’s attention,” Salter said.

So they kept at it. Salter used Keras, an open source neural networks library, to retrain the model on tens of thousands of images from Google. Soon it was able to recognize 20 new things, then 160 new things.

The only problem? As the model’s accuracy increased, so too did its size.

The Memrise team found a solution in Create ML, which not only reduced model training time from 24 hours to 48 minutes, but also shrank the model’s footprint from 90MB to 3MB.

“It’s kind of a narrow use case, so we were able to achieve pretty great results,” Salter said.

Polarr

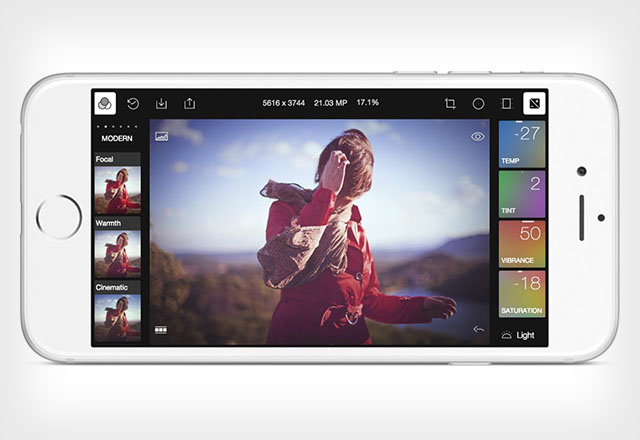

Above: Polarr’s app for iOS.

For Polarr’s team of about 20 people, Core ML’s appeal was its ability to get “big, complex” algorithms running on phones, CEO Borui Wang said.

But it hasn’t been all sunshine and rainbows. Working within the iOS ecosystem requires “a ton of compromises,” Wang said, chiefly because the iPhone’s RAM (2GB in the iPhone 8’s case) doesn’t provide much headroom for complex neural networks.

That’s not to say the Polarr team hasn’t tried. It has pushed iOS devices to the limit in the lab, with models that ramp up processor and graphics chip usage to 100 percent within the first five seconds of run time. But the drawbacks have been severe: the entire operating system becomes unresponsive, the screen and back panel become uncomfortably hot, and the battery drains quickly.

“If you want to extract names from a business card, that’s easy enough, but we’re trying to make machine learning useful for consumers,” he said. “I think there’s a long way to go.”

It’s not all bad news, Wang said. The team has used compression techniques such as quantization to great effect, achieving up to a “five times” reduction in model size, and has gotten object recognition algorithms to run at 20 frames per second.
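For readers unfamiliar with quantization, the following is a minimal sketch of one common variant, linear quantization, which stores each weight as a small integer code instead of a 32-bit float. It is not Polarr's method; the sample weights are invented, and production tools typically apply the idea per layer and with further refinements.

```python
# Minimal sketch of linear (uniform) quantization of model weights.
# The sample weights are invented; real models hold millions of values.

def quantize(weights, bits=8):
    """Map floats onto 2**bits evenly spaced levels between min and max."""
    lo, hi = min(weights), max(weights)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo

def dequantize(codes, scale, lo):
    """Recover approximate float weights from the integer codes."""
    return [lo + c * scale for c in codes]

weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, scale, lo = quantize(weights, bits=8)
restored = dequantize(codes, scale, lo)

# Largest difference between an original weight and its restored value.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Storing 8-bit codes instead of 32-bit floats cuts weight storage 4x.
compression_ratio = 32 / 8
```

The trade-off is precision: each restored weight can differ from the original by up to half a quantization step, which is why aggressive quantization can cost accuracy.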

And Wang is optimistic about the future. He expects that in one to three years, phone hardware will improve to the point where the battery, not the processor or memory, is the hard limit.

“As the chipsets become faster and faster, it becomes a power consumption trade-off,” he said. “Right now, that’s not the case.”

What Core ML needs: More pretrained models and benchmarking tools

In fairness to Apple, Core ML 2 introduces a number of improvements. It’s 30 percent faster thanks to a technique called batch prediction. It supports 16-bit floating point and all levels of quantization down to 1 bit. And it ships with a model converter that works with Caffe, Facebook’s Caffe2, Keras, scikit-learn, XGBoost, LibSVM, and Google’s TensorFlow Lite frameworks.

Schlegel thinks that’s more than enough. “Our use cases are completely covered by the framework so far,” he said.

Salter agreed, but believes that Core ML has more room to grow.

“For the future, it would make sense for Apple to start releasing more pretrained models based on their own big data,” he wrote in an email. “Smaller developers don’t have access to large amounts of data or the resources to do long training runs, so there are some things that are impractical even if you have the expertise. For example, it would be nice if there was a way to build an image classifier that didn’t require you to bring your own dataset — ideally you’d just declare the objects you want it to recognize and it just works — Apple’s cloud builds the model for you, using their data.”

As for Wang, he hopes that future versions of Core ML offer improved benchmarking tools.

“The thing most on top of my mind is to understand GPU/CPU and thermal throttling,” he wrote in an email. “For example, you know [how] a lot of PC games … tell you the FPS and plot a curve for extended gameplay? As a developer, it would be amazing if CoreML could do that for models. You’d load it up, it would tell you when the GPU starts to throttle, and based on that produce a list of performance reports for other devices (iPhone 5, 6, 7, 8, X). If Apple doesn’t do this, I’m sure some startup will be formed just for this sort of thing.”
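The kind of report Wang describes could, in principle, be approximated by timing repeated inferences and watching for a sustained drop in frame rate. The sketch below is a hypothetical illustration, not an existing Core ML feature: the function names, the five-sample baseline, and the 80 percent threshold are all assumptions, and the FPS figures are invented.

```python
# Hypothetical sketch: time repeated inferences and flag a frame-rate drop.
# Function names, baseline size, and threshold are illustrative assumptions.
import time

def measure_fps(run_inference, iterations):
    """Time each call and return a list of frames-per-second samples."""
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        elapsed = time.perf_counter() - start
        samples.append(1.0 / elapsed if elapsed > 0 else float("inf"))
    return samples

def first_throttle_index(fps_samples, baseline_count=5, drop_ratio=0.8):
    """Return the index of the first sample below drop_ratio times the
    average of the first baseline_count samples, or None if there is none."""
    if len(fps_samples) <= baseline_count:
        return None
    baseline = sum(fps_samples[:baseline_count]) / baseline_count
    for i in range(baseline_count, len(fps_samples)):
        if fps_samples[i] < baseline * drop_ratio:
            return i
    return None

# Invented samples: steady at 20 FPS, then falling as the device heats up.
samples = [20, 20, 20, 20, 20, 19, 18, 15, 12]
print(first_throttle_index(samples))  # 7
```

A real tool would also need to record device temperature and processor state, which this sketch does not attempt.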