
Three months after the American Civil Liberties Union revealed that Amazon provided facial recognition technology to local law enforcement, a new report shows that IBM collaborated with the New York City Police Department to develop a system that allowed officials to search for people by skin color, hair color, gender, age, and various facial features.

The Intercept and The Nation Institute’s nonprofit Investigative Fund, citing “confidential corporate documents” and interviews with engineers involved with the project, report that IBM began developing the analytics platform roughly 10 years ago in partnership with New York’s Lower Manhattan Security Initiative counterterrorism center, following an earlier experiment with the city of Chicago. At the time, IBM was a subcontractor to Microsoft subsidiary Vexcel.

Using “thousands” of photographs from roughly 50 cameras provided by the NYPD, IBM’s computer vision system learned from 16,000 data points to identify clothing color and other bodily characteristics, in addition to potential threats like unattended packages, people entering off-limits areas, and cars speeding against the flow of traffic.

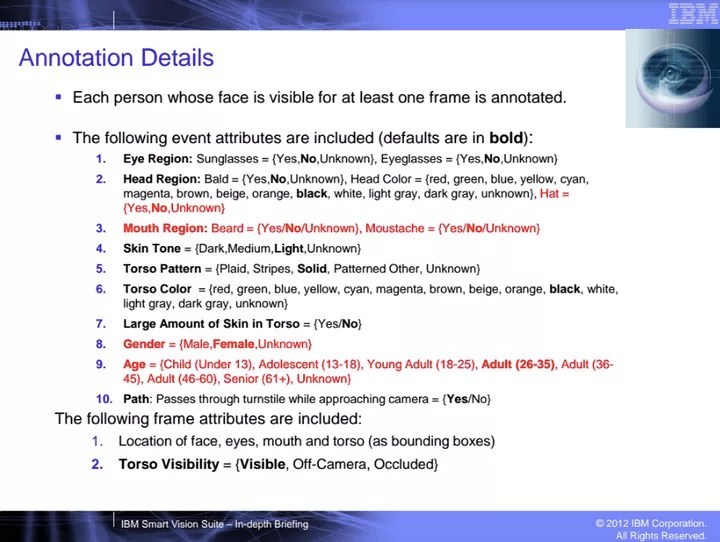

Above: An internal document listing the filters supported by IBM’s system.

NYPD officials were reportedly testing the software as early as 2012, but spokesperson Peter Donald told The Intercept that its filtering tools were used only for “evaluation purposes” and that officers were instructed to exclude skin tone from searches. According to Donald, various “elected leaders and stakeholders” were informed of the department’s efforts, but the details weren’t disclosed publicly.


Donald also confirmed that the department ultimately opted not to deploy the program and that it explicitly rejected an improved version that could search for people based on ethnicity. (The Intercept notes that IBM’s Intelligent Video Analytics 2.0, which was released in 2017, offers a body camera surveillance feature that automatically labels people with tags such as “Asian,” “Black,” and “White.”)

“Video, from time to time, was provided to IBM to ensure that the product they were developing would work in the crowded urban NYC environment and help us protect the city,” the NYPD said. “There is nothing in the NYPD’s agreement with IBM that prohibits sharing data with IBM for system development purposes. Further, all vendors who enter into contractual agreements with the NYPD have the absolute requirement to keep all data furnished by the NYPD confidential during the term of the agreement, after the completion of the agreement, and in the event that the agreement is terminated.”

“IBM is committed to responsibly advancing and using new technologies and is recognized for policies that promote diversity and inclusion,” IBM wrote in a statement provided to VentureBeat. “These values are why we are making publicly available to other companies a dataset of annotations for more than a million images to help solve one of the biggest issues in facial analysis — the lack of diverse data to train AI systems.”

Controversy

Facial recognition systems have recently come under increased scrutiny, and with good reason.

In July, the ACLU demonstrated that Amazon’s Rekognition could, when calibrated a certain way, misidentify 28 sitting members of Congress as criminals. A study in 2012, meanwhile, showed that facial algorithms from vendor Cognitec performed 5 to 10 percent worse on African-Americans than on Caucasians. More recently, it was revealed that a system deployed by London’s Metropolitan Police produces as many as 49 false matches for every hit.

Rick Smith, CEO of Axon, one of the largest suppliers of body cameras in the U.S., was quoted earlier this summer as saying that facial recognition isn’t yet accurate enough for law enforcement applications.

“[They aren’t] where they need to be to be making operational decisions off the facial recognition,” he said. “This is one where we think you don’t want to be premature and end up either where you have technical failures with disastrous outcomes or … there’s some unintended use case where it ends up being unacceptable publicly in terms of long-term use of the technology.”

In a blog post in August, AWS general manager Matt Wood wrote that “there have always been and will always be risks” with new technological capabilities and that it’s up to organizations to “act responsibly.” And in a statement today, IBM pointed out that it’s “one of the first companies anywhere to adopt a set of principles for trust and transparency for new technologies, including AI systems.”

However, critics argue that these efforts don’t go far enough. Among them is Microsoft president Brad Smith, who in July called on lawmakers to investigate face-detecting algorithms and craft policies guiding their usage.

“Imagine a government tracking everywhere you walked over the past month without your permission or knowledge. Imagine a database of everyone who attended a political rally that constitutes the very essence of free speech. Imagine the stores of a shopping mall using facial recognition to share information with each other about each shelf that you browse and product you buy, without asking you first. This has long been the stuff of science fiction and popular movies — like Minority Report, Enemy of the State, and even 1984 — but now it’s on the verge of becoming possible,” Smith wrote.