In congressional hearings over the past year, Facebook executives including CEO Mark Zuckerberg have cited the company’s success in using artificial intelligence and machine learning to take down terrorist-related content as an example of how it hopes to use tech to proactively remove other types of content that violate its policies, like hate speech. In a blog post today, the company shed some light on the new tools it has been using.

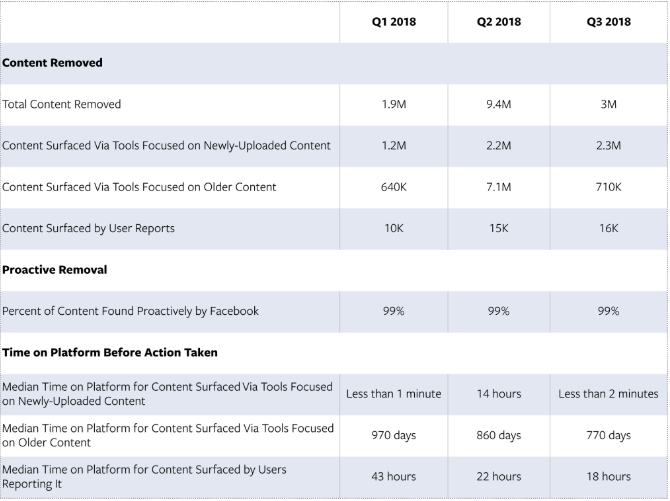

In the post, attributed to Facebook vice president of global policy management Monika Bickert, Facebook said that it took down 9.4 million pieces of terrorist-related content in Q2 2018, and 3 million pieces of content in Q3. That’s compared to 1.9 million pieces of content removed in Q1.

It’s important to note that Facebook is defining terrorist-related content in this report as “pieces of content related to ISIS, Al Qaeda and their affiliates,” and doesn’t address any takedown efforts regarding content from other hate groups. Facebook’s own internal guidelines define a terrorist organization more broadly, describing it as “any non-governmental organization that engages in premeditated acts of violence against persons or property to intimidate a civilian population, government, or international organization in order to achieve a political, religious, or ideological aim.”

The increase in the amount of content Facebook took down from Q1 to Q2 might immediately seem concerning, but Facebook said the jump came because it was taking more action on older content during Q2. For the past three quarters, Facebook said, it has proactively found and removed 99 percent of terrorist-related content, but the amount of content surfaced by user reports continues to rise, from 10,000 pieces in Q1 to 16,000 in Q3. More statistics on how much terrorist-related content Facebook has removed in recent quarters, and how old it was, are below:

More importantly, Facebook also gave some new details on what tools it’s using, and how it decides when to take action. Facebook says it now uses machine learning to give each post a “score” indicating how likely it is that the post signals support for the Islamic State group (aka ISIS), al-Qaida, or other affiliated groups. Facebook’s team of reviewers then prioritizes posts with the highest scores. If the score is high enough, Facebook will sometimes remove the content before human reviewers can look at it.
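To make the score-based workflow described above concrete, here is a minimal Python sketch. It is not Facebook's implementation: the function name `triage`, the 0-to-1 score scale, and the `AUTO_REMOVE_THRESHOLD` value are all assumptions made for illustration, since the post does not disclose how scores are scaled or where the cutoff sits.

```python
# Hypothetical cutoff; Facebook has not published an actual threshold.
AUTO_REMOVE_THRESHOLD = 0.98


def triage(scored_posts, threshold=AUTO_REMOVE_THRESHOLD):
    """Split (post_id, score) pairs into auto-removed IDs and a review order.

    Posts scoring at or above the threshold are removed without review.
    Everything else is queued for human reviewers, highest score first.
    """
    removed = []
    pending = []
    for post_id, score in scored_posts:
        if score >= threshold:
            removed.append(post_id)
        else:
            pending.append((post_id, score))

    # Reviewers see the most likely violations first.
    pending.sort(key=lambda pair: pair[1], reverse=True)
    review_order = [post_id for post_id, _ in pending]
    return removed, review_order


if __name__ == "__main__":
    posts = [("a", 0.50), ("b", 0.99), ("c", 0.90)]
    removed, review_order = triage(posts)
    print("auto-removed:", removed)
    print("review queue:", review_order)
```

The single threshold is the key design choice in a setup like this: lowering it removes more content without human review, at the cost of more mistaken takedowns.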

Facebook also said that it recently started using audio and text-hashing techniques — previously it just used image and video-hashing — to detect terrorist content. It’s also now experimenting with algorithms to identify posts whose text violates its terrorist policies across 19 languages.
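As a rough illustration of what text hashing can look like, the Python sketch below normalizes a piece of text, hashes it, and checks it against a set of hashes of previously removed content. Facebook has not described its hashing method, so everything here is an assumption: the helper names `text_fingerprint` and `matches_known` are hypothetical, and an exact SHA-256 match stands in for whatever technique the company uses, which may well tolerate small edits in a way this sketch does not.

```python
import hashlib
import re


def text_fingerprint(text: str) -> str:
    """Hash text after lowercasing and collapsing whitespace.

    Assumption: exact matching on normalized text. A production system
    would likely use a fuzzier scheme that survives small edits.
    """
    normalized = re.sub(r"\s+", " ", text.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def matches_known(text: str, known_hashes: set) -> bool:
    """Return True if the text's fingerprint is in the known-hash set."""
    return text_fingerprint(text) in known_hashes


if __name__ == "__main__":
    # Placeholder entry standing in for previously removed content.
    known = {text_fingerprint("Example banned slogan")}
    print(matches_known("  example   BANNED slogan ", known))
    print(matches_known("An unrelated post", known))
```

The appeal of hashing is that a platform can recognize re-uploads of known content by comparing short fingerprints, without storing or re-analyzing the original material each time.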

Facebook hasn’t said what other types of content it may soon use these systems to detect, though it acknowledges that “terrorists come in many ideological stripes.” But it’s clear that — if Facebook is using machine learning to determine whether or not a post expresses support for a certain group — those same systems could likely be trained in the future to spot support for other well-known hate groups, such as white nationalists.

It’s also worth noting that even though Facebook is presenting the decrease in the amount of time terrorist-related content spends on the platform as a success, the company itself acknowledges that this is not the best metric.

“Our analysis indicates that time-to-take-action is a less meaningful measure of harm than metrics that focus more explicitly on exposure content actually receives,” Bickert wrote. “Focusing narrowly on the wrong metrics may disincentives [sic] or prevent us from doing our most effective work for the community.”