Watch all the Transform 2020 sessions on-demand here.

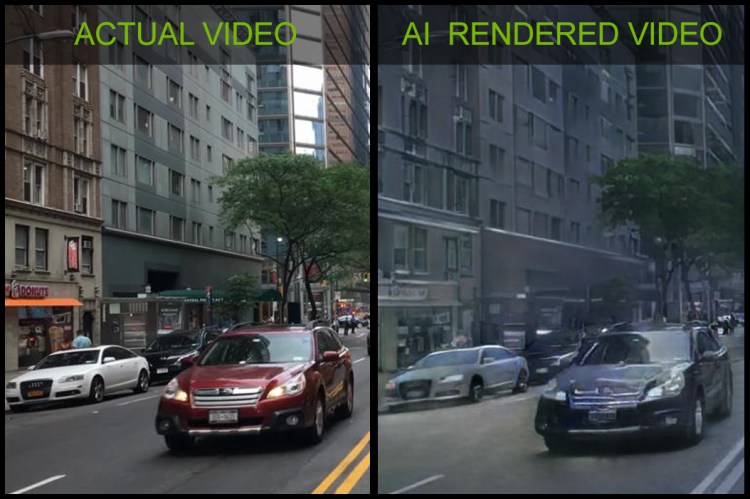

Nvidia researchers have made a generative model that can create virtual environments using real-world videos from sources like YouTube — a way of generating graphics that could have implications for the future of gaming and AI.

“It’s a new kind of rendering technology, where the input is basically just a sketch, a high-level representation of objects and how they are interacting in a virtual environment. Then the model actually takes care of the details, elaborating the textures, and the lighting, and so forth, in order to make a fully rendered image,” Nvidia VP of applied deep learning Bryan Catanzaro told VentureBeat in a phone interview.

The system was trained using Apolloscape video from Baidu’s autonomous driving project. Sketches of where to find things — like trees, buildings, cars, and pedestrians — are input to the model.

The method is detailed in a paper titled “Video-to-Video Synthesis” authored by Catanzaro, other Nvidia researchers, and an MIT CSAIL student.

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

The model could make it possible to quickly generate synthetic environments for training reinforcement learning agents or help people who are playing games feel like they’re in the same location.

“Imagine if you could play as yourself in a video game. And just from a cell phone video that you took, that was enough information to add your character as yourself, as the knight in shining armor that’s going off to do some great deed,” he said. “Nvidia’s been working on graphics for a long time, so we’re really excited to bring graphics into the AI era and use AI to generate graphics, where we learn how things work from videos of the real world, and then can synthesize content based on that knowledge.”

The system is able to take into account things like the location of objects, relationships between objects, and maps to define the edges of objects.

Nvidia will demo videos and share additional details of the technique at the Neural Information Processing Systems conference (NeurIPS, formerly NIPS) being held this week in Montreal.

The team at Nvidia was initially inspired to take this approach by the work of Alexei Efros and other researchers at University of California, Berkeley, and by their creation of the Pix2Pix system. Nvidia created Pix2PixHD in response, working in tandem with AI practitioners from UC Berkeley.

Earlier this year, UC Berkeley researchers also produced models that were able to dance and do flips and 20 other acrobatic moves.

“I think this is the first interactive AI rendering, and we’re really proud of the progress we’ve made. But it is early stage, and I think there’s going to be a lot of progress to make the output higher quality and more general so that we can handle more kinds of scenes. And so I’m really excited about where this is going to go in the future,” Catanzaro said.