
Wilderness trail traversal is a useful skill for rescuers and outdoor enthusiasts alike, but one that’s difficult to teach to robots. It’s not that the mechanical beasts aren’t capable of learning to hike up hillsides while avoiding fallen tree branches — state-of-the-art machine learning algorithms can accomplish that much. The trouble lies in their lack of generalizability: AI-driven robots often struggle in environments they haven’t seen before.

Researchers at the University of Colorado recently proposed a solution in a paper (“Virtual-to-Real-World Transfer Learning for Robots on Wilderness Trails”) published on the preprint server arXiv.org. Their approach leverages a variety of deep learning models (layers of neuron-simulating mathematical functions) to help robots figure out the direction of hiking trails from camera footage.

“Robots hold promise in many scenarios involving outdoor use, such as search-and-rescue, wildlife management, and collecting data to improve environment, climate, and weather forecasting,” the researchers wrote. “However, autonomous navigation of outdoor trails remains a challenging problem … Collecting and curating training datasets may not be feasible or practical in many situations, especially as trail conditions may change due to seasonal weather variations, storms, and natural erosion.”

As the paper’s authors point out, there’s a lot of variability in outdoor trails. They’re defined by any number of materials (like gravel, dirt, and mulch) and they span different biomes such as forests, meadows, and mountains. Moreover, their appearance changes with the season, weather, and time of day, and they’re often partially obscured by dense vegetation.

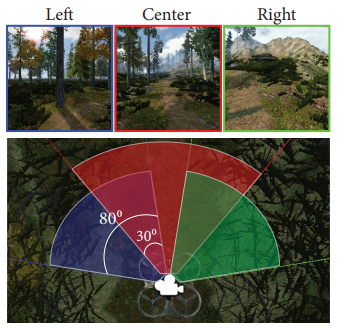

Above: Top-down view of camera configuration for data capture in Unity environment

Collecting and labeling the real-world data necessary to train a robust system would be prohibitively time-consuming, so instead, the researchers sourced synthetic images of virtual outdoor trails. To produce them, they assembled a scene of an alpine mountain with dirt trails in Unity and dotted it with 3D models of trees, rocks, and grass from the Unity Asset Store. They then set loose a virtual robot with three cameras, each with a 400-by-400-pixel resolution and an 80-degree field of view, to collect 20,269 images of the landscape.

The team resized the images to 100 by 100 pixels, with three color channels each, to allow for faster processing and lower memory consumption. They then split the collection into three separate sets: one for training, one for validation, and a third for testing.
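To make that preprocessing step concrete, here is a minimal Python sketch of shrinking 400-by-400 frames to 100 by 100 and dividing a 20,269-image collection into three sets. It is an illustration under stated assumptions, not the researchers' pipeline: the article does not say how the images were resized or what split proportions were used, so the block averaging, the 70/15/15 split, and the helper names `downsample` and `split_indices` are all hypothetical.

```python
import numpy as np


def downsample(image: np.ndarray, factor: int = 4) -> np.ndarray:
    """Shrink an H x W x C image by averaging factor x factor pixel blocks.

    Block averaging is an assumed resizing method; the article only states
    the target resolution.
    """
    h, w, c = image.shape
    if h % factor or w % factor:
        raise ValueError("image sides must be divisible by factor")
    blocks = image.reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))


def split_indices(n: int, train_frac: float = 0.7, val_frac: float = 0.15,
                  seed: int = 0):
    """Shuffle indices 0..n-1 and cut them into train, validation, and test.

    The 70/15/15 proportions are an assumption, not taken from the paper.
    """
    order = np.random.default_rng(seed).permutation(n)
    n_train = int(n * train_frac)
    n_val = int(n * val_frac)
    return (order[:n_train],
            order[n_train:n_train + n_val],
            order[n_train + n_val:])


# Stand-in data: eight random 400 x 400 RGB frames instead of Unity renders.
frames = np.random.default_rng(1).random((8, 400, 400, 3), dtype=np.float32)
small = np.stack([downsample(frame) for frame in frames])

# Index split for a collection the size of the one described above.
train_idx, val_idx, test_idx = split_indices(20269)
print(small.shape, len(train_idx), len(val_idx), len(test_idx))
```

Each resized frame holds one-sixteenth as many values as the original, which is where the savings in memory and processing time come from.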

The training dataset was fed to three different neural networks with distinct architectures: a deep neural network (DNN), a convolutional neural network (CNN), and a recurrent neural network (RNN). In experiments where AI was given complete control of the virtual robot, one model — the RNN — managed to predict the correct trail direction with 95.02 percent accuracy. Importantly, in a separate test where the researchers trained all three AI algorithms on a dataset of 4,000 real-world images, the DNN model scored 58.41 percent accuracy, exceeding the baseline and indicating that virtual-to-real-world transfer learning has the potential to outperform some conventional computer vision methods.
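For readers unfamiliar with how such accuracy figures are computed, the short Python sketch below scores a handful of made-up predictions. It assumes each image is labeled with one of three directions and that a model outputs one score per direction. The article does not spell out the label set, so `DIRECTIONS`, the helper names, and the sample numbers are hypothetical and do not come from the paper.

```python
import numpy as np

# Hypothetical label set; the article does not list the actual classes.
DIRECTIONS = ("left", "straight", "right")


def predict_direction(scores: np.ndarray) -> np.ndarray:
    """Pick the highest-scoring direction index for each image (row)."""
    return np.argmax(scores, axis=1)


def accuracy(predicted: np.ndarray, actual: np.ndarray) -> float:
    """Fraction of images whose predicted direction matches the label."""
    return float(np.mean(predicted == actual))


# Made-up model outputs for four images, one column per direction.
scores = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
])
labels = np.array([0, 1, 2, 1])  # made-up ground-truth direction indices

predicted = predict_direction(scores)
acc = accuracy(predicted, labels)
print([DIRECTIONS[i] for i in predicted], f"{acc:.2%}")
```

In this toy case three of the four predictions match their labels, so the accuracy is 75 percent. The percentages reported above are the same kind of ratio, computed over the researchers' own test images.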

“[W]e observed that the robot was largely successful in navigating trails, including those with tight turns and obstacles such as large boulders,” the researchers wrote. “Moreover, we observed several instances of ‘intelligent’ decision-making; in one trial, the robot briefly navigates off the trail after colliding with a large obstruction, but then navigates back to the trail and resumes its travel.”

That said, none of the three neural networks performed perfectly. The robot occasionally mistook particular terrain regions for trail features, which caused it to veer off course. And the researchers conceded that Unity’s low-quality terrain packs didn’t allow for “sufficient variance” among objects. (They leave techniques that might improve generalizability, like procedurally generating terrain with different conditions, to future work.)

But they contend that their work lays the foundation for methods that might speed up the training of terrain-navigating AI in robots.

“Robot battery life, human fatigue, and safety considerations present major challenges for manual data collection; however, with our approach, these issues may be circumvented as labeled data generation can be performed rapidly and efficiently within a virtual setting,” the researchers wrote. “Robots may then be virtually trained to navigate terrain that is hard to access and/or dangerous, including novel terrains that are currently impossible to access and collect real data from (e.g., Mars) without ever being first exposed to these environments.”