Teaching robots to walk is one thing, but what about helping them learn how to recover from a stumble? That’s the not-so-easy feat researchers at ETH Zurich set out to accomplish with the help of artificial intelligence (AI). In a new paper (“Robust Recovery Controller for a Quadrupedal Robot using Deep Reinforcement Learning”) posted to the preprint server arXiv.org, they describe an AI system that helps robots get up from the ground after they trip and fall.

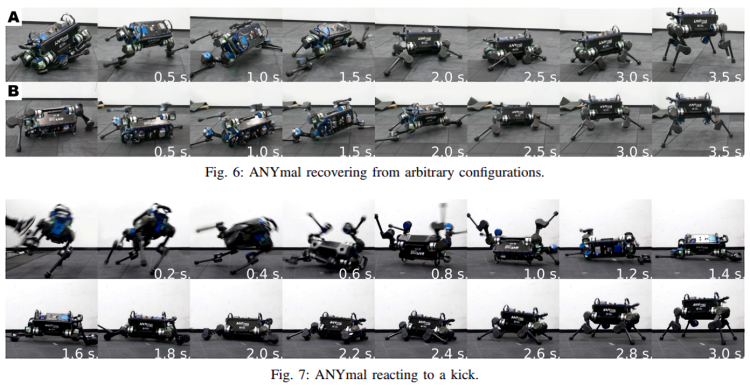

“In case of a fall, animals show the remarkable ability to recover from any posture by pushing against their surroundings and swinging limbs to gain momentum,” the researchers wrote. “Having similar abilities in legged robots would significantly improve their robustness against failure and extend their applicability in harsh environments. We address this topic in the present work by developing a control strategy for the robust recovery maneuver of quadrupedal robots.”

Their model controls the robot’s recovery maneuvers using an AI training technique known as deep reinforcement learning, which uses a system of rewards to drive agents toward certain goals. Four neural network policies (i.e., strategies) were trained individually in a simulated environment and then deployed on ANYmal, a four-legged, dog-sized robot with 12 degrees of freedom.

A controller component selected one of three behaviors (locomotion, standing up, or self-righting) to suit the robot’s situation, drawing on factors like recent observations, commands, previously chosen behavior types, and previous actions. An additional module, a height estimator, gauged the robot’s base height to prevent the robot from drifting off course.
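To make the selector idea concrete, here is a minimal Python sketch of a controller that picks one of the three behaviors and hands control to the matching policy. It is only an illustration: the researchers' selector is a trained neural network, whereas this stand-in uses hand-written rules. The `RobotState` fields, the threshold values, and the placeholder policies are all assumptions made for the example and do not come from the paper.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple

NUM_JOINTS = 12  # ANYmal has 12 degrees of freedom


class Behavior(Enum):
    LOCOMOTION = "locomotion"
    STANDING_UP = "standing_up"
    SELF_RIGHTING = "self_righting"


@dataclass
class RobotState:
    # Hypothetical, heavily simplified observation (assumption for this sketch).
    upright_alignment: float  # 1.0 = base upright, -1.0 = upside down
    base_height: float        # metres, e.g. the output of a height estimator


Policy = Callable[[RobotState], List[float]]


def select_behavior(state: RobotState, previous: Behavior) -> Behavior:
    """Rule-based stand-in for a learned selector; thresholds are made up."""
    if state.upright_alignment < 0.7:
        # Base is tilted or inverted: roll back onto the feet first.
        return Behavior.SELF_RIGHTING
    # Use the previous choice for hysteresis: a robot that is already
    # walking tolerates a lower base before switching to standing up.
    height_needed = 0.30 if previous is Behavior.LOCOMOTION else 0.40
    if state.base_height < height_needed:
        return Behavior.STANDING_UP
    return Behavior.LOCOMOTION


def control_step(
    state: RobotState,
    previous: Behavior,
    policies: Dict[Behavior, Policy],
) -> Tuple[Behavior, List[float]]:
    """Pick a behavior, then let that behavior's policy compute joint targets."""
    behavior = select_behavior(state, previous)
    return behavior, policies[behavior](state)


# Placeholder policies that return zero joint targets; in a real system
# each entry would be a separately trained neural network.
placeholder_policies: Dict[Behavior, Policy] = {
    b: (lambda state: [0.0] * NUM_JOINTS) for b in Behavior
}

if __name__ == "__main__":
    fallen = RobotState(upright_alignment=-0.9, base_height=0.10)
    behavior, action = control_step(fallen, Behavior.LOCOMOTION, placeholder_policies)
    print(behavior.value, len(action))
```

Feeding the previous behavior back into the selector mirrors the article's point that earlier choices inform the next one; in this sketch it only keeps the controller from flickering between standing up and walking near the height threshold.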

The AI-driven policies obviated the need to handcraft recovery rules and sequences, the researchers said, providing an advantage over a number of popular control schemes. Additionally, they proved remarkably robust, handling unpredictable scenarios such as when the robot’s legs became stuck below its base.

The preliminary results are encouraging. Over the course of 50 tests in which the robot started lying down, the self-righting policy managed to recover within five seconds. Moreover, it found success even when the robot’s base was almost upside down and when its legs were stuck beneath it, sometimes by instructing the robot to flip onto its side before attempting an upright pose. In a second experiment in which the researchers kicked ANYmal while it was walking or standing, the policy managed to recover 47 times, failing only when the mechanical quadruped’s joint position was unusually high.

The researchers concede that the system was only trained and tested on flat ground, meaning it would likely fail when faced with steep inclines or rough terrain. But in future work they plan to address that limitation by randomizing the simulation environment.

“The proposed controller exhibits dynamic recovery maneuvers involving multiple ground contacts, and the resulting motions are consistent with the simulated ones,” they wrote.