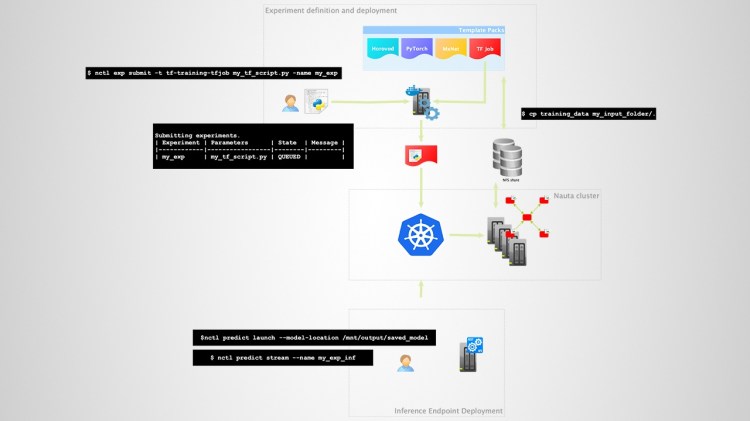

Intel today announced the open source release of Nauta, a platform for deep learning distributed across multiple servers using Kubernetes or Docker.

The platform works with popular machine learning frameworks such as MXNet, TensorFlow, and PyTorch, and runs on clusters of Intel’s Xeon CPUs.

Results of deep learning experiments run with Nauta can be viewed in TensorBoard, from the command line, or in a Nauta web user interface.

“Nauta is an enterprise-grade stack for teams who need to run DL workloads to train models that will be deployed in production. With Nauta, users can define and schedule containerized deep learning experiments using Kubernetes on single or multiple worker nodes, and check the status and results of those experiments to further adjust and run additional experiments, or prepare the trained model for deployment,” according to a blog post announcing the news today.

Nauta is the latest tool released that can use Kubernetes or Docker containers, an approach that lets practitioners choose between deploying AI on on-premises servers or in the cloud.

In November, Google introduced Kubeflow Pipelines, a Kubernetes workflow, while Microsoft introduced Azure Kubernetes Service in public preview last month.

The Linux Foundation’s LF Deep Learning Foundation also introduced the Acumos AI tool for deep learning with Docker or Kubernetes last fall.

Like other distributed deep learning platforms, Nauta also boasts that it can help teams of data scientists and AI practitioners collaborate.

Premade tools, scripts, and model templates, which users are free to customize, are part of the platform, along with batch or streaming inference for testing models. Additional updates for the new platform will be introduced later this year.