What do Montezuma’s Revenge, chess, and shogi have in common? They’re considered to be “grand challenges” in artificial intelligence (AI) research — i.e., games that involve elements of complex, nearly human-level problem-solving. But as AI continues to make gains in these and other benchmarks once considered beyond the reach of machines, scientists at Google Brain (Google’s AI research division) and Google subsidiary DeepMind are turning their attention to a new domain: the card game Hanabi.

In a paper published on the preprint server arXiv.org (“The Hanabi Challenge: A New Frontier for AI Research”), the team of researchers describes an open source framework, the Hanabi Learning Environment, designed to evaluate algorithmic advances and assess the performance of current state-of-the-art techniques. Hanabi is deceptively complex, they explain: Its two-to-five-player setting necessitates not only cooperative gameplay, but also the ability to reason about other players’ intentions and mental states.

“We believe developing novel techniques capable of imbuing artificial agents with [a] theory of mind will not only be crucial for their success in Hanabi, but also in broader collaborative efforts,” they wrote. “Throughout human societies, people engage in a wide range of activities with a diversity of other people … With such complex … interactions playing a pivotal role in human lives, it is essential for artificially intelligent agents to be capable of cooperating effectively with other agents, particularly humans.”

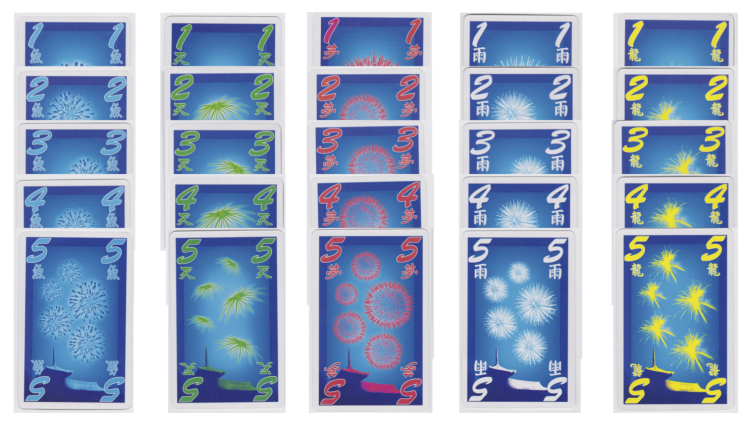

In Hanabi, which won the Spiel des Jahres (an award for board and card games that honors excellence in game design) in 2013, each player is dealt a hand of four or five cards, depending on the number of players. (Players can see each other’s cards, but their own are concealed from them.) Each turn, a player must perform one of three actions: give a hint revealing which cards in another player’s hand share a given suit or number, discard a card, or play a card that’s either a “1” in a suit that hasn’t been played or the next number sequentially in a suit that has been played.
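
The play rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not code from the Hanabi Learning Environment: the `fireworks` dictionary, the `is_playable` function, and the suit labels are all hypothetical names chosen for the example.

```python
# Hypothetical sketch of the play rule; not part of the Hanabi Learning Environment.
# `fireworks` maps each suit to the highest number played so far (0 = nothing played).

def is_playable(fireworks, suit, number):
    """Return True if the card is the next number in sequence for its suit."""
    return number == fireworks.get(suit, 0) + 1


fireworks = {"red": 0, "blue": 2}
print(is_playable(fireworks, "red", 1))   # a "1" starts a suit that has not been played
print(is_playable(fireworks, "blue", 3))  # the next number after the blue 2
print(is_playable(fireworks, "blue", 5))  # skips ahead, so the play would fail
```

Because players cannot see their own cards, they would have to infer the arguments to a check like this from hints and from what their teammates choose to do.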

The goal is to maximize the team’s score, which is the sum of the highest card played in each suit, a task more challenging than it sounds. Giving a hint consumes one of eight available information tokens, which can only be replenished with a discard or the successful play of a “5” of any suit. A failed card play, meanwhile, consumes one of three available fuse tokens.
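
The token and scoring rules above can be made concrete with a small bookkeeping sketch. The class and method names here are hypothetical and are not the Hanabi Learning Environment API. Capping information tokens at eight is an assumption of this sketch, as is leaving out what happens once the fuse tokens run out.

```python
# Hypothetical sketch of the token and scoring rules described above.
# Names are illustrative only; this is not the Hanabi Learning Environment API.

MAX_INFO_TOKENS = 8
MAX_FUSE_TOKENS = 3


class TokenState:
    def __init__(self):
        self.info = MAX_INFO_TOKENS
        self.fuse = MAX_FUSE_TOKENS
        self.fireworks = {}  # suit -> highest number played so far

    def _regain_info(self):
        # Assumption: tokens never exceed the starting supply of eight.
        self.info = min(self.info + 1, MAX_INFO_TOKENS)

    def hint(self):
        """Giving a hint spends one information token."""
        if self.info == 0:
            raise ValueError("no information tokens left")
        self.info -= 1

    def discard(self):
        """Discarding a card replenishes one information token."""
        self._regain_info()

    def play(self, suit, number):
        """A successful play extends the suit; a failed play burns a fuse token."""
        if number == self.fireworks.get(suit, 0) + 1:
            self.fireworks[suit] = number
            if number == 5:
                self._regain_info()  # completing a suit also returns a token
            return True
        self.fuse -= 1
        return False

    def score(self):
        """Sum of the highest card played in each suit."""
        return sum(self.fireworks.values())


state = TokenState()
state.hint()          # spends an information token
state.play("red", 1)  # succeeds: red suit now at 1
state.play("red", 3)  # fails: red 2 has not been played yet
state.discard()       # regains the information token
print(state.info, state.fuse, state.score())
```

The sketch shows why hints are scarce: each one has to be paid for later with a discard or a completed suit.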

“[P]erforming a hint action consumes the limited resource of information tokens, making it impossible to fully resolve each player’s uncertainty about the cards they hold based on this grounded information alone,” the paper’s authors point out. “To overcome this limitation, successful play involves communicating extra information implicitly through the choice of actions themselves, which are observable by all players … This challenge connects research from several communities, including reinforcement learning, game theory, and emergent communication.”

Toward that end, alongside the paper, the researchers made available the Hanabi Learning Environment, a Python and C++ interface that’s architecturally comparable to OpenAI Gym. It includes two challenges: self-play learning, which focuses on finding a joint policy that achieves a high score when an agent plays with copies of itself, and ad-hoc teams, which aims to train and test AI players capable of playing with unfamiliar AI or human partners.
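
The difference between the two challenges comes down to who fills the other seats at the table. The following is a rough sketch of that idea only: the function names and the string placeholders standing in for agents are hypothetical, and nothing here uses the Hanabi Learning Environment's actual interface.

```python
import random

# Hypothetical illustration of how teams are formed in each challenge.
# Agents are represented by plain strings; no real environment is involved.

def self_play_team(agent, num_players):
    """Self-play: every seat is filled by a copy of the same policy."""
    return [agent] * num_players


def ad_hoc_team(agent, partner_pool, num_players, rng):
    """Ad-hoc: the agent is seated with partners drawn from a separate pool."""
    partners = [rng.choice(partner_pool) for _ in range(num_players - 1)]
    return [agent] + partners


rng = random.Random(0)  # fixed seed so the example is repeatable
print(self_play_team("learner", 3))
print(ad_hoc_team("learner", ["rule_bot", "other_learner"], 3, rng))
```

In self-play an agent can rely on its partners following exactly the conventions it learned, whereas in an ad-hoc team it cannot.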

In experiments involving state-of-the-art reinforcement learning algorithms with deep neural networks (that is, reward-driven algorithms of the kind that have achieved superhuman performance in games like chess), the team found that most didn’t come close to beating hand-coded bots in self-play, and that they failed to collaborate in the ad-hoc team challenge. This, the team says, indicates there’s room for improvement.

“[N]ovel techniques will be required to close [the] gap,” the paper’s authors wrote. “Theory of mind appears to play an important role in Hanabi, [and our environment] hopefully [advances] our understanding of how our agents can develop something like [it].”