
More than 8 million driverless cars will hit public roads by 2025, according to research firm ABI, and they'll all have something in common: sensors, powerful PCs, and machine learning algorithms. Nvidia, Intel's Mobileye, Baidu, and others offer tools that visualize what those cars are seeing (and have seen) in the real world and how they're making (or have made) decisions. But these tools aren't perfect: they're rigidly desktop-based and nonstandardized, and the large files they ingest aren't easy to share.

Uber thinks it can do better.

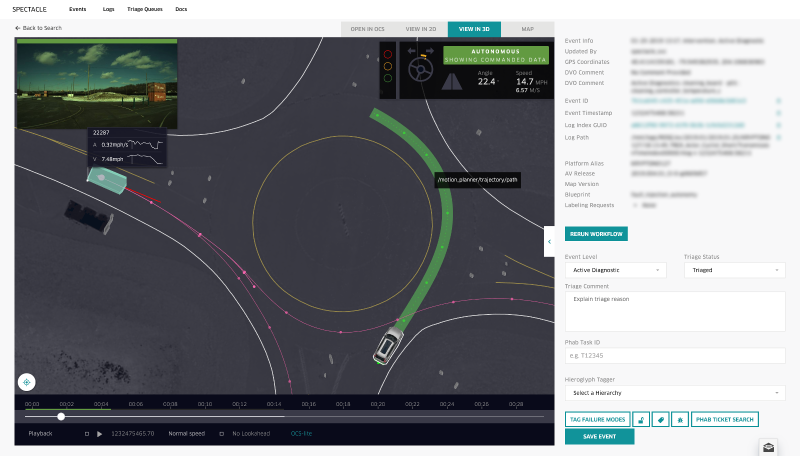

The ride-sharing company today made an open source contribution to the budding autonomous vehicle (AV) developer tool ecosystem: Autonomous Visualization System (AVS), a standalone web-based technology it describes as "a new way" to understand and share autonomous systems data. It's the same suite used by Advanced Technologies Group (ATG), the Uber division charged with developing its autonomous car platform, and several companies, including Voyage and Applied Intuition, have already pledged to use it.

“With AVS abstracting visualization, developers can focus on core autonomy capabilities for drive systems, remote assistance, mapping, and simulation,” Uber wrote in a blog post. “AVS frees developers from having to build custom visualization software for their autonomous vehicles.”


The AVS team used modularity as a guiding principle, Uber says, building the system in "layers" so that components in the autonomous stack can be evolved or tailored to a context independently, without requiring system-wide changes. This has let Uber's internal teams use it to view logs and maintain maps, for instance, while keeping it extensible enough for industries like drones, robotics, trucking, and fleet management.

AVS comprises two core pillars: XVIZ, a specification for producing, managing, and describing the data autonomous systems generate, and streetscape.gl, a toolkit for building web apps that consume data in the XVIZ protocol.

According to Uber, an XVIZ stream is a series of discrete updates, each occurring at a specific time and carrying primitives: objects that describe things like camera images, lidar point clouds, trajectories, and vehicle speed over time. The pipeline includes a server-side encoder and builder and, on the client side, a decoder, data buffer, and synchronizer. The schema-structured view and display system works across clients and lets engineers explore and seek through states and tie separate stream updates together into a single object.
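To make that flow concrete, here is a minimal sketch of the encoder, decoder, buffer, and synchronizer roles described above. It is not the XVIZ API: the `StreamUpdate`, `encode`, `decode`, and `StreamBuffer` names are hypothetical, JSON is assumed as the wire format, and the stream names are illustrative. The synchronizer merges the latest value of each stream at or before a given time into one snapshot, which is what lets a viewer seek to any point in a log.

```python
# Hypothetical sketch of an XVIZ-style stream pipeline. None of these names
# come from XVIZ or streetscape.gl; JSON as the wire format is an assumption.
from __future__ import annotations

import bisect
import json
from dataclasses import dataclass, field
from typing import Any


@dataclass
class StreamUpdate:
    """One discrete update: a timestamp plus primitives keyed by stream name."""
    timestamp: float
    primitives: dict[str, Any]


def encode(update: StreamUpdate) -> str:
    """Server side: serialize an update into a message."""
    return json.dumps({"timestamp": update.timestamp, "primitives": update.primitives})


def decode(message: str) -> StreamUpdate:
    """Client side: parse a message back into an update."""
    raw = json.loads(message)
    return StreamUpdate(raw["timestamp"], raw["primitives"])


@dataclass
class StreamBuffer:
    """Client-side buffer that keeps updates sorted by timestamp."""
    updates: list[StreamUpdate] = field(default_factory=list)

    def add(self, update: StreamUpdate) -> None:
        # Messages may arrive out of order, so insert at the sorted position.
        times = [u.timestamp for u in self.updates]
        self.updates.insert(bisect.bisect_right(times, update.timestamp), update)

    def state_at(self, t: float) -> dict[str, Any]:
        """Synchronizer: fold every update at or before t into one snapshot,
        keeping the latest value seen for each stream."""
        state: dict[str, Any] = {}
        for update in self.updates:
            if update.timestamp > t:
                break
            state.update(update.primitives)
        return state


# Usage: three updates arrive out of order from the "server".
buffer = StreamBuffer()
for msg in [
    encode(StreamUpdate(0.0, {"/vehicle/speed": 10.0, "/lidar/points": [[1, 2, 0]]})),
    encode(StreamUpdate(0.2, {"/vehicle/speed": 10.4})),
    encode(StreamUpdate(0.1, {"/lidar/points": [[1, 2, 0], [3, 1, 0]]})),
]:
    buffer.add(decode(msg))

print(buffer.state_at(0.15))
# {'/vehicle/speed': 10.0, '/lidar/points': [[1, 2, 0], [3, 1, 0]]}
```

Seeking to 0.15 s combines the speed reported at 0.0 s with the lidar points reported at 0.1 s, so streams that update at different rates still produce a single coherent frame.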

The AVS UI bundles graphical panels with the data they display. Objects can be styled individually or assigned a style class, while streams can be organized with hierarchical names and described in a metadata section that lists their types, relative transforms, and more.
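The sketch below shows how per-object styles, style classes, and hierarchical stream names might fit together. The metadata layout, stream names, and style keys are assumptions for illustration, not the actual XVIZ metadata schema, and `resolve_style` and `streams_under` are hypothetical helpers. Styles are layered from the stream default, to the object's class, to the object's own inline overrides.

```python
# Hypothetical sketch of style classes and hierarchical stream names.
# The metadata layout and keys are illustrative, not the XVIZ schema.
from __future__ import annotations

from typing import Any

# Metadata section: each stream lists its type, a relative transform, and styles.
METADATA: dict[str, dict[str, Any]] = {
    "/object/shape": {
        "type": "polygon",
        "transform": "/vehicle/pose",  # assumed: coordinates relative to the vehicle
        "style": {"fill_color": "gray", "opacity": 0.5},  # stream-wide default
        "style_classes": {"pedestrian": {"fill_color": "orange"}},
    },
    "/object/trajectory": {
        "type": "polyline",
        "transform": "/vehicle/pose",
        "style": {"stroke_width": 1},
        "style_classes": {},
    },
    "/lidar/points": {
        "type": "point",
        "transform": "/vehicle/pose",
        "style": {"radius": 2},
        "style_classes": {},
    },
}


def resolve_style(stream: str, obj: dict[str, Any]) -> dict[str, Any]:
    """Layer styles: stream default, then the object's classes, then inline overrides."""
    meta = METADATA[stream]
    style = dict(meta["style"])
    for cls in obj.get("classes", []):
        style.update(meta["style_classes"].get(cls, {}))
    style.update(obj.get("style", {}))
    return style


def streams_under(prefix: str) -> list[str]:
    """Hierarchical names let a UI group streams, e.g. everything under /object."""
    root = prefix.rstrip("/") + "/"
    return sorted(s for s in METADATA if s.startswith(root))


# Usage: a pedestrian gets its class color plus an inline opacity override.
ped = {"classes": ["pedestrian"], "style": {"opacity": 0.9}}
print(resolve_style("/object/shape", ped))
# {'fill_color': 'orange', 'opacity': 0.9}
print(streams_under("/object"))
# ['/object/shape', '/object/trajectory']
```

Keeping styles in metadata rather than in every update means a stream's look can change without re-encoding its data, which matches the separation of data and presentation the paragraph describes.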

As for the streetscape.gl piece, it’s built on top of Vis.gl, Uber’s WebGL-powered collection of open source visualization frameworks, and it features “highly styleable” components for translating XVIZ data streams to 3D viewports, charts, tables, videos, and more. Additionally, it handles things like time synchronization across data streams, cameras, dynamic styling, and interaction with 3D objects, and it supports real-time playback and scenes with “hundreds of thousands” of geometries.

Uber says it plans to enhance AVS with “more data sources [and] specifications … performance optimizations, and richer features” in the future.

“[W]e created AVS to meet the needs of everyone in the autonomy ecosystem, including engineers, vehicle operators, analysts, and specialist developers. Autonomous engineers can describe their systems with XVIZ easily and then test and visualize their expectations with limited overhead,” Uber wrote. “Specialist developers can quickly build data source-agnostic applications with strong performance characteristics and simplified integration using streetscape.gl. Lastly, operators can view the data in standard visual formats, including videos, across multiple applications, leading to easier collaboration, knowledge understanding, deeper analysis, and overall trust in the data quality.”