Watch all the Transform 2020 sessions on-demand here.

One of the frustrations with machine learning, particularly in the area of image recognition, is that neural nets sometimes get things completely, laughably, inexplicably wrong. We question how an AI could be fed an image of an unmistakably dog-like dog and ascertain that it’s a pineapple. But new research from Johns Hopkins University, published in Nature Communications, demonstrates that there is a logic to these errors — one humans can intuitively understand, if pressed.

Researchers Zhenglong Zhou and Chaz Firestone conducted a series of experiments in which they presented human participants with adversarial image sets — images that contain tiny errors designed to deceive a machine learning model — and asked them to predict the labels certain Convolutional Neural Networks (CNNs) had applied to the images. In some cases, the CNNs had overcome the adversarial images and correctly applied labels, but in other instances they had whiffed. The researchers wanted to understand if humans would apply the same labels to each image, and — in the event the machines were tricked — surmise which incorrect labels had been applied. What the researchers found is that humans are quite good at intuiting a machine’s logic, even when that logic returns a seemingly ridiculous error.

“People have a good intuition for when a machine will misbehave,” Firestone told VentureBeat in a phone interview. “Machines that classify images are now very good — in fact, they’re better than you and me, on average. But they make mistakes that we usually don’t make.” He said that when he encountered some of those apparently silly errors himself, he noticed there actually seemed to be a logic behind it. “I thought, ‘Wait a second, is it really that mysterious?'” After looking at an image a CNN had misclassified as an armadillo, let’s say, he could understand why an AI may perceive it as “armadillo-ish.”

Looking closer at armadillo-ishness

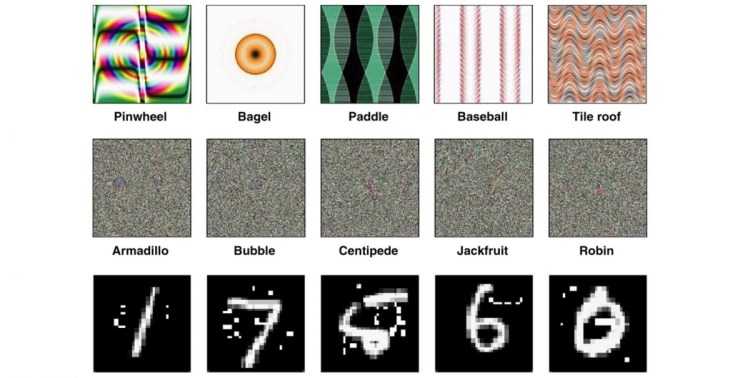

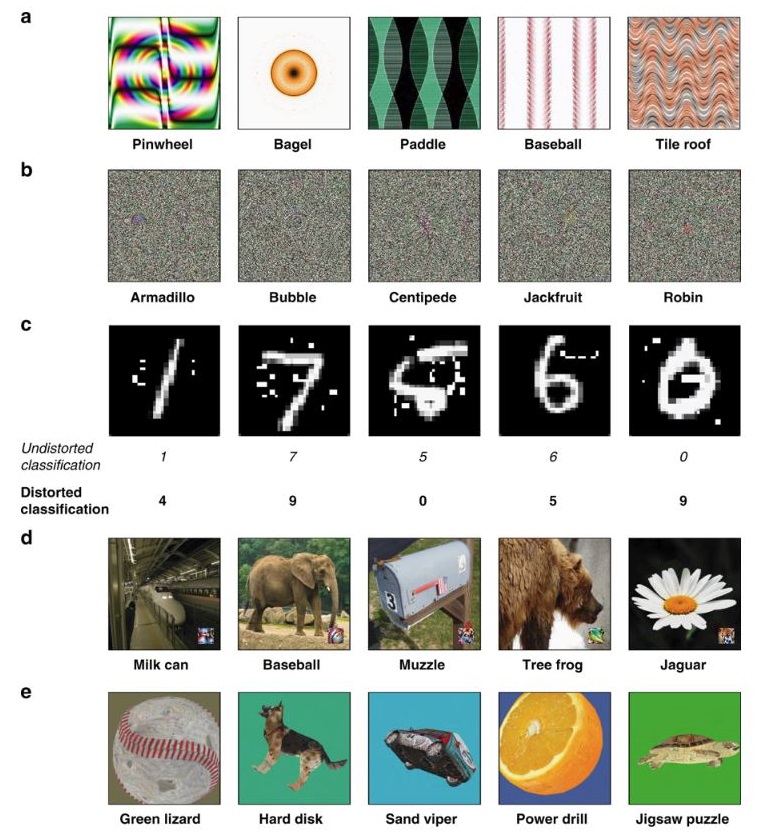

With this in mind, Zhou and Firestone designed a series of experiments to probe further. They collected 48 images that were “produced by several prominent adversarial attacks,” according to the paper, which is to say that although the sample size of images is relatively small, the images were selected for their ability to defeat CNNs. In the various experiments, the researchers ran the image set against CNNs like AlexNet and Inception V3.

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

Around 1,800 people participated in the study, recruited through Amazon’s Mechanical Turk to ensure strong diversity among the participants, compared to a sample consisting entirely of university students, for instance. Each of the eight experiments in the study contained 200 individuals, save for one that had 400. This means the results of each experiment are from completely different sets of test subjects.

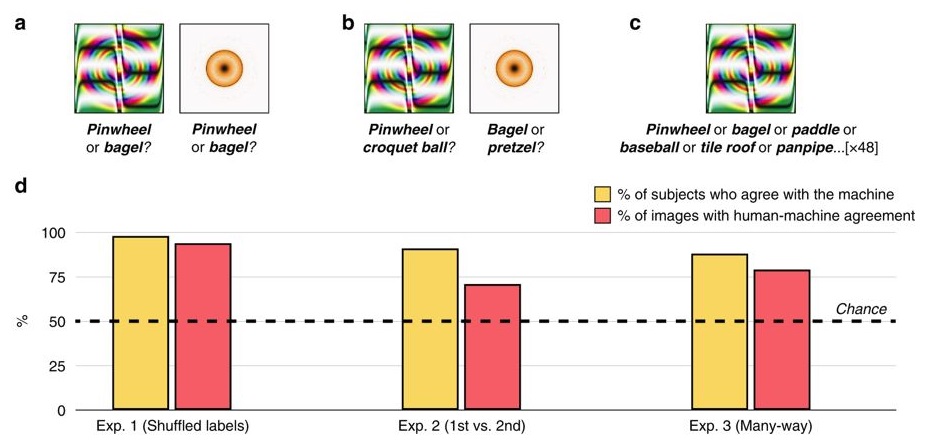

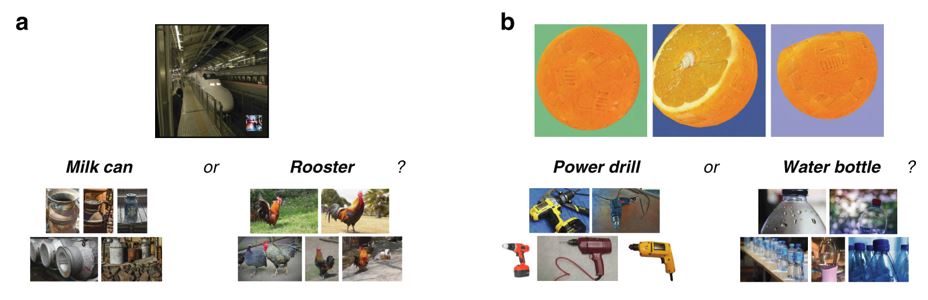

In the most basic of the tests, the humans were presented with one image (48 total), and two labels and asked to choose which label they thought a machine had applied to the image. One of the two was the label the machine had picked for that image, and the other was a label pulled randomly from one of the 47 other images in the set.

In the more complex second experiment, humans were asked to rank two labels for each image. The idea, the researchers wrote, was to neutralize some of the more obvious characteristics that would make the selection too easy. For example, a round, brownish object with a hole in the middle could be a bagel, or it could be a pretzel. The researchers describe these as “superficial commonalities.” They took AlexNet’s first and second label choices for each image and presented them to the humans, who then had to decide which label was the CNN’s first choice and which the second.

But the first two experiments are fairly simple, and having only two choices makes the whole thing pretty easy. That’s why the third experiment threw a proverbial wrench in the works by offering up all 48 possible labels for each image. This is called “many-way classification.” The authors of the study acknowledged that although it’s not an especially strong simulation of how CNNs work — ImageNet has 1,000 labels, which a human can’t feasibly sort through in one sitting — they at least “stepped closer to these conditions by displaying the labels of all 48 images at once.”

In a related test, the researchers did not ask participants to guess what label the machine had given each image, but instead asked what label they would assign to each one. They still had the 48 labels to choose from, but within those constraints, they were asked to select the one that most accurately reflected each image.

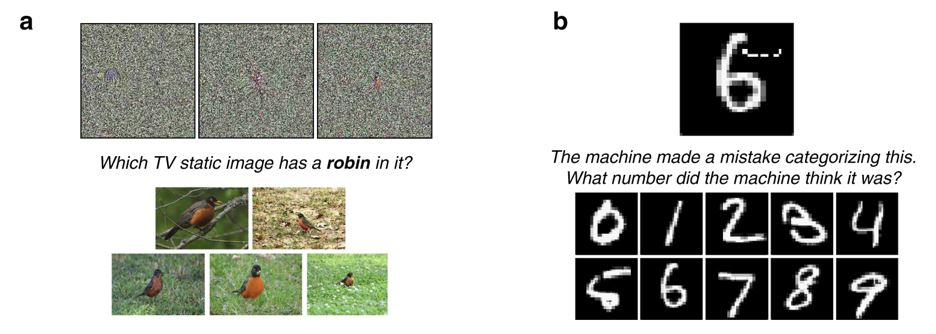

Another experiment used “television static” images, which are pictures that look like CRT TV static, but with some colors and a vague subject. Given a prompt (“robin”) and a set of images of a robin, subjects had to choose which of three television-static images showed the bird.

The “perturbed digits” experiment, in which an otherwise obvious image is purposely defaced to make a CNN think it’s a different object, illustrates some of the more consequential implications of the study. “One could imagine, for example, a malicious actor altering a speed limit sign in this way, which might fool an autonomous vehicle into recognizing a ‘Speed Limit 45’ sign as a ‘Speed Limit 75’ sign and then dangerously accelerating as a result,” the authors noted in the paper.

What’s particularly tricky about this test for humans is that we can often see right through the ruse. In the paper’s example, we can see that a handwritten “6” just has a few dashes added next to it. No human would think it’s anything other than a 6, but lo and behold, the CNN misclassified this perturbed image. The true task for the human subjects, then, was to overcome what was obvious to them and figure out what the machine could have possibly misunderstood. The results show that we’re capable of thinking like a machine, even when the machine is confused.

That finding held even when the researchers simulated a localized adversarial attack that perturbed far fewer pixels than the digits experiment but did so with images of more natural objects, like a train or an orange. The Inception V3 CNN had misclassified the 22 (slightly perturbed) test images, and human subjects had to determine which of two seemingly bizarre and unrelated mis-labels the CNN ascribed to each image. In other words, given a picture of a train, did the machine think it saw a rooster or an old-timey milk can?

The results, the researchers wrote, were remarkable. For lack of a better idiom, humans were on the same page with the machines most of the time, far above any measure of chance. “We conclude that human intuition is a more reliable guide to machine (mis) classification than has typically been imagined,” reads the paper.

Know your machines

The broad conclusion of the study is that humans are pretty adept at deciphering adversarial images, even from a machine learning model’s point of view. “The present results suggest that human intuition is a reliable source of information about how machines will classify images — even for adversarial images that have been specifically designed to fool the machine,” it reads.

But in speaking with Firestone, it’s clear he has a broader and more nuanced take on what the research is uncovering. “Any time you interact with a new piece of technology, one thing that you need to do is not only know how to use it in the cases where you’re supposed to use it, but you also need to know about when it will fail.” He gave the example of AI assistants like Siri or Alexa; we know we can’t always use colloquial phrases to communicate with them, or perform voice commands with a mouthful of food.

“The same thing is going to have to be true for machine vision technology,” Firestone said. “If you’re a radiologist who uses one of these machines to help you screen for cancer, or if you’re passively operating a car that’s driving itself, you are going to have to know [when] you’re in one of those situations when the machine doesn’t work the way it’s supposed to.” Lives may depend on your knowledge and awareness.

He said that the results of this study are actually hopeful, an early indicator that humans can learn how and when our machines will fail or screw up, like understanding that at sunset your autonomous car is dealing with problematic lighting conditions different from those in its training environment.

Though hopeful, Firestone is frank about the challenges involved: “You’re going to have to start developing an intuition for when your machines succeed and for when they fail.”

The good news, as the study showed, is that we’re capable of doing so.