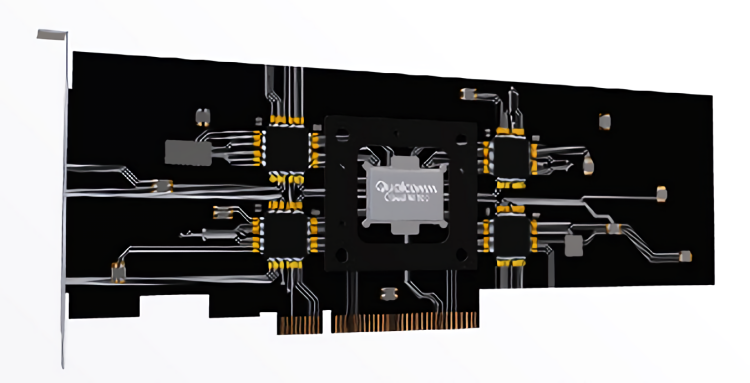

Qualcomm kicked off its annual AI Day conference in San Francisco with a bang. It took the wraps off of three new system-on-chips bound for smartphones, tablets, and other mobile devices, and if that weren’t enough, it announced a product tailor-made for edge computing: the Qualcomm Cloud AI 100.

“It’s a whole new signal processor that we designed specifically for AI inference processing,” said senior vice president of product management Keith Kressin, adding that sampling will begin in the second half of this year ahead of production next year. “[We’re not] just reusing a mobile chip in the datacenter.”

The Cloud AI 100 — which will be available in a number of different modules, form factors, and power levels from original device manufacturers — integrates a full range of developer tools, including compilers, debuggers, profilers, monitors, servicing, chip debuggers, and quantizers. Additionally, it supports runtimes including ONNX, Glow, and XLA, as well as machine learning frameworks like Google’s TensorFlow, Facebook’s PyTorch, Keras, MXNet, Baidu’s PaddlePaddle, and Microsoft’s Cognitive Toolkit.

Qualcomm estimates peak performance at 3 to 50 times that of the Snapdragon 855 and Snapdragon 820, and it claims that, compared with traditional field-programmable gate arrays (FPGAs, integrated circuits designed to be configured after manufacturing), the chip is about 10 times faster on average in inferencing tasks. Measured in tera operations per second (TOPS), a common performance metric for high-performance chips, the Cloud AI 100 can hit "far greater" than 100 TOPS. (For comparison's sake, the Snapdragon 855 maxes out at around 7 TOPS.)

“FPGA or GPUs [can often do] AI inference processing more efficiently … [because] a GPU is a much more parallel machine, [while] the CPU is [a] more serial machine, [and] the parallel machines are better for AI processing,” Kressin explained. “But still, a GPU is more so designed for graphics, and you can get a significant improvement if you design a chip from the ground up for AI acceleration. There’s about an order of magnitude improvement for a CPU to FPGA or GPU. There’s another order of magnitude improvement opportunity for custom-built AI accelerator.”

Qualcomm’s foray into cloud inferencing comes after a chief rival, Huawei, unveiled what it said was the industry’s highest-performance Arm-based processor, dubbed Kunpeng 920. In SPECint — a benchmark suite of 12 programs designed to test integer performance — that chip scored over 930, or almost 25 percent higher than the industry benchmark, while drawing 30 percent less power than “that offered by industry incumbents.”

It’s hardly the only one.

In January at the Consumer Electronics Show in Las Vegas, Intel detailed its forthcoming Nervana Neural Network Processor (NNP-I), which will reportedly deliver up to 10 times the AI training performance of competing graphics cards. Google last year debuted Edge TPU, a purpose-built ASIC for inferencing, and Alibaba announced in December that it aimed to launch its first self-developed AI inference chip in the second half of this year.

Elsewhere, Amazon recently took the wraps off its own AI cloud inference chip, AWS Inferentia, and Microsoft previewed a comparable FPGA-based platform in Project Brainwave. Facebook in March open-sourced Kings Canyon, a server chip for AI inference, and just this month, Intel announced Agilex, a family of FPGAs optimized for AI and big data workloads.

But Qualcomm is confident that the Cloud AI 100’s performance advantage will give it a leg up in a deep learning chipset market forecast to reach $66.3 billion by 2025.

“So many are putting network hardware on the cloud edge, like a content delivery network for different types of processing, whether it’s cloud gaming, or AI processing. So this is really another key trend. And Qualcomm has the opportunity to participate all the way from the end user input technology, all the way until the cloud edge,” Kressin said.

Its other potential advantage? Ecosystem support. In November, Qualcomm pledged $100 million toward a startup fund focused on edge and on-device AI, specifically in autonomous cars, robotics, computer vision, and internet of things domains. And last May, it partnered with Microsoft to create a vision AI developer kit for the AI accelerators embedded within many of its system-on-chips.

“In terms of market size, inferencing [is] becoming a significant-sized market for silicon,” Kressin said. “[As] time progresses, [we expect that] inference [will become a] bigger part of it, over 2018 to 2025, about 10 times growth. We’re pretty confident we’ll be in a position to be the power performance leader [for] AI processing and the data.”