Humans have scribbled likenesses of people, places, and things for tens of thousands of years, as evidenced by the walls and ceilings of Chauvet Cave in France and subterranean passageways in Borneo. But AI is catching up. In a newly published preprint paper on Arxiv.org (“Teaching GANs to Sketch in Vector Format”), researchers propose a neural network, made up of layers of mathematical functions modeled after biological neurons, that can generate stroke-based sketches in vector format (i.e., lightweight files that don’t degrade when transformations are applied to them).

The key turned out to be generative adversarial networks (GANs), two-part neural networks consisting of a generator that produces samples and a discriminator that attempts to distinguish the generated samples from real-world samples. The team first devised a novel GAN-based architecture, SkeGAN, which they pretrained on 50% of a publicly available data set for 73,500 iterations (38,500 iterations for the generator and 35,000 for the discriminator). Then they created a second AI system dubbed VASkeGAN, a combination of variational autoencoders (a deep learning technique for learning latent representations) and GANs, which served as a baseline.

For training data, they drew on Google’s Quick Draw Dataset, which comprises over 75,000 samples across 345 different domains, and compiled a set with cat, firetruck, mosquito, yoga poses, and other sketch categories selected for their “diversity.” (On a PC with an Nvidia GeForce GTX 1080 Ti, the total time to train SkeGAN was over 55 hours.) To quantify the quality of the generated drawings, the researchers devised a metric, Ske-score, defined as the ratio of the number of times the pen is lifted to the number of times it touches the paper while the sketch is being drawn. And in a separate test, they tasked 45 human subjects with rating the sketches’ clarity, drawing skill, and “naturalness” on a scale of 1 to 5.
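To make the Ske-score definition concrete, here is a minimal Python sketch of how such a ratio could be computed. It assumes each sketch is a list of `(dx, dy, pen_lifted)` points, where `pen_lifted` is 1 if the pen leaves the paper after that point and 0 if it stays down. That encoding, the `ske_score` function name, and the reading of "touches" as pen-down points are assumptions made for illustration; the paper's exact computation may differ.

```python
from typing import Sequence, Tuple

# Hypothetical point encoding: (dx, dy, pen_lifted), with pen_lifted in {0, 1}.
Point = Tuple[float, float, int]


def ske_score(sketch: Sequence[Point]) -> float:
    """Ratio of pen-lift events to pen-down points in one sketch.

    Assumption: a point with pen_lifted == 1 counts as one lift, and a
    point with pen_lifted == 0 counts as one touch.
    """
    lifts = sum(1 for _, _, pen_lifted in sketch if pen_lifted == 1)
    touches = sum(1 for _, _, pen_lifted in sketch if pen_lifted == 0)
    if touches == 0:
        raise ValueError("sketch has no pen-down points")
    return lifts / touches


if __name__ == "__main__":
    # Two strokes: three pen-down points and two lifts.
    two_strokes = [(1.0, 0.0, 0), (0.0, 1.0, 0), (1.0, 1.0, 1),
                   (2.0, 0.0, 0), (0.0, 2.0, 1)]
    # A continuous scribble: the pen never leaves the paper.
    scribble = [(1.0, 0.0, 0)] * 5
    print(ske_score(two_strokes), ske_score(scribble))
```

Under these assumptions, a drawing in which the pen never lifts scores 0, so the metric separates continuous scribbles from sketches built out of distinct strokes.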

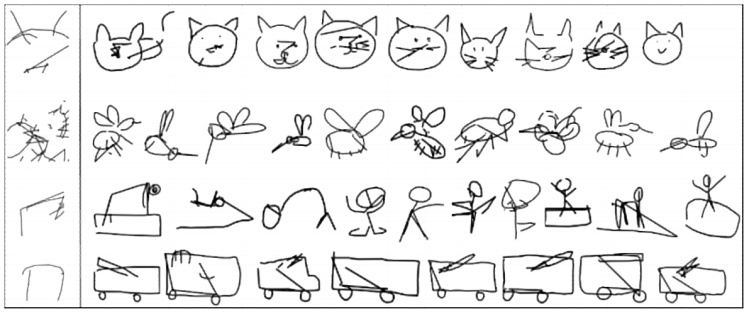

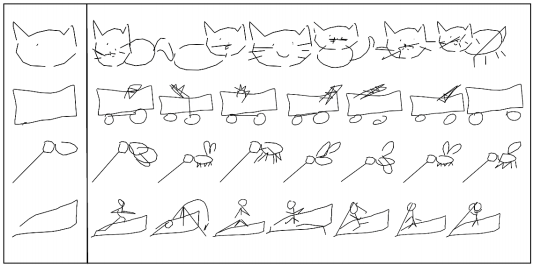

Above: Sketches generated by the model.

The team reports that SkeGAN’s sketches were better both qualitatively and quantitatively than those generated by VASkeGAN, and that they were both “clearer” and more natural than the sketches in the training data set. Additionally, they observed that by varying the temperature, a variable that controls the randomness of the model’s predictions, they could optimize for “extra visually appealing” features not present in the input images, like changes in the position of whiskers in generated cat sketches.
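For readers unfamiliar with temperature, the following Python sketch shows the general technique on a small categorical distribution: raw scores (logits) are divided by the temperature before the softmax, so low values make the model's choices more deterministic and high values make them more random. The function names and example logits are hypothetical, and this is not SkeGAN's actual sampling code, which the article does not describe.

```python
import math
import random
from typing import List, Optional, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Turn logits into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(logits: Sequence[float], temperature: float,
                 rng: Optional[random.Random] = None) -> int:
    """Draw one index according to the temperature-adjusted distribution."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]  # illustrative scores for three possible next moves
    for t in (0.2, 1.0, 5.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

At a temperature of 1.0 this reduces to the ordinary softmax. Lower temperatures concentrate probability on the highest-scoring option, while higher temperatures spread it more evenly across all options.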

“[Importantly], SkeGAN overcome[s] the ‘scribble effect’ … wherein the pen is not lifted to draw at another location, but stays on the paper and continues to scribble,” the researchers wrote. “Future directions include generalizing this work to larger vector-art datasets, including cartoons.”