Machine learning models have a persistent drawback: Tuning them from scratch for a specific application is costly in both time and computing resources. Some automated approaches try to speed up the process by searching for suitable existing models, but researchers at Google’s AI research division have a better idea.

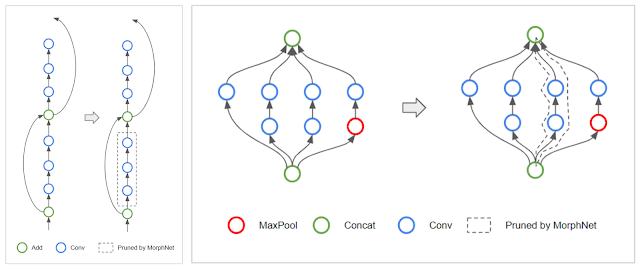

In a blog post published this afternoon and an accompanying paper (“MorphNet: Fast & Simple Resource-Constrained Structure Learning of Deep Networks”), they describe MorphNet, a technique available in open source on GitHub that takes existing neural networks as input and simplifies their architectures. The result is smaller, faster, and better-performing models that can be applied to “Google-scale” problems, said senior software engineer Andrew Poon and Google AI Perception product manager Dhyanesh Narayanan.

So how’s it work? Well, MorphNet runs a neural network through a cycle of shrinking and expanding phases. In the shrinking phase, it picks out inefficient neurons (the fundamental building blocks of most modern models) and prunes them, using a mechanism that calculates the cost of each neuron, and by extension its efficiency, with respect to the resource being targeted. During the expanding phase, meanwhile, MorphNet uses a width multiplier to expand all layers of neurons within the model uniformly, such that computational resources from less efficient parts are reallocated where they might be “more useful.”

Above: MorphNet optimizing an AI model.
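
To make the shrink-and-expand cycle concrete, here is a minimal Python sketch. It is not MorphNet's implementation: the function names (`flops`, `shrink`, `expand`), the toy stack of fully connected layers, and the hand-written per-neuron scores are all assumptions made for illustration, with the scores standing in for whatever signal the real system learns during training.

```python
def flops(widths, input_size):
    """Multiply-accumulate count for a stack of fully connected layers."""
    total, fan_in = 0, input_size
    for width in widths:
        total += fan_in * width
        fan_in = width
    return total


def shrink(scores, threshold):
    """Prune neurons scoring below `threshold`; keep at least one per layer.

    `scores` holds one list of hypothetical importance scores per layer.
    Returns the surviving width of each layer.
    """
    return [max(1, sum(1 for s in layer if s >= threshold)) for layer in scores]


def expand(widths, input_size, budget, max_pct=1000):
    """Scale every layer by the same multiplier, as large as the budget allows.

    The multiplier is searched in 1% steps, starting at 100%. If even the
    unscaled widths exceed the budget, they are returned unchanged.
    """
    best = list(widths)
    for pct in range(100, max_pct + 1):
        candidate = [max(1, w * pct // 100) for w in widths]
        if flops(candidate, input_size) > budget:
            break
        best = candidate
    return best


# Toy network: 10 inputs, then layers of 8 and 4 neurons.
input_size = 10
scores = [
    [0.9, 0.8, 0.05, 0.7, 0.01, 0.6, 0.02, 0.5],  # three weak neurons
    [0.9, 0.8, 0.7, 0.6],                         # no weak neurons
]
budget = flops([8, 4], input_size)                # 112, the original cost

shrunk = shrink(scores, threshold=0.1)            # [5, 4]
expanded = expand(shrunk, input_size, budget)     # [7, 6]
print(shrunk, expanded, flops(expanded, input_size))
```

In this toy run the network ends up at the same cost it started with, but one neuron has moved out of the first layer and two have been added to the second, which is the kind of reallocation the cycle is meant to produce.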

MorphNet is customizable, to a certain extent. Poon and Narayanan point out that users can halt it after the shrinking phase to yield a more efficient (but slightly less accurate) network, for instance, or target its sparsification at the reduction of a particular resource, such as floating-point operations (FLOPs) per inference or model size. “When optimizing for computation cost, higher-resolution neurons in the lower layers of the network tend to be pruned more than lower-resolution neurons in the upper layers,” they explain. “When targeting smaller model size, the pruning tradeoff is the opposite.”
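
The tradeoff in that quote follows from simple arithmetic on convolutional layers, as the Python sketch below shows. The two layers and their shapes are hypothetical, the `ConvLayer` class is an illustrative helper rather than part of MorphNet, and the cost formulas are the standard ones for a convolution. The sketch also ignores the savings that removing a neuron brings to the following layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvLayer:
    """A convolutional layer described only by the shapes that drive its cost."""
    name: str
    kernel: int        # square kernel side, e.g. 3 for a 3x3 convolution
    in_channels: int
    out_height: int    # spatial resolution of the output feature map
    out_width: int

    def params_per_neuron(self) -> int:
        # Weights owned by one output channel: kernel * kernel * in_channels.
        return self.kernel * self.kernel * self.in_channels

    def flops_per_neuron(self) -> int:
        # Those weights are applied once at every output position.
        return self.params_per_neuron() * self.out_height * self.out_width


# Hypothetical shapes: an early high-resolution layer with few channels
# and a late low-resolution layer with many channels.
lower = ConvLayer("lower", kernel=3, in_channels=32, out_height=112, out_width=112)
upper = ConvLayer("upper", kernel=3, in_channels=512, out_height=7, out_width=7)

for layer in (lower, upper):
    print(layer.name, layer.flops_per_neuron(), layer.params_per_neuron())
```

With these shapes, a neuron in the lower layer costs about 16 times more FLOPs than one in the upper layer but holds 16 times fewer weights. A FLOP target therefore makes lower-layer neurons the more expensive ones to keep, while a model-size target does the same for upper-layer neurons.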

MorphNet confers other advantages. It’s able to learn the structure of a network in a single training run and can be applied directly to data sets. It also decides automatically how many neurons each part of a model should have, removing them selectively so as not to cripple performance. Perhaps best of all, the optimized models it produces can be trained as usual, without the need to follow special training recipes.

Poon and Narayanan say that, applied to the popular computer vision model InceptionV2 trained on the open source ImageNet data set, MorphNet reduced FLOP cost by 11% to 15% without sacrificing accuracy. Repeating the shrink/expand cycle led to an accuracy gain of 1.1%.

“We’ve applied MorphNet to several production-scale image processing models at Google. Using MorphNet resulted in significant reduction in model-size/FLOPs with little to no loss in quality,” they said.