The unemployment rate for people with disabilities is more than double that of those without, according to the U.S. Bureau of Labor Statistics. Just as sobering, it’s estimated that only one in 10 members of the disability community has access to devices adapted to their needs.

There’s no silver bullet. But if you ask Microsoft, the seeds of a solution lie in AI and machine learning.

“We’ve come to know that AI-powered experiences can get a lot of information to a person with a disability, which ultimately empowers their independence,” Mary Bellard, senior accessibility architect at Microsoft, told VentureBeat in an interview ahead of the company’s annual Build developer conference in Seattle. “[We’re working] to make sure we’re not just developing tech for tech’s sake, [but] working on technology that a particular disability community wants and is interested in driving with us.”

Bellard, who has worked in accessibility for 11 years, joined Microsoft in 2014 and played a pivotal role in launching the Disability Answer Desk, a support line that customers can call to resolve accessibility issues, get answers to usability questions, and learn about the assistive technologies in Microsoft’s consumer products. Under the purview of chief accessibility officer Jenny Lay-Flurrie, Bellard oversees accessibility work within the company’s Windows, Office, and Azure product divisions, and she and colleagues collaborate with nongovernmental organizations that serve people with disabilities around the world.

“We pay very close attention to what that community wants and needs, and we collaborate with them on a variety of different projects,” said Bellard. “It’s pretty amazing how people and organizations … are looking to [Microsoft’s] platforms and our tools and saying, ‘I want to use this for our solution.’”

AI for Accessibility

According to Bellard, strong customer demand for assistive technologies development platforms catalyzed the launch of AI for Accessibility, Microsoft’s second AI for Good initiative. It followed on the heels of — and was largely modeled after — the company’s AI for Earth program, which provides training and resources to organizations looking to tackle problems relating to climate, water, agriculture, and biodiversity.

“We wondered if a similar [approach] would work for the disability community and also the innovation community that works on the technology for people,” said Bellard.

Through AI for Accessibility, which was announced in May 2018, Microsoft pledged $25 million over the following five years for universities, philanthropic organizations, and others developing AI tools for those with disabilities. The program is overseen by Lay-Flurrie, Bellard, and others and rewards the most promising cohort of candidates in three categories — work, life, and human connections — with seed grants and follow-on financing each fiscal quarter.

Proposals are accepted on a rolling basis and are evaluated “on their scientific merit,” Bellard says, in addition to their innovativeness and scalability. Selected applicants receive compute credits for Microsoft’s Azure AI Platform in increments of $10,000, $15,000, or $20,000, depending on their project’s scope and needs, and additional funds to cover costs related to collecting or labeling data, refining models, or other engineering-related work. They also gain access to Microsoft engineers, who work with them to accelerate development and incorporate their innovations into “platform-level” partner services.

It’s still early days, Bellard says. But nearly a year in, AI for Accessibility has already borne fruit.

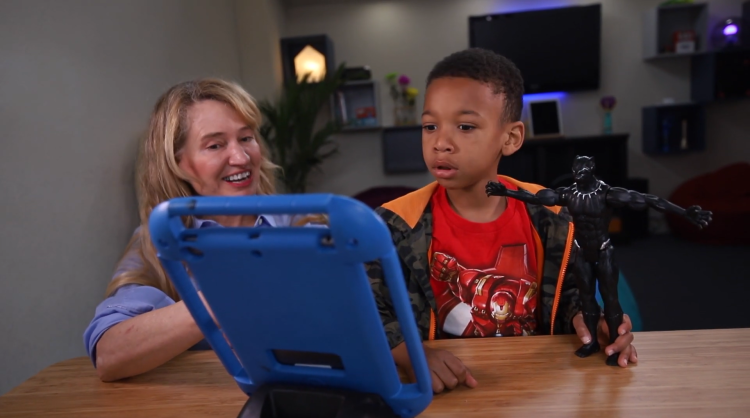

California-based iTherapy was one of the first to receive a grant, which it used to add chatbots and 3D avatars to its communication skills app, InnerVoice. Cofounders Lois Brady and Matthew Guggemos, both of whom have backgrounds in speech pathology and specializations in assistive technologies and autism, say there’s evidence the newly implemented AI features are boosting engagement.

“Motivation is one of the biggest factors in learning [communication skills],” said Guggemos. “You have to be really motivated to learn any language. And so creating something that’s entertaining [and] motivating is a huge advantage. Having AI chatbots [and] algorithms that can apply the use of language [to] content [is] a big game-changer for kids with autism [and] other communication challenges.”

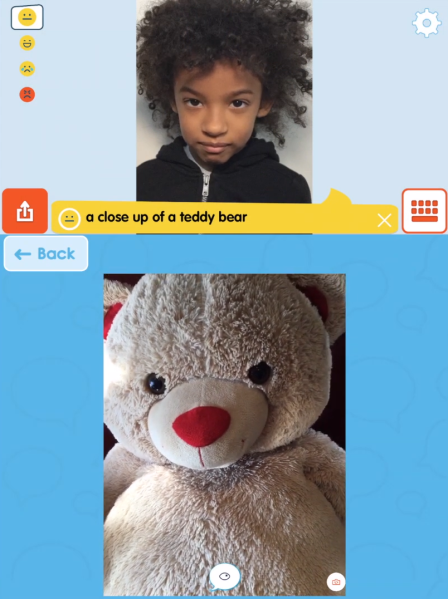

To that end, InnerVoice combines avatars with written text, pictures, and video to create experiences that help learners identify the connections between speech and language. Its videos abstract concepts, while the avatars label what’s happening using facial expressions and emotional tone of voice, and users practice conversations with the avatars — a superhero, drawing, or photograph of a loved one — and learn words by taking pictures that machine learning algorithms detect and label.

InnerVoice is being piloted with nonverbal children in a Missouri school district of 18,000 students, and recent end-of-the-year tests showed an improvement in language skills among those who used it regularly. Clinical studies are forthcoming, but if all goes well, Brady believes InnerVoice could become an invaluable tool for the estimated 6 million to 8 million Americans who experience some form of language impairment.

“I think, traditionally, many [speech pathology] devices and apps that get recommended to people get abandoned,” she said. “They’re often too hard to work with, or they’re cumbersome. When you add in AI, they become much easier to use; you can almost instantly customize them.”

AI throughout

Microsoft’s AI accessibility efforts extend beyond AI for Accessibility, of course.

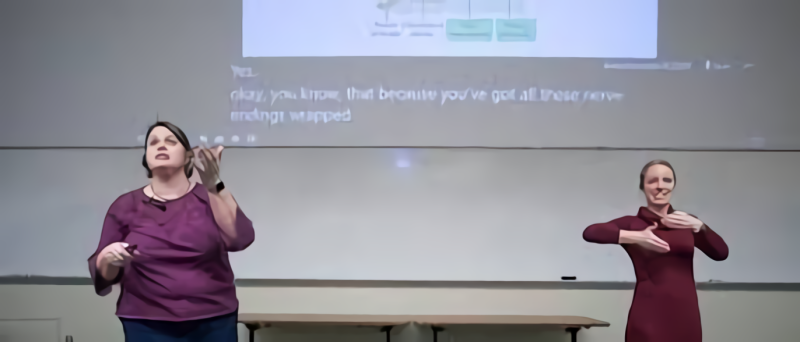

The company recently partnered with the Rochester Institute of Technology to supply Microsoft Translator, its AI-powered translation tool, to transcribe classroom lectures in real time for the more than 1,500 enrolled students who are deaf and hard of hearing. The system converts speech into fluent, punctuated text and learns technical vocabulary through materials fed through Microsoft’s Custom Speech Service (CSS). Closed captions are projected onto lecture hall screens via an add-in to Microsoft PowerPoint, called Presentation Translator, and students can access the captions via a laptop, phone, or tablet or translate them into more than 60 languages.

Above: Closed captions in several RIT classrooms are powered by Microsoft AI technologies.

“This is really changing the inclusive classroom experiences that these college students are having,” Bellard said. “More and more organizations, startups, and researchers want to do this kind of thing.”

Another of Microsoft’s accessibility partners — Toulouse, France-based Equadex — developed a tool that provides a visual representation of language to children with autism, facilitating social interaction between them and parents, teachers, and clinicians. Using Microsoft’s Cognitive Services and Azure tools, it converts speech to sentences in real time, determines the command’s intent, and serves up relevant images from a database.

Microsoft has fostered internal accessibility efforts, too, in part through company-wide hackathons. Bellard was part of the team that architected Seeing AI, a mobile app designed to help low- and impaired-vision users navigate the world around them. It taps computer vision and text-to-speech systems to “read” sentences placed in front of a smartphone’s camera, digitize printed pages while retaining their original formatting, recognize friends and people (and their emotions), describe colors, identify currency bills, and generate audible tones corresponding to ambient brightness.

Above: The Eye Controlled Wheelchair.

In 2014, a Microsoft Enable team participating in the company’s One Week dev jam developed the Eye Controlled Wheelchair, a tool that enables wheelchair users to drive with eye movements alone. The prototype — which was inspired by an email from former NFL player Steve Gleason, who suffers from the neurodegenerative disease amyotrophic lateral sclerosis (ALS) — won the top prize out of 3,000 projects from across Microsoft, and it spurred the creation of a dedicated eye-tracking support team within the company’s Windows division.

Eye control was that team’s first product. It’s a Windows 10 feature that works in tandem with a compatible eye tracker to let people with disabilities operate a mouse and keyboard with gaze, courtesy of a custom-designed taskbar that’s an extension of the on-screen keyboard, mouse emulation, and new scrolling interfaces.

This work, in turn, informed a Microsoft experiment involving a suite of eye-controlled music composition tools dubbed Sound Jam and Sound Machine. Sound Jam — an environment for loop-based recording — features design paradigms found in commercial music production software that have been adapted for eye control, while Sound Machine, a 16-step sound sequencer, supports output to physical instruments, as well as stage effects.

Above: A woman using Microsoft’s Seeing AI app.

Last September saw the launch of Microsoft’s Soundscape, a navigation app that uses binaural audio (sound recorded with two microphones arranged to create a 3D stereo sound sensation) to help visually impaired users build mental maps and make personal route choices in unfamiliar spaces. Its algorithms generate audio cues by ingesting GPS data, the open source mapping database OpenStreetMap, and activity sensor readings, which indicate when a self-set audio beacon is within walking distance.
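To make the "within walking distance" idea concrete, here is a minimal sketch of how an app might decide whether a user-set beacon is close enough to announce. It is not Soundscape's actual implementation, which the article does not describe. The function names, the example coordinates, and the 500-meter threshold are all assumptions chosen for illustration, and the distance is computed with the standard haversine formula.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def beacon_in_range(user, beacon, max_walk_m=500.0):
    """Return True if the beacon lies within max_walk_m of the user.

    `user` and `beacon` are (latitude, longitude) tuples in degrees.
    The 500 m default is a hypothetical threshold, not a Soundscape setting.
    """
    return haversine_m(*user, *beacon) <= max_walk_m


if __name__ == "__main__":
    user = (47.6062, -122.3321)    # hypothetical GPS fix
    beacon = (47.6072, -122.3321)  # hypothetical beacon about 0.001 degrees north
    print(beacon_in_range(user, beacon))
```

A real navigation app would also need to account for GPS error and for walking routes rather than straight-line distance, which is where map data such as OpenStreetMap would come in.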

More recently, Microsoft brought Accessibility Checker — which analyzes content and provides recommendations to ensure it can be consumed by people with varying levels of vision, hearing, cognition, and mobility — to Word, Excel, PowerPoint, OneNote, Outlook, and Visio.

Data shortage

AI models “learn” to perform tasks by ingesting massive data sets, some of which comprise millions of samples. Emerging techniques like semi-supervised learning and transfer learning promise to reduce the need for large corpora, but conventional methods exacerbate the challenge for accessibility researchers, who are often forced to source training data from the relatively small data sets collected from the disability community.

“There is not enough data today from people with disabilities in the data sets available, and when data is collected from people with disabilities, it is often not enough. It often results in it being an edge case within a data set and ultimately within a model, and so it doesn’t really offer great value,” said Bellard. “We think that we have a unique opportunity to do our part in increasing valuable, clean, and labeled data for people with disabilities in very practical scenarios.”

She gave a concrete example: sign language. It’s used by approximately 2 million people in the U.S. who can’t understand standard speech, roughly 500,000 of whom are deaf. But one of the largest open source sign language data sets contains only 31,000 samples, compared with the hundreds of thousands of samples in speech corpora like Mozilla’s Common Voice.

“If you think about traditional natural language processing … you have to have some sort of language model. And in almost every case, that language model is built off of textual representation of a language,” said Bellard. “In the deaf community, it is a common experience to use a visual language like sign language, and these languages are not gestural representations of spoken languages, but unique linguistic languages. There’s no cohesive written representation of a sign language.”

Fortunately, thanks in part to Microsoft’s efforts, data collection in other accessibility domains is gathering steam.

Microsoft researchers last year worked with a professor at RIT to build a custom language model that could better recognize users whose speech was impacted by deafness. And in January, Kyle Rector, a University of Iowa assistant professor of computer science (and another grant recipient), collaborated with scientists at the State University of New York-Brockport to create a labeled data set of different outdoor walking and jogging tracks and used it to develop a mobile app that alerts visually impaired users when they veer from a lane.

“[These examples] really speak to the importance of including people with disabilities in data collection,” said Bellard. “People with disabilities play a very pivotal role in the future of AI-powered technologies.”