Today Google introduced new features for Lens, its visual search and computer vision tool that can recognize plants, animals, text, celebrities, and over a billion shopping items.

Google Lens will soon be able to highlight the top dishes at a restaurant when you point your camera at the menu. Ratings and reviews for individual dishes, drawn from online reviews, will also be available.

The news was shared today at Google’s I/O developer conference at the Shoreline Amphitheatre in Mountain View, California.

Also coming soon:

- Split a bill or calculate a tip after a meal by pointing your camera at your receipt

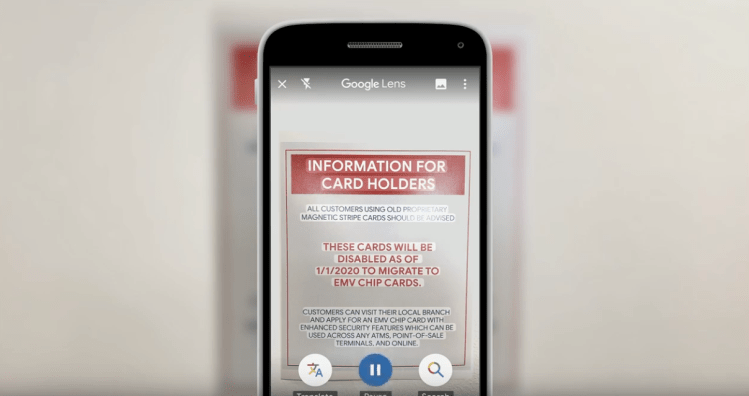

- Read signs and other text for people who can’t read or don’t understand the printed language. Visual readings and translations are available in more than 100 languages.

- Video visible in the physical world using Lens, starting with the de Young Museum in San Francisco next month and the next issue of Bon Appétit magazine.

Google Assistant has gradually been integrated not just into Google Home speakers and the Android operating system but also into a number of Google's native apps, like Maps and Android Auto.

Lens began as a feature exclusive to Pixel smartphones, but it has since spread to Google Photos and become a native feature in flagship smartphones from companies like Sony.

As cool as that may sound, Lens is not perfect. Its text recognition can be pretty accurate, but its object recognition can occasionally mistake weeds for trees or cats for caterpillars.

Google introduced a Lens redesign last December.