Microsoft AI researchers today said they’ve created a Multi-Task Deep Neural Network (MT-DNN) that incorporates Google’s BERT AI to achieve state-of-the-art results. The MT-DNN set new performance records on 7 of the 9 NLP tasks in the General Language Understanding Evaluation (GLUE) benchmark.

The original MT-DNN model, which also builds on BERT, was introduced by Microsoft AI researchers in January. It likewise achieved state-of-the-art performance on several natural language tasks and set new GLUE records at the time.

The new approach combines multi-task learning with a knowledge distillation method first introduced in 2015 by Google’s Geoffrey Hinton and AI chief Jeff Dean. Microsoft plans to open-source the MT-DNN model for learning text representations on GitHub in June, according to a blog post published today.

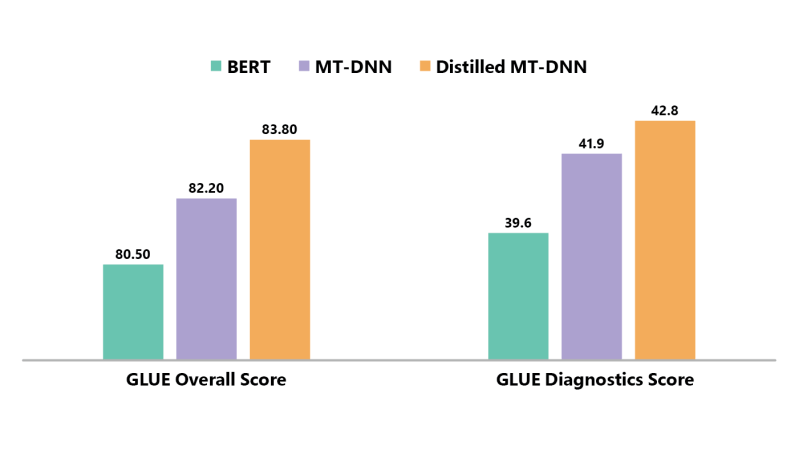

The new distilled MT-DNN model performed better on GLUE tests than both BERT and the original MT-DNN.

“For each task, we train an ensemble of different MT-DNNs (teacher) that outperforms any single model, and then train a single MT-DNN (student) via multi-task learning to distill knowledge from these ensemble teachers,” reads a summary of the paper “Improving Multi-Task Deep Neural Networks via Knowledge Distillation for Natural Language Understanding.”
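The teacher-student setup described in that summary can be illustrated with a small Python sketch. This is not Microsoft's implementation: the function names, the `alpha` mixing weight, and the `temperature` parameter are assumptions made for illustration. It averages the class probabilities of several teacher models into soft targets, then scores a student against both those soft targets and the true label.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_soft_targets(teacher_logits, temperature=1.0):
    """Average the class probabilities predicted by each teacher model."""
    probs = [softmax(logits, temperature) for logits in teacher_logits]
    num_classes = len(probs[0])
    return [sum(p[c] for p in probs) / len(probs) for c in range(num_classes)]


def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy of the predicted distribution against the target one."""
    return -sum(
        t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0
    )


def distillation_loss(student_logits, teacher_logits, hard_label,
                      alpha=0.5, temperature=1.0):
    """Hypothetical combined loss: alpha weights the teachers' soft targets,
    (1 - alpha) weights the ground-truth label."""
    soft_targets = ensemble_soft_targets(teacher_logits, temperature)
    soft_loss = cross_entropy(soft_targets, softmax(student_logits, temperature))
    one_hot = [1.0 if i == hard_label else 0.0 for i in range(len(student_logits))]
    hard_loss = cross_entropy(one_hot, softmax(student_logits))
    return alpha * soft_loss + (1 - alpha) * hard_loss


# Two made-up teachers that both favor class 0 on a three-class task.
teachers = [[2.0, 0.0, -1.0], [1.5, 0.5, -0.5]]
loss_agree = distillation_loss([2.0, 0.0, -1.0], teachers, hard_label=0)
loss_disagree = distillation_loss([-1.0, 0.0, 2.0], teachers, hard_label=0)
```

In this toy setup, a student whose scores agree with the teachers gets a lower loss than one that disagrees. In the multi-task setting the paper describes, a loss of this kind would be computed per task while a single student model is shared across tasks.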

Bidirectional Encoder Representations from Transformers (BERT) was open-sourced by Google last fall. Google claims a state-of-the-art question answering system can be trained with BERT on a single Cloud TPU in about 30 minutes.

The news comes a day after Microsoft open-sourced an algorithm behind its Bing search engine and Google introduced Translatotron, an end-to-end translation tool that can adopt the tone of the original speaker’s voice.

A series of new features and hints about plans for the future were shared earlier this month at Microsoft’s annual Build developer conference and Google’s I/O developer conference.

At Build, Microsoft showcased how businesses can create AI assistants for their employees with Semantic Machines tech. The Bot Framework got an upgrade to better support multi-turn dialogue, and Azure Cognitive Services and Azure Machine Learning services were also upgraded. A new AI and robotics platform launched in limited preview, and the ONNX partnership for interoperable AI introduced Nvidia and Intel hardware optimization for faster inference.

At I/O, Google showcased what it’s like to use a Google Assistant powered by on-device machine learning and rolled out tools for Android app developers to connect with Google Assistant. Upgrades for ML Kit and its Cloud TPU service were also announced.