Watch all the Transform 2020 sessions on-demand here.

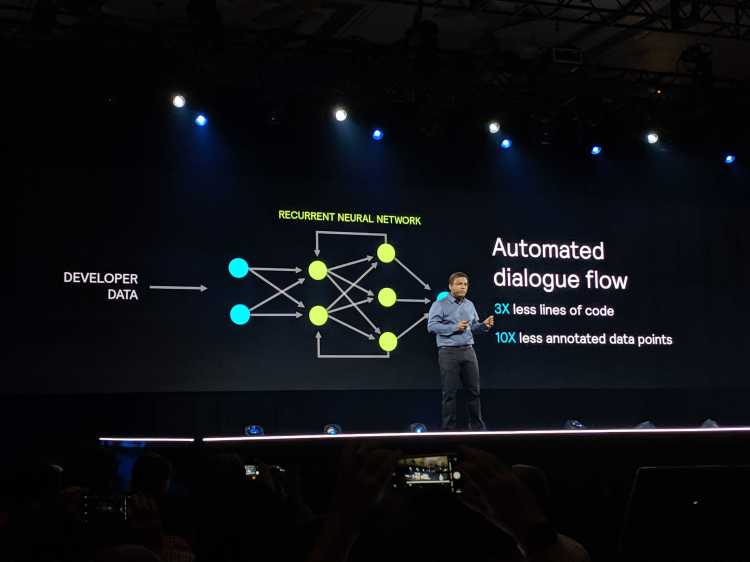

Amazon today introduced Alexa Conversations in developer preview, a deep learning-based way to build Alexa skills with multi-turn dialogue that can interconnect with other Alexa skills. Conversations cuts the code needed to create a voice app from 5,500 lines to 1,700, Amazon VP and head scientist for Alexa Rohit Prasad said today at the Amazon re:Mars conference in Las Vegas.

This reduces a series of interactions that may have taken 40 individual exchanges with Alexa skills down to about a dozen. Developers supply things like API access and a list of entities the API has access to, like movie or restaurant names, and then Conversations can handle the rest.

Multi-turn conversations with Alexa have been available for some time, but Alexa Conversations is designed to help people get more done quickly with the AI assistant's more than 90,000 skills. At launch, the experience is intended to help people make purchases: if you order a movie ticket, follow-up questions may connect you with the OpenTable skill to make a dinner reservation or the Uber skill to get a ride. Uber and OpenTable are early adopters of Alexa Conversations, along with the Atom Tickets skill for buying movie tickets.

The ability to string together Alexa skills begins with a night-out use case, Amazon VP of devices David Limp told a gathering of reporters. Additional use cases are in the works.

"It has been sort of the holy grail of voice science, which is how can you make a conversation string together when you didn't actually programmatically think about it end-to-end. We feel like we're on a path now where I think a year or two ago I would have said we didn't see a way out of that tunnel, but now I think the science is showing us that it will take us years to get more and more conversational, but this breakthrough is very big for us, tip of the iceberg," Limp said.

Alexa Conversations builds upon Amazon’s work to match words with voice apps, and it incorporates advances like the ability to say “Alexa, start cleaning” for smart home appliances like a Roomba robot to begin cleaning floors. It also draws on proactive features introduced in recent months, such as Hunches and Alexa Guard for home security.

The Alexa Presentation Language was introduced in public beta last fall to help Alexa skills developers make more visual experiences.

Alexa Conversations will use AI to better understand user intents so that developers making voice apps will not need to include all intents or ways a person may ask a question, Prasad said.

The approach appears similar to what Microsoft is developing with Cortana and Semantic Machines, and to what Google Assistant aims to achieve with Continued Conversation.

Eliminating the need to say a wake word like "Alexa" or "Hey, Google" can lead to more natural interactions and let people get more done intuitively, the way they might with a human assistant.

Updated 1:13 p.m. to include comments by Amazon VP of devices David Limp.