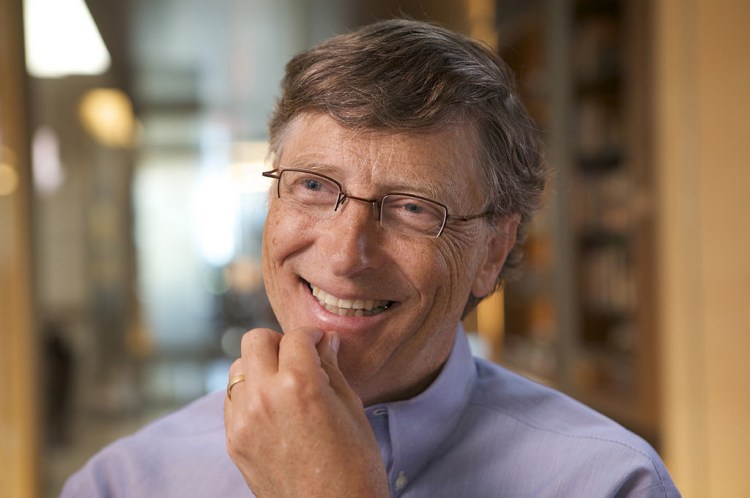

A pair of Facebook AI researchers used TED Talks and other data to build an AI system that closely mimics music and the voices of famous people, including Bill Gates. The system, MelNet, is a generative model trained on spectrograms, which are visual representations of audio, rather than on raw waveforms. Working with spectrograms lets the model capture structure that spans multiple seconds of audio, and the resulting models can perform end-to-end text-to-speech, unconditional speech generation, and solo piano music generation. MelNet was also trained to generate multi-speaker speech.

By contrast, well-known voice synthesizers such as Google’s WaveNet are trained on waveforms rather than spectrograms.

“The temporal axis of a spectrogram is orders of magnitude more compact than that of a waveform, meaning dependencies that span tens of thousands of timesteps in waveforms only span hundreds of timesteps in spectrograms,” Facebook AI researchers said in a paper explaining how MelNet was created. “Combining these representational and modelling techniques yields a highly expressive, broadly applicable, and fully end-to-end generative model of audio.”
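The scale difference the researchers describe can be illustrated with some quick arithmetic. The sketch below is not taken from the MelNet paper: the 22,050 Hz sample rate, the hop length of 256 samples, and the helper names are assumptions chosen only for illustration, and the frame count follows the common convention for a centered short-time Fourier transform.

```python
# Illustrative arithmetic only. The sample rate and hop length are assumed
# values, not figures reported in the article or confirmed for MelNet.

def waveform_timesteps(duration_s: float, sample_rate: int) -> int:
    """Number of raw audio samples (timesteps) in a clip."""
    return int(duration_s * sample_rate)


def spectrogram_frames(num_samples: int, hop_length: int) -> int:
    """Number of spectrogram frames for a centered STFT,
    where one frame is produced every `hop_length` samples."""
    return 1 + num_samples // hop_length


SAMPLE_RATE = 22_050   # assumed sample rate in Hz
HOP_LENGTH = 256       # assumed hop between frames, in samples
DURATION_S = 5         # a five-second clip

samples = waveform_timesteps(DURATION_S, SAMPLE_RATE)
frames = spectrogram_frames(samples, HOP_LENGTH)

print(f"waveform timesteps:    {samples}")
print(f"spectrogram timesteps: {frames}")
print(f"compression factor:    {samples / frames:.0f}x")
```

Under these assumed settings, a dependency that stretches across five seconds of audio covers more than 100,000 waveform samples but only a few hundred spectrogram frames, which is the gap the quote points to.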

To demonstrate MelNet’s prowess, researchers created a website with music and voice samples made by the AI system. Facebook AI research scientist Mike Lewis and AI resident Sean Vasquez published a MelNet paper on arXiv earlier this month.

To generate speech that sounds like George Takei, Jane Goodall, and luminary AI scholars such as Daphne Koller and Dr. Fei-Fei Li, researchers trained MelNet on several data sets, including voice recordings from more than 2,000 TED Talks.

The Blizzard 2013 data set of 140 hours of audiobooks was used to train MelNet’s single-speaker speech generation, while the VoxCeleb2 data set, which contains more than 2,000 hours of speech from speakers of more than 100 nationalities with a variety of accents, ethnicities, and other attributes, was used to train the model’s multi-speaker speech generation.

Creating MelNet also meant solving other challenges, such as producing high-fidelity audio and reducing information loss.