Watch all the Transform 2020 sessions on-demand here.

If the winners of last year’s international RobotArt competition are any indication, algorithms aren’t half bad at painting, sketching, and sculpting with humanlike precision. A newly published paper (“LPaintB: Learning to Paint from Self-Supervision“) on Arxiv.org lends credence to this: In it, researchers at the University of Maryland and Adobe Research describe a novel machine learning system — LPaintB — that can reproduce handpainted canvases in the style of Leonardo da Vinci, Vincent van Gogh, and Johannes Vermeer in less than a minute.

“With the development of non-photorealistic rendering techniques, including stroke-based rendering and painterly rendering, specially-designed, or hand-engineered methods can increasingly simulate the painting process by applying heuristics,” wrote the coauthors. “[T]hese algorithms can generate compelling results, but it is difficult to extend them to new or unseen styles … In this paper, we focus on building an intelligent painting agent that can reproduce a reference image in an identical or transformed style with a sequence of painting actions.”

The researchers’ approach leveraged self-supervised learning, in which unlabeled data is used in conjunction with small amounts of labeled data to produce an improvement in learning accuracy, to train an agent from scratch on a limited number of reference images. By modeling the system’s action states (i.e., paintbrush configurations like length, orientation, and brush size) mathematically and by replacing the goal state of failures with its final state, the team generated a paired corpus with positive rewards, which they supplied to the AI model such that it learned to paint reference images in the desired artistic style.

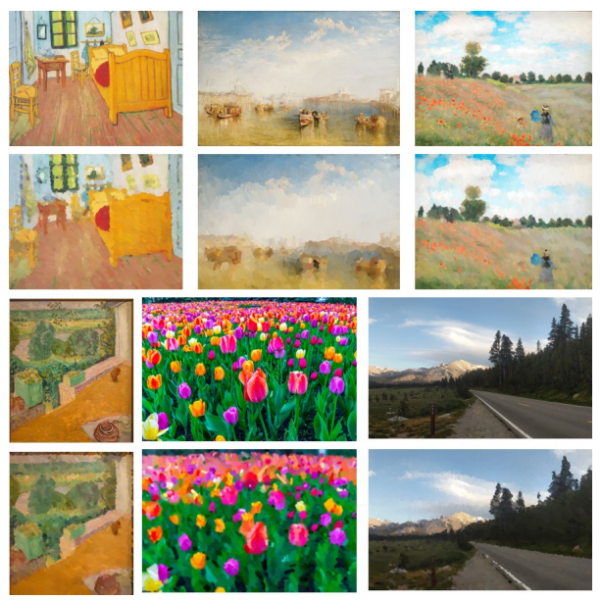

Above: For each pair, the top row is the reference image and bottom row is the AI agent’s result.

It wasn’t smooth sailing — at least not at first. The researchers note that typically, only a small portion of actions sampled by the system had positive rewards, a problem they addressed with a reinforcement learning technique that used the goal state as paired data to train a policy (a set of actions in response to states). But the generated policy wasn’t particularly robust, because the paired data used to train it consisted only of actions with positive rewards (which made it difficult to recover from undesirable actions that returned negative rewards) and states that were the result of successive series of actions. Solving this required reinforcement learning once again: It added noise to the action that helped to generalize the model, and it optimized the model’s actions with rewards.

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

The end result? An AI framework that could execute painting actions with parameters describing stroke size, color, and positional information and update a canvas accordingly, with a reward function that evaluated the distance between the current state and the goal state. To compile a training data set, the team drew random patches from reference images in a specific style at varying scales and sampled the patches to a fixed size. They fed these to the model, which after an hour of training was able to reproduce a 1,000 x 800 image with 20,000 strokes on a PC with a 16-core processor and an Nvidia GTX 1080 graphics chip in less than a minute.

The researchers note that the trained model’s generalization is highly dependent on the training data and that their method is based on a fairly basic painting environment, but they say that the combination of self-supervised and reinforcement learning greatly improves the policy’s efficiency and performance. The team leaves to future work incorporating stroke parameters like brush size, color, and position, as well as building a model-based reinforcement learning framework that can be built into a painting simulator.