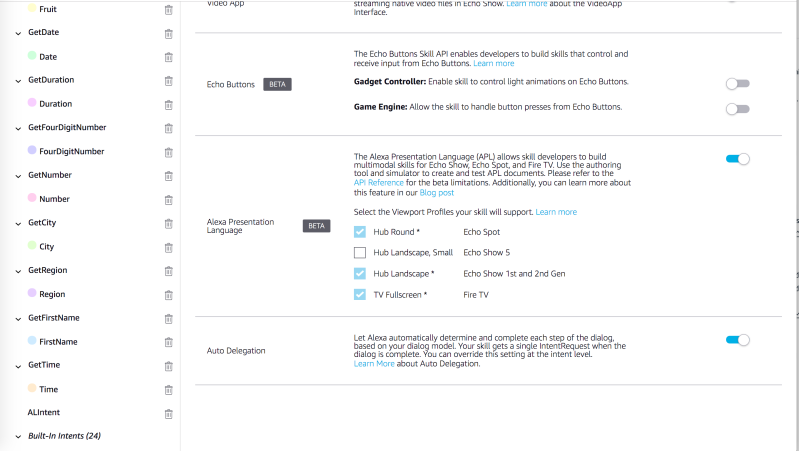

Just ahead of the July 4 holiday, Amazon released the latest version of Alexa Presentation Language (APL), the suite of tools designed to make it easier for developers to create “visually rich” skills for Alexa devices with screens. APL 1.1 — which is now in beta — comes after APL 1.0’s beta launch in October 2018.

APL 1.1 is available to Alexa developers in all locales and can be used on Echo Show, Echo Spot, and the new Echo Show 5 starting today. In the coming days, it’ll hit additional devices, including Fire TVs and Fire tablets.

So what’s new in APL 1.1? Well, it supports a novel command — AnimateItem — that developers can use to animate the position, scale, rotation, or opacity of any APL component or layout. AnimateItem can also be combined with Alexa Vector Graphics (a subset of the Scalable Vector Graphics standard) to create a range of interactive and “visually engaging” experiences. Devices that only support APL 1.0 will show static text instead.
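
APL documents and commands are plain JSON, so a short illustration may help readers who haven't seen the format. The Python sketch below builds an APL 1.1 document with an Image and attaches an AnimateItem command that fades the image in while sliding it in from the right. The field names (`componentId`, `duration`, `easing`, and `value` entries with `property`/`from`/`to`) follow our reading of the APL 1.1 reference, and running the command from the document's `onMount` is an assumption. The component id, image URL, and timings are hypothetical placeholders.

```python
import json

# Hypothetical id used to target the Image from the AnimateItem command.
LOGO_ID = "logoImage"


def fade_and_slide_in(component_id: str, duration_ms: int = 1000) -> dict:
    """Build an AnimateItem command that fades a component in and slides it left."""
    return {
        "type": "AnimateItem",
        "componentId": component_id,
        "duration": duration_ms,
        "easing": "ease-in-out",
        "value": [
            # Opacity goes from fully transparent to fully opaque.
            {"property": "opacity", "from": 0, "to": 1},
            # Slide from 200 dp to the right back to the resting position.
            {
                "property": "transform",
                "from": [{"translateX": 200}],
                "to": [{"translateX": 0}],
            },
        ],
    }


document = {
    "type": "APL",
    "version": "1.1",
    # Assumption: onMount runs these commands when the document is first shown.
    "onMount": [fade_and_slide_in(LOGO_ID)],
    "mainTemplate": {
        "items": [
            {
                "type": "Image",
                "id": LOGO_ID,
                "source": "https://example.com/logo.png",  # placeholder URL
                "width": "50vw",
                "height": "50vh",
            }
        ]
    },
}

print(json.dumps(document, indent=2))
```

Because the animation is just data attached to the document, a device that doesn't understand AnimateItem can still render the Image itself.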

APL 1.1 ships with an enhanced Alexa Design System, which Amazon says was re-engineered to better accommodate Alexa-enabled devices of varying screen sizes. It’s intended first and foremost to accelerate design work — for instance, if a developer imports Alexa-Styles into an APL document and uses the style textStyleBody to style text, the size of the text will automatically adapt based on the viewing distance typical for a device. Amazon says these sizes came out of “user experience research.”
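
A minimal sketch of the Alexa-Styles workflow described above, written as a Python function that emits the APL JSON. The `import` entry and the `textStyleBody` style name come from the description above. The package version string is an assumption and should be checked against Amazon's documentation. Note that the Text component sets no font size of its own. The imported style is what supplies a size suited to the device.

```python
import json

ALEXA_STYLES_VERSION = "1.1.0"  # assumed package version; verify in the APL docs


def body_text_document(message: str) -> dict:
    """Build an APL document that imports alexa-styles and styles a Text with textStyleBody."""
    return {
        "type": "APL",
        "version": "1.1",
        "import": [{"name": "alexa-styles", "version": ALEXA_STYLES_VERSION}],
        "mainTemplate": {
            "parameters": ["payload"],
            "items": [
                {
                    "type": "Text",
                    # No explicit fontSize: the imported style is expected to pick
                    # a size based on the device's typical viewing distance.
                    "style": "textStyleBody",
                    "text": message,
                }
            ],
        },
    }


print(json.dumps(body_text_document("Your order has shipped."), indent=2))
```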

Alongside the refreshed Alexa Design System come responsive components and templates that adapt to a device's mode, size, and shape. Examples include AlexaButton, a touchable and selectable element with the states needed on both touch and TV devices, and AlexaImage, which presents an image. Responsive templates, for their part, combine responsive components with APL UI elements (like ScrollView and Pager) into a pattern that fills the entire viewport. Among them are AlexaTextList, which displays a scrolling list of text items, and AlexaBackground, which renders splash screens and backgrounds.
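
To make the template idea concrete, here is a hedged Python sketch that emits an APL document using the AlexaTextList responsive template, along with a matching data source. The `alexa-layouts` package name, the `headerTitle` and `listItems` parameters, and the `primaryText` field per item reflect our understanding of the responsive templates and should be treated as assumptions. The version string, the `listData` key, and the sample entries are hypothetical.

```python
import json

ALEXA_LAYOUTS_VERSION = "1.1.0"  # assumed package version; verify in the APL docs


def text_list_document(title: str) -> dict:
    """APL document whose single item is the AlexaTextList responsive template."""
    return {
        "type": "APL",
        "version": "1.1",
        "import": [{"name": "alexa-layouts", "version": ALEXA_LAYOUTS_VERSION}],
        "mainTemplate": {
            "parameters": ["payload"],
            "items": [
                {
                    "type": "AlexaTextList",
                    "headerTitle": title,
                    # Data-bound to the data source below ("listData" is a hypothetical key).
                    "listItems": "${payload.listData.items}",
                }
            ],
        },
    }


def list_datasource(entries: list[str]) -> dict:
    """Data source sent alongside the document; each item is assumed to use primaryText."""
    return {"listData": {"items": [{"primaryText": e} for e in entries]}}


doc = text_list_document("Today's recipes")
data = list_datasource(["Shakshuka", "Pad thai", "Lemon bars"])
print(json.dumps({"document": doc, "datasources": data}, indent=2))
```

The skill supplies only the header text and the list contents. Fitting the list to a round Echo Spot, a small Echo Show 5, or a TV is left to the template.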

Amazon says it plans to continue releasing new responsive components and templates and to add support for new viewport profiles as new Alexa devices hit the market.

On the authoring and tooling side of things, APL 1.1 introduces the ability to select elements in the design surface and see them highlighted in the component hierarchy, theoretically making it easier to associate what’s visible in the design view with the corresponding component and update its properties. Amazon says that in the coming weeks, it plans to release another update to enable dragging and dropping of APL components in the APL authoring experience.

The APL 1.1 authoring tool now shares rendering, data binding, and expression evaluation code with the runtime that runs on Alexa devices, which Amazon says has "significantly" reduced the number of discrepancies between the authoring experience and what's seen on actual devices. Both the APL authoring experience and the skill test simulator also have a new viewport profile called Small Landscape Hub that supports the recently released Echo Show 5. According to Amazon, APL responses will be scaled automatically to fit the Echo Show 5's screen; alternatively, developers can manually optimize and test Alexa skills in the Small Landscape Hub on the simulator or with an Echo Show 5.

Amazon says that skills certified before July 3 will need to be resubmitted with the box enabling automatic scaling checked, even if their visuals had already been optimized.

Lastly, APL 1.1 expands the transformers that can be used with the SpeakItem and SpeakList commands, which have Alexa highlight and speak text blocks and lists of items. A new textToSpeech transformer converts plain text into speech. Starting today, the existing ssmlToSpeech transformer will only accept valid Speech Synthesis Markup Language (SSML) input.
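
The Python sketch below shows how a textToSpeech transformer and a SpeakItem command might fit together, under our reading of the APL data source format. An object data source declares a transformer that reads one property and writes its speech output to another. A Text component binds that output to its `speech` property, and SpeakItem tells Alexa to highlight and read the component. The `inputPath`, `outputName`, `transformer`, and `highlightMode` fields are assumptions based on the APL reference. The `article` key, property names, and component id are hypothetical.

```python
import json


def speakable_datasource(text: str) -> dict:
    """Object data source whose transformer turns plain text into speech."""
    return {
        "article": {  # hypothetical data source key
            "type": "object",
            "properties": {"bodyText": text},
            "transformers": [
                {
                    # Reads properties.bodyText and writes properties.bodySpeech.
                    "inputPath": "bodyText",
                    "outputName": "bodySpeech",
                    # textToSpeech takes plain text; ssmlToSpeech would require valid SSML.
                    "transformer": "textToSpeech",
                }
            ],
        }
    }


document = {
    "type": "APL",
    "version": "1.1",
    "mainTemplate": {
        "parameters": ["payload"],
        "items": [
            {
                "type": "Text",
                "id": "bodyText",
                "text": "${payload.article.properties.bodyText}",
                # The speech property holds the transformer output that SpeakItem plays.
                "speech": "${payload.article.properties.bodySpeech}",
            }
        ],
    },
}

# A command a skill could send (for example, in an ExecuteCommands directive)
# to have Alexa read the Text aloud while highlighting it line by line.
speak_command = {"type": "SpeakItem", "componentId": "bodyText", "highlightMode": "line"}

print(json.dumps({"document": document, "datasources": speakable_datasource("Hello")}, indent=2))
```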

Skills using APL include a CNBC stock organizer, Big Sky’s weather forecast app, public transit schedule tracker NextThere, travel app Kayak, and Food Network’s recipe sorter.

Notably, Facebook’s Portal and Portal+ devices incorporate elements of APL for hands-free visual content; their weather forecasts, shopping lists, and calendar events screens were designed with Amazon’s toolkit. And Sony smart TVs and Lenovo tablets support APL through the Alexa Smart Screen and TV Device SDK.

Last October, Adobe introduced a new user interface kit for APL in Adobe XD.