I’ve been an early adopter for as long as I can recall: At the age of five, I had an Atari 800 computer in my bedroom, and since then, I’ve reviewed thousands of new consumer tech products, often before they became widely available to the public. Yet I’ve largely passed on buying smart home devices for myself, initially because the components weren’t particularly “smart” and didn’t seem likely to be integrated into one unified system.

Now I’m wondering whether some of these products are becoming too smart and too integrated. Thanks to Amazon’s Ring and Key initiatives, there are now miniature networks of video doorbells recording small neighborhoods’ worth of surveillance footage, and locks that can be opened by Amazon contractors to deliver packages. Users have no idea who’s actually looking at these videos, but earlier this year, it was reported that Amazon granted Ukrainian staffers access to “every video recorded by every Ring camera around the world,” plus consumer-identifying details.

I’m generally not an alarmist, but I’m deeply uncomfortable with giving strangers access to either my house or my security video feeds. As much as I appreciate what Amazon has accomplished as a company, there’s no way I’m giving its employees — to say nothing of its temporary contractors — permission to let themselves into my home or monitor it, either from the inside or outside. Moreover, I have no desire to pay a monthly fee to send that data to Amazon’s servers for live or later review.

Yet after my recent move back to Southern California, where post-delivery package theft has become commonplace, I wanted to try a non-Amazon video doorbell. Having had positive experiences with Apple accessory maker Anker in the past, I purchased the company’s brand-new Eufy Security Doorbell, which packs modern video and AI technologies into a $160 package that I could install on my own.

Having skipped the first generations of video doorbells, I’ve been floored by the Eufy solution’s performance. Installation wasn’t completely trouble-free, as it turned out to require a slightly more powerful doorbell power transformer than the cobwebbed one that was here before, but it wasn’t horribly difficult, either. And what I’ve gained from the installation is incredible.

Anker uses on-device AI to identify every person who walks near the Security Doorbell, and in my experience, its facial and human body recognition software has been spot-on. I set up the software with a deliberately limited motion detection area, enabling me to reduce unwanted alerts triggered by people walking past my door. Without final calibration, the Eufy camera picked up people just outside the necessary area, so I restricted it a little more, and now only get alerts when a person is actually approaching the door. Cars, animals, and other passersby don’t trigger it.

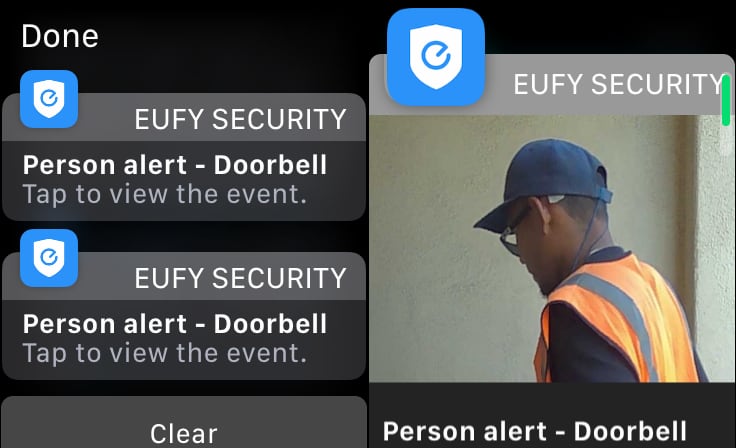

The alerts are amazing. I generally see a photo — a completely clear photo, thanks to either HDR or night vision — of the face of each person who approaches the door, whether it’s someone delivering a package, a family member, or a solicitor. These photos appear instantly on my iPhone and Apple Watch whether I’m at home or a hundred miles away, so while the Security Doorbell includes a chiming bell, I typically get the alert before anyone even presses the button to ring it.

Better yet, if I want more information on the person who’s there, I can get it. Tapping the notification on a phone lets me see either recorded video of the person’s approach and departure, or live video of what’s currently happening at the door. Without manual intervention, Anker’s AI instantly begins recording at up to 2,560 by 1,920 resolution to integrated storage within the Security Doorbell itself, so you can zoom in on and review fine details to an extent that was impossible when video doorbells first hit stores years ago.

Anker says that your Eufy data is kept private with a combination of AES-256 encryption and 4GB of local storage, which sounds a lot better to me than broadcasting everything to god knows where and to whom. But it seems like there’s still a lot of data being transmitted by the Security Doorbell over Wi-Fi, and Anker’s website doesn’t explain why. When I went to the Eufy site to look for FAQs and couldn’t find them, I was ironically directed to its AI-powered service robot, which, as shown below, wasn’t any help at all.

As a consumer, I’m thrilled by what Anker’s camera delivers for the price. But as a longtime technology journalist, I’m increasingly concerned about the privacy implications of these “security” technologies, and the double-edged sword of relying on AI to power consumer innovations that could be used for less positive purposes.

It’s one thing if on-device AI alerts me to someone who’s approaching my front door for whatever reason — a family member returning home, a person delivering a package, or a person trying to steal a package. I’m comfortable with this AI technology being used by individuals for at-home security purposes, and with footage that’s stored locally. The per-incident option to share individual videos with the police to help catch criminals is fine, too.

My concern starts when the same personal AI technology is aggregated so that multiple feeds can be simultaneously accessed by multiple people — or companies, or government agencies — at any time, with no specific need for that access. This is how entire neighborhoods will start to get monitored, then city blocks, then cities, then states and countries.

It goes without saying that aggregation of personal content could quickly become dangerous. Between unread “terms of service” and customers who are willing to opt in to automatically sharing their “security camera” videos without considering the ramifications, I can easily imagine friendly neighbors accidentally empowering the next George Zimmerman to confront the next Trayvon Martin just because he’s walking through a “monitored” neighborhood at night.

In a prior generation, the sheer amount of video data from 10 or 20 cameras would have been too staggering for any individual or “neighborhood watch” group to process. Now, AI will be doing most of the monitoring work to determine what “needs” to be seen and recorded at a given moment. Recent history suggests that there are already far too many dumb vigilantes with guns just waiting for excuses to use them. AI-assisted aggregated “security” footage may present them with the opportunities they’ve been looking for.

For now, I’m content to have my Security Doorbell’s AI watching my front door for family, friends, and strangers. But I’m not sure that the same artificial intelligence is safe to put in the hands of users with limited real intelligence, or people whose surveillance motives aren’t personally focused. There may be no easy answer to regulating this, but in the interest of protecting society as a whole from individual bad actors, limiting the corporate aggregation of public video might be a good place to start.