
A few weeks ago, a brief Twitter spat broke out between Rep. Rashida Tlaib (D-MI) and the Detroit Police Department over the department's use of facial recognition software. It ended with an invitation for Tlaib to visit the Real Time Crime Center headquarters. I predicted here that if the congresswoman took them up on the offer, it would make for a fine bit of political theater. This week she did, and it was.

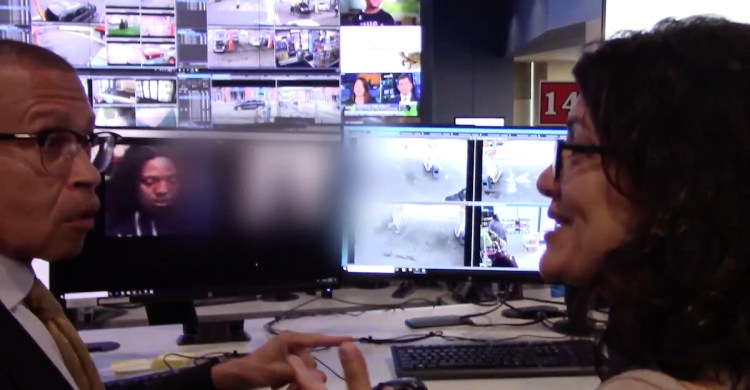

But the rather bizarre visit, which was filmed by George Hunter of the Detroit News, smacked of a missed opportunity on Tlaib’s part. In a tour personally hosted by Detroit police chief James Craig, she had a chance to get more details about how Detroit police are using facial recognition, ask them difficult questions about the program, and take it all back with her to Washington, D.C.

Instead, she square-pegged a few important talking points into the conversation and appeared uninterested in a dialogue.

The visit lasted over an hour, according to a source who was present. The Detroit News' video, which was edited down for length, begins with Tlaib chatting with Chief Craig in a busy hallway at the Real Time Crime Center. She spots the camera and says, "Are you facial recognizing me right now?"


It seems intended as a half joke, but she appears genuinely nervous about the camera's presence. "Who are you and why are you videotaping me?" she asks. She's smiling, but it appears to be a serious question. The videographer identifies himself as a journalist. No other journalists are present, and his is the only camera aside from the Detroit PD's, yet her initial question is oddly aggressive. She comes off as nervous and defensive.

Craig shows her the wall of live camera feeds — traffic, grocery stores, gas stations, and the like — and assures her that there is no facial recognition occurring on any of them. Then the video cuts to a desk with a series of monitors, where a crime analysis supervisor stands ready to show how the facial recognition is applied. Craig begins to expound on the supervisor’s credentials when Tlaib cuts in — “So you are doing facial recognition,” she says. “Absolutely we are,” responds Craig. “I’m going to show you.”

Before long, instead of getting the promised demonstration, Tlaib veers (slightly and politely) off-topic, bringing up past interactions she and Craig have had and voicing concerns about the whole program that, for her, have only grown over time. He's impatient to show her the analysis. They talk over each other. He seems annoyed.

The scene cuts to Craig showing Tlaib a side-by-side of two images that the system flagged using its facial recognition software. He appears to have chosen this example deliberately, to illustrate how the automated system can get things wrong and why it needs trained human workers to intervene. Craig begins to explain how the system made an obvious error, and Tlaib interrupts him to bring up error rates for people of color, and especially women of color, which is the very thing he's trying to illustrate. He is in the middle of telling her that the system has a problem misidentifying people, using an example that involves two people of color, one of whom is a woman.

Then Tlaib tosses in a non sequitur, saying "See if you can give us some of our money back until you fix it," apparently referring to federal funding of the program. "Nnno," Craig replies.

The video continues, and Craig is able to explain further that the department knows the computer isn’t 100% accurate, which is why they have trained human analysts, and facial recognition is just one tool they use to help find suspects. In response to Tlaib’s question — “Is this solely what’s used to prosecute someone?” — he answers an emphatic “No. Absolutely not. Never.”

Then she seems to lose the narrative: "And also, analysts need to be African Americans. Not people that are not." At best, the optics are poor, given that she's speaking directly to two black men. Craig was offended by the remark, as he made clear later in a TV interview in which he called it racist.

That remark is the angle numerous publications ran with after the Detroit News posted its story and video.

Tlaib apparently didn’t come to listen, which is too bad, because there was a lot for everyone to learn. Instead, we got awkward exchanges, political gaffes, and acrimony after the fact.

To Tlaib's credit, she did represent the concerns of her majority-African American district. She emphatically pointed out that facial recognition software has endemic racial problems and is ripe for abuse beyond law enforcement, in areas such as housing. She also raised concerns about analyst bias, given that people of color already suffer the indignity of being frequently misidentified by people of other races. (Her anecdote about people on the House floor confusing Rep. Elijah Cummings (D-MD) and Rep. John Lewis (D-GA) with one another should be deeply upsetting to everyone.)

Tlaib later took to Twitter to share links to research she said she was alluding to during the visit. The whole thread is worth a look. Links she shared include research on facial recognition systems inaccurately recognizing people who are not white males, how face recognition predictions are more accurate for people who are members of the same race, and how people tend to less accurately identify and less accurately remember faces of people of other races.

But in the moment, instead of challenging Craig with this startling research, she fumbled. She missed a chance to potentially make an impact, or at least learn more, around a critical issue for which she is legislating. We need our leaders and lawmakers to do better.

Detroit is one of two cities in the country known to be testing live facial recognition software: systems that can track an individual across a network of cameras around the city and quickly identify them. This shift away from traditional search methods is a big part of the urgency building around facial recognition worldwide.

The meeting between Tlaib and Craig could have been the kind of crucial discussion between opposing viewpoints that we’ll need to build bridges and avoid misapplications that negatively impact society, particularly the poor and underrepresented. Instead we got political theater that may have done more harm than good.