
What’s the easiest way to teach a robot arm to stack blocks? That’s the question researchers at DeepMind, a subsidiary of Google parent company Alphabet, set out to answer in a recent study. AI solutions to the problem invariably require lots of data, which in robotics can be tough to come by. And even when there’s enough data to train a machine learning model, that data is nearly useless without human annotation.

The DeepMind scientists found a way to make it work, though, thanks to a system that learns from observation in a simulated environment (MuJoCo) and transfers what it learns to the real world. In a preprint paper detailing their research, they claim their approach can direct a vision-based agent to stack cubes with only five hours of robot data for adaptation.

The work follows on the heels of a related paper from OpenAI last week, which investigated methods of transferring skills from agents in simulation to a real-world robot in order to solve a Rubik’s cube task. Unlike that study, however, which employed a sophisticated robotic hand for object manipulation, the DeepMind team uses a basic Sawyer grasping arm.

The team proposes a two-step procedure for adaptation. In the first step, a simulated environment is used to learn a policy that solves the cube-stacking task from synthetic images and proprioception (the robot’s awareness of the position and movement of its own arm). Two agents train simultaneously: a state-based agent with access to the simulator state and a vision-based agent that uses raw pixel observations. The state-based agent provides the data used for reinforcement learning of the vision-based agent.
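To make the data flow in that first step concrete, here is a minimal Python sketch of two agents sharing one experience buffer. It is an illustration under stated assumptions, not the paper's implementation: the names `Transition`, `SharedReplay`, `collect_with_state_agent`, `state_agent_input`, and `vision_agent_input` are hypothetical, the "simulator" is just random numbers, and no learning update is shown.

```python
import random
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass(frozen=True)
class Transition:
    """One simulated step, storing both views of the same moment (hypothetical layout)."""
    sim_state: Tuple[float, ...]   # privileged simulator state, e.g. cube position
    pixels: Tuple[int, ...]        # tiny stand-in for a rendered camera image
    proprio: Tuple[float, ...]     # arm joint readings
    action: int
    reward: float


class SharedReplay:
    """A bounded buffer that both agents read from."""

    def __init__(self, capacity: int = 10_000) -> None:
        self._items: Deque[Transition] = deque(maxlen=capacity)

    def add(self, transition: Transition) -> None:
        self._items.append(transition)

    def sample(self, batch_size: int, rng: random.Random) -> List[Transition]:
        k = min(batch_size, len(self._items))
        return rng.sample(list(self._items), k)

    def __len__(self) -> int:
        return len(self._items)


def state_agent_input(t: Transition) -> Tuple[float, ...]:
    # The state-based agent sees the privileged simulator state plus proprioception.
    return t.sim_state + t.proprio


def vision_agent_input(t: Transition) -> Tuple[Tuple[int, ...], Tuple[float, ...]]:
    # The vision-based agent sees only pixels and proprioception, never sim_state.
    return t.pixels, t.proprio


def collect_with_state_agent(buffer: SharedReplay, steps: int, rng: random.Random) -> None:
    # Assumption: random values stand in for a real simulator and a real policy.
    for _ in range(steps):
        buffer.add(Transition(
            sim_state=(rng.random(), rng.random(), rng.random()),
            pixels=tuple(rng.randrange(256) for _ in range(4)),
            proprio=(rng.random(), rng.random()),
            action=rng.randrange(4),
            reward=float(rng.random() > 0.9),
        ))


rng = random.Random(0)
replay = SharedReplay()
collect_with_state_agent(replay, steps=8, rng=rng)

# The vision-based agent trains on experience the state-based agent gathered.
batch = replay.sample(4, rng)
vision_batch = [vision_agent_input(t) for t in batch]
```

The point of the sketch is only the separation of views: every stored step carries both the simulator state and the image, so the agent that learns quickly from state can supply experience to the agent that must eventually run from pixels alone.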

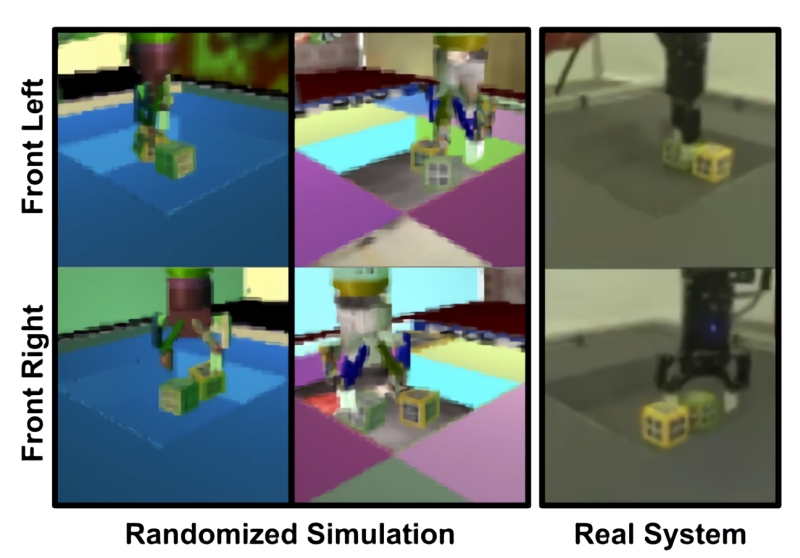

Above: Left and right pixel observations in both real and domain randomized simulated environments.

In the second step, unlabeled real image sequences help to adapt the state representation to the real domain, providing a common objective that applies in both simulation and reality. The researchers say this step mitigates the negative effects of the gap between simulation and the real robot by leveraging unlabeled data collected by the simulation-trained agent.
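The article does not spell out the objective used in this second step, so the following Python sketch substitutes a generic contrastive next-step loss to show why such an objective needs no labels. Everything here is an assumption for illustration: the function names, the squared-distance scoring, and the toy two-dimensional embeddings are not taken from the paper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def contrastive_next_step_loss(predicted: List[Vector], actual: List[Vector]) -> float:
    """Mean cross-entropy of identifying each true next-frame embedding.

    predicted[i] is a model's guess at the embedding of the frame that follows
    frame i; actual[i] is the embedding of the frame that really followed.
    The other entries of `actual` act as negatives. Only frame order is used,
    so the same loss can be computed on simulated or real image sequences.
    """
    total = 0.0
    for i, guess in enumerate(predicted):
        # Closer candidates get higher scores.
        logits = [-squared_distance(guess, candidate) for candidate in actual]
        peak = max(logits)
        # Numerically stable log-sum-exp.
        log_norm = peak + math.log(sum(math.exp(v - peak) for v in logits))
        total += log_norm - logits[i]
    return total / len(predicted)


# Toy embeddings of three "next frames" (hypothetical values).
actual_next = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]

good_loss = contrastive_next_step_loss(actual_next, actual_next)
bad_loss = contrastive_next_step_loss(
    [(10.0, 0.0), (0.0, 10.0), (0.0, 0.0)], actual_next
)
```

Because the supervision signal comes from the ordering of frames rather than from human annotation, a loss of this shape can be minimized on unlabeled footage from the real robot and on simulated rollouts alike, which is the property the second step relies on.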

In experiments, the researchers report that their “sim-to-real” transfer learning method yielded a “clear improvement” over domain randomization and other self-supervised adaptation techniques. On average, it successfully stacked the cube 62% of the time — far better than the baseline’s 12% success rate.

“Our agent interacts with the real world from vision which makes our method applicable to a large set of manipulation tasks,” wrote the coauthors of the paper. “The cube stacking task emphasizes the generality of our approach for long horizon manipulation tasks. Most importantly, [it’s] able to make better use of the available unlabeled real world data resulting in higher stacking performance.”