Intel has revealed its long-awaited graphics processing unit (GPU) architecture, which would be the heart of graphics chips designed to handle heavy data and artificial intelligence loads in datacenters.

The new Ponte Vecchio GPUs would compete with rival offerings from Nvidia and Advanced Micro Devices. And eventually, the Intel architecture could serve as the foundation for consumer GPU chips from Intel in the future. Intel made the announcement ahead of the Supercomputing 2019 event in Denver.

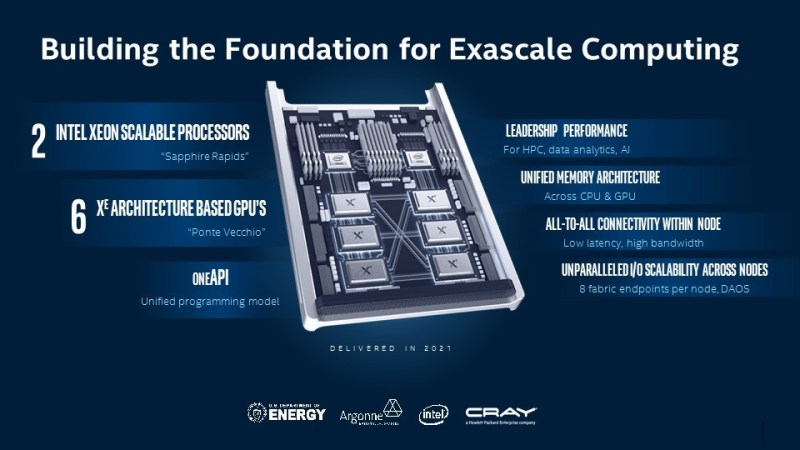

Intel is targeting the high-performance computing (HPC) and AI segments with both the GPU and a new software initiative that aims to shift away from “single-architecture, single-vendor” programming models. (That’s a veiled reference to Nvidia’s CUDA programming platform for getting GPUs to do non-graphics processing tasks). A new supercomputer design dubbed Aurora would have six Ponte Vecchio GPUs.

Asked about the leaks last week of Intel’s GPU plans, Jensen Huang, CEO of rival Nvidia, said in an interview with VentureBeat, “I’m anxious to see it, just like you probably are. I enjoy looking at other people’s products and learning from them. We take all of our competitors very seriously, as you know. You have to respect Intel. But we have our own tricks up our sleeves. We have a fair number of surprises for you guys as well. I look forward to seeing what they’ve got.”

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

Intel describes oneAPI industry software initiative as delivering a unified and simplified programming model for application development across heterogeneous processing architectures, including CPUs, GPUs, FPGAs, and NNPs.

The launch of oneAPI represents millions of engineering hours in software development, and Intel says it marks a game-changing evolution from today’s proprietary programming approaches to an open, standards-based model for cross-architecture developer engagement and innovation.

“HPC [high-performance computing] and AI workloads demand diverse architectures, ranging from CPUs, general-purpose GPUs, and FPGAs, to more specialized deep learning NNPs which Intel demonstrated earlier this month,” said Raja Koduri, chief architect at Intel, in a statement. “Simplifying our customers’ ability to harness the power of diverse computing environments is paramount, and Intel is committed to taking a software-first approach that delivers unified and scalable abstraction for heterogeneous architectures.”

Patrick Moorhead, analyst at Moor Insights & Strategy, said in an email, “The industry wants more competition in data center GPUs so there’s no question about the need for it. Intel has never actually tried to build a high-end discrete GPU with a frame buffer, so while there may have been some previous failed attempts at datacenter graphic devices with MIC, those weren’t GPUs architecturally.”

Moorhead added, “OneAPI is designed to provide developers simplicity to program across Intel GPUs, CPUs, FPGAs, and Movidius accelerators. It’s an abstraction layer that enables reuse of code, minimizing the need for bespoke acceleration work as it is today. The industry wants this in circumstances when code reuse is needed more than different code for each accelerator. I am pleased to see until delivering on this as the market wants it.”

A developer-centric approach to heterogeneous computing

Above: Intel’s Aurora would have six Ponte Vecchio GPUs.

Intel said the oneAPI initiative will define programming for an increasingly AI-infused, multi-architecture world. The open programming experience lets developers build on the architecture of their choice without compromising performance and eliminating the complexity of separate codebases, multiple-programming languages, and different tools and workflows, Intel said. OneAPI preserves existing software investments with support for existing languages while delivering flexibility for developers.

OneAPI includes both an industry initiative based on open specifications and an Intel beta product. The oneAPI specification includes a direct programming language, powerful APIs, and a low-level hardware interface. Intel’s oneAPI beta software provides developers a portfolio of developer tools that include compilers, libraries, and analyzers, packaged into domain-focused toolkits.

The initial oneAPI beta release targets Intel Xeon Scalable processors, Intel Core processors with integrated graphics, and Intel FPGAs, with additional hardware support to follow in future releases. Developers can learn more about oneAPI at software.intel.com/oneAPI or download and test drive tools in the Intel oneAPI DevCloud.

Regarding the software barriers to adoption, Huang said, “In the area of training, the barrier to adoption is very high. People just don’t — why would they trust the solution? Why would they trust a system to scale out to 100 million, 200 million, sight unseen? It’s just too complicated, I think.”

Data-centric strategy for AI/HPC convergence

The foundation of Intel’s data-centric strategy is the Intel Xeon Scalable processor, which today powers over 90% of the world’s Top500 supercomputers. Intel asserts that Xeon Scalable processors are the only x86 CPUs with built-in AI acceleration optimized to analyze the massive data sets in HPC workloads.

At Supercomputing 2019, Intel unveiled a new category of general-purpose GPUs based on Intel’s X e architecture. Code-named Ponte Vecchio, this new high-performance, highly flexible discrete general-purpose GPU is architected for HPC modeling and simulation workloads and AI training.

Ponte Vecchio will be manufactured on Intel’s 7-nanometer technology and will be Intel’s first Xe-based GPU optimized for HPC and AI workloads. Ponte Vecchio will leverage Intel’s Foveros 3D and EMIB packaging innovations and feature multiple technologies in-package, including high-bandwidth memory, Compute Express Link interconnect, and other intellectual property.

Building the foundation for exascale computing

Intel’s data-centric silicon portfolio and oneAPI initiative lays the foundation for the convergence of HPC and AI workloads at exascale within the Aurora system at Argonne National Laboratory.

Aurora will be the first U.S. exascale system to leverage the full breadth of Intel’s data-centric technology portfolio, building upon the Intel Xeon Scalable platform and using Xe architecture-based GPUs, as well as Intel Optane DC Persistent Memory and connectivity technologies. The compute node architecture of Aurora will feature two 10-nanometer-based Intel Xeon Scalable processors (code-named Sapphire Rapids) and six Ponte Vecchio GPUs. Aurora will support over 10 petabytes of memory and over 230 petabytes of storage.

Aurora will leverage the Cray Slingshot fabric to connect nodes across more than 200 racks.