Four key events set the pace for each year’s smartphone camera innovations: Samsung’s February Galaxy Unpacked, Apple’s September iPhone event, Google’s October Made by Google event, and Qualcomm’s December Snapdragon Tech Summit. While the first three events focus entirely on products for the current year, Qualcomm’s is uniquely about what’s coming up next, though other announcements sometimes obscure the camera news.

That didn’t happen at this year’s Tech Summit, as camera innovations were some of the biggest tentpole features of the new flagship Snapdragon 865 processor. On day one, the company promised the chip will deliver “gigapixel image processing” and 8K video capture capabilities, and on day two, it promised that real Snapdragon 865 devices will arrive in early 2020, with a 200-megapixel camera appearing in actual smartphones at some point next year.

On stage and in subsequent demonstrations, the company’s point was clear: Cameras are going to be taking some very big steps forward next year, and since Qualcomm chips power most of the best Android phones, photography and videography are going to be a major focus of 2020’s Android devices. Since Apple is never far behind, we can expect big things from next year’s iPhones, as well.

The (great) status quo: hardware

Much like the ongoing battles between standalone camera makers Canon, Nikon, and Sony, there’s no single persistent “winner” in the smartphone camera business. DXOMARK, a camera performance rating that is begrudgingly accepted by most of the major players, picks a new “best smartphone camera” so frequently that the award is all but meaningless, though there’s still plenty of competition for the bragging rights.

Every year, Apple’s flagship phone cameras have fewer megapixels than top rivals, emphasizing ease of use and pixel quality over quantity. This year’s iPhone 11 sports twin rear cameras (ultra-wide and wide), while iPhone 11 Pro models have three cameras, adding a 2X zoom lens to the others; each has 12-megapixel resolution. Android phones are spread across a wide variety of options ranging from high-megapixel cameras to more Apple-like or cheaper budget approaches. Some phones have one 48- or 64-megapixel sensor and up to four rear cameras; most use two or three lower-megapixel sensors, like Apple.

Above: Using a special photographer’s case, Moment’s new add-on 14mm Fisheye lens gives older iPhones the ability to snap wide-angle images like iPhone 11 models.

While iPhones and Android phones start with solid cameras, they’re increasingly capable of being upgraded after purchase with tools that bridge the gaps between consumer, prosumer, and professional applications. Moment’s add-on lenses, for example, give older and lower-end iPhones the ability to gain the ultra wide-angle perspective of new iPhone 11 models, as well as the 2X zoom capabilities of high-end iPhones. The company sells a $6 Pro Camera app that can be used to shoot quality-optimized photos and videos with its lenses, as well as to correct lens distortions.

AI-assisted image stabilization and subject tracking automation are also becoming critical features in both smartphone photography and videography. Some phones (and drones) use a combination of motion sensor data and jitter-resistant cameras to minimize the blur that comes from shaky hands and the bounces that can normally be seen as people move and walk when shooting videos. Smartphone cameras are also becoming increasingly capable of detecting multiple people and keeping the focus sharp on their faces.

Above: DJI’s Osmo Mobile 3.

These features can also be added — or dramatically upgraded — after the fact using gimbals and companion software. Drone maker DJI sells a $120 handheld gimbal called Osmo Mobile 3 (shown with optional $20 tripod) that uses its own balancing system and motion data to keep Android phones and iPhones stable when you’re walking and shooting video. A dedicated button spins your phone from landscape to portrait mode, while other controls place zoom toggles, an orientation joystick, and a record button within finger’s grasp.

Above: DJI Mimo app.

Available for both Android and iOS, DJI’s Mimo app adds a machine learning-powered feature that’s even more impressive: subject tracking. You can use your finger to draw a box around a subject (generally human), and the gimbal will automatically rotate your device to keep that subject in frame. If you use the feature with the front-facing camera, you can trust the software to film your own face as you move the phone around in 3D space.

Computational photography

It’s increasingly apparent that computational photography (the combination of multiple images and related sensor data) is going to be the next big thing in smartphone cameras. Google and Apple have spent the past several years leveraging their phones’ computer-like chips to produce real-time photo and video results that dedicated cameras can’t offer, and the output is increasingly impressive. Perhaps the most significant are Google’s Night Sight and Apple’s Night Mode photos, which can make dimly lit scenes look almost as if they were snapped in daylight.

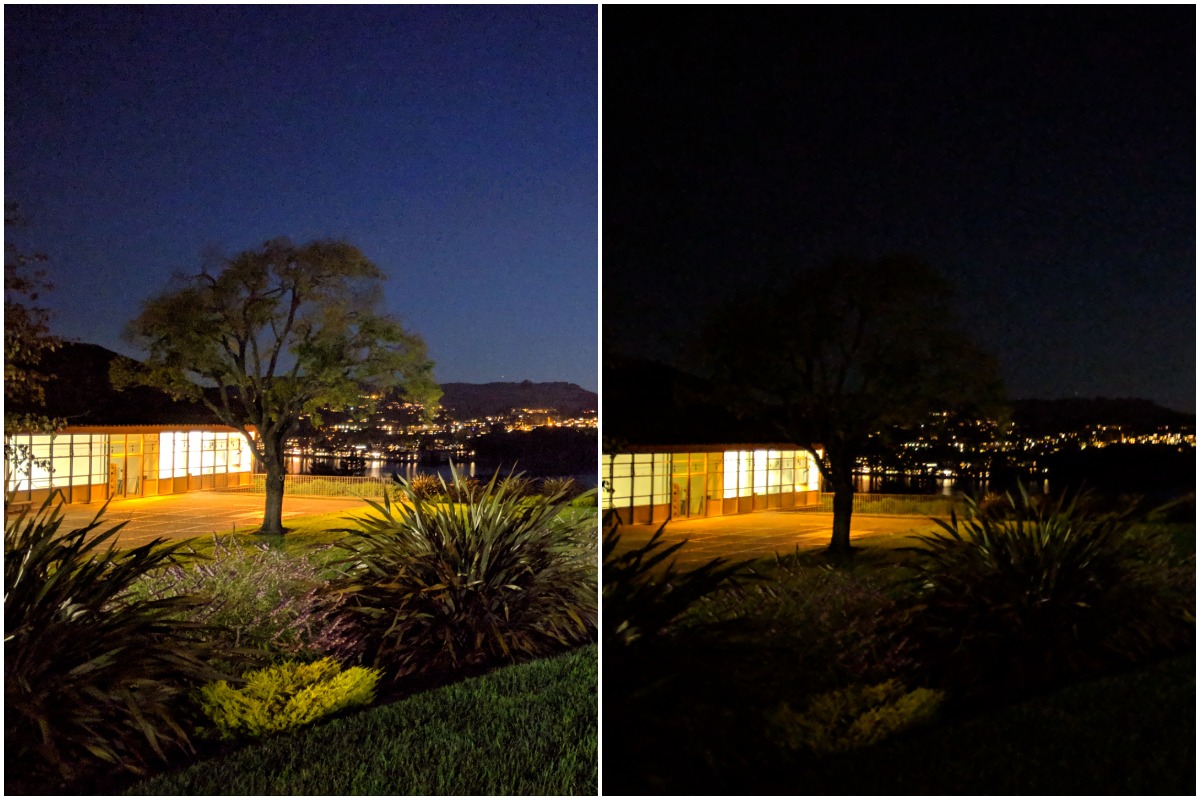

Above: Google’s Night Sight enabled on the left; disabled on the right.
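To give a rough sense of why combining multiple images helps in low light, here is a minimal Python sketch of frame averaging. It is an illustration of the general idea only, not Google’s or Apple’s actual pipeline, which also has to align frames and handle motion. The scene values, the noise level, and the function names (`capture_noisy_frame`, `merge_frames`, `rms_error`) are all assumptions made up for this example.

```python
import random


def capture_noisy_frame(true_scene, noise_sigma, rng):
    """Simulate one short exposure: the true brightness plus random sensor noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in true_scene]


def merge_frames(frames):
    """Average the same pixel position across every frame."""
    return [sum(values) / len(values) for values in zip(*frames)]


def rms_error(frame, true_scene):
    """Root-mean-square difference between a frame and the true scene."""
    squared = sum((a - b) ** 2 for a, b in zip(frame, true_scene))
    return (squared / len(true_scene)) ** 0.5


rng = random.Random(0)            # fixed seed so the run is repeatable
true_scene = [20.0] * 10_000      # hypothetical flat, dim patch of a night scene
frames = [capture_noisy_frame(true_scene, 10.0, rng) for _ in range(16)]

single_error = rms_error(frames[0], true_scene)
merged_error = rms_error(merge_frames(frames), true_scene)
print(f"one frame: {single_error:.2f}, 16 frames merged: {merged_error:.2f}")
```

With independent random noise, averaging 16 frames should cut the error to roughly a quarter of a single frame’s, since noise falls with the square root of the number of frames merged.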

Apple also added a feature to the iPhone 11 and iPhone 11 Pro called Deep Fusion, which automatically and instantly combines pieces from multiple pictures to create a single photo with pixel-level texture details that look as if they came off of heavy, expensive DSLRs. Skin, fabric, and other surfaces that might look soft are instead rendered with more precision.

Above: Apple’s Deep Fusion computational photography feature turned off and on.

The next round of machine learning-aided computational photography will be seen in both photos and videos. At the Snapdragon Tech Summit, Qualcomm showed off a Snapdragon 865 AI-enabled “image segmentation” feature powered by Morpho software.

As illustrated by the photo above, next-generation smartphones will be able to distinguish between multiple types of facial hair, clothing, objects, and backgrounds in real time. Morpho’s technology enables filters to be differentially applied to “each object of interest” to either improve the image’s quality or add special effects. The result will be visual enhancements similar to Snapchat’s AR filters, only at much higher resolutions — and with more serious applications.
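The idea of applying a different filter to each segmented object can be sketched in a few lines of Python. This is a toy illustration, not Morpho’s software or API: the tiny grayscale image, the hand-written label map, and the `apply_filters_by_segment` function are all hypothetical, and in a real phone the labels would come from a neural network running on the chip.

```python
def apply_filters_by_segment(image, labels, filters):
    """Apply a per-label filter to each pixel; unlabeled segments pass through unchanged."""
    def identity(value):
        return value

    return [
        [filters.get(label, identity)(pixel) for pixel, label in zip(image_row, label_row)]
        for image_row, label_row in zip(image, labels)
    ]


# Hypothetical 2x3 grayscale image (0-255) and a matching segmentation map.
image = [
    [100, 200, 50],
    [30, 120, 240],
]
labels = [
    ["background", "person", "person"],
    ["background", "background", "person"],
]

# Darken the background and brighten the person, clamping at 255.
filters = {
    "background": lambda value: value // 2,
    "person": lambda value: min(255, value + 40),
}

result = apply_filters_by_segment(image, labels, filters)
print(result)  # [[50, 240, 90], [15, 60, 255]]
```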

Coming soon: 200-megapixel cameras, 8K videos, and 3D scanning

Although I’m rarely excited by innovations that have no practical purpose or likelihood of becoming available soon, I’m extremely enthusiastic about new technologies that will actually move the needle for consumers. So when I say that I’m more than cautiously optimistic about some of the innovations that will be coming to smartphone cameras in 2020, that’s because I think we’re about to see some seismic changes next year.

Above: Xiaomi president Bin Lin walks in front of only a portion of a zoomed-in 108-megapixel image, displayed on a gigantic 4K screen.

One of the biggest changes will be a rise in ultra-high-resolution camera sensors. Speaking about next-generation Mi Mix phones at Qualcomm’s Snapdragon Tech Summit, Xiaomi president Bin Lin showed off the potential of a 108-megapixel sensor (nine times the resolution of current iPhone cameras) by walking in front of a fully zoomed-in section of a gigantic image of a Chinese city, then zooming out to reveal the whole picture. Even on an auditorium-sized 4K display, the blown-up image looked good: incredibly detailed, if not richly colored.

Above: Xiaomi president Bin Lin stands next to the zoomed-out 108-megapixel image.

Assuming that it only has a 1X optical zoom lens, this 108-megapixel sensor could be cropped to the framing of a 3X zoom lens and still retain about 12 megapixels, the full resolution of a current iPhone photo. And Qualcomm is promising that there will be actual 200-megapixel sensors inside Snapdragon 865 phones in 2020, nearly twice that pixel count.
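The megapixel comparisons here come down to simple arithmetic, which the short Python sketch below works through. It is an idealized calculation that ignores lens sharpness, noise, and pixel binning, and the function names are my own labels rather than anything from Xiaomi or Qualcomm.

```python
def resolution_ratio(megapixels_a, megapixels_b):
    """How many times more pixels sensor A records than sensor B."""
    return megapixels_a / megapixels_b


def cropped_megapixels(sensor_megapixels, zoom_factor):
    """Megapixels left after cropping to the framing of a zoom_factor-X lens.

    Zoom is a linear magnification, so the cropped area (and the pixel
    count) shrinks by the square of the zoom factor.
    """
    return sensor_megapixels / zoom_factor ** 2


print(resolution_ratio(108, 12))             # 9.0  (108MP vs. a 12MP iPhone camera)
print(round(resolution_ratio(200, 108), 2))  # 1.85 (200MP vs. 108MP)
print(cropped_megapixels(108, 3))            # 12.0 (108MP cropped to 3X framing)
```

The squared term is why digital cropping runs out of pixels quickly: doubling the zoom factor leaves only a quarter of the original pixel count.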

Lens quality will be critical to making the most of these high-resolution sensors. With the right lens, even a lower-resolution sensor can capture beautiful, colorful images while rendering details somewhat impressively. As shown above, Moment’s 10X macro lens accessory illustrates how bringing something small into focus can reveal details that might not even be apparent to the naked eye.

This isn’t to say that every camera sensor’s pixels are created equal — they’re not — or that devices snapping 200-megapixel photos will offer anything close to the speed and other capabilities of devices (such as iPhones) with fewer pixels to handle. Moreover, we don’t know how many devices will include these super cameras, or whether Apple will finally raise its own megapixel counts in response. But the boost to the Snapdragon 865’s image processing pipeline points to continued advancements of various sorts over the next year.

Another broad category will be the transition of high-end smartphones into professional or near-professional video recording tools. Qualcomm has already said that Snapdragon 865-powered phones will be capable of recording videos at 8K resolutions — higher-res than almost any television, and higher than many movie theater projectors — as well as creating Dolby Vision-certified 4K HDR videos, firsts for any consumer device. The 865-series phones will be able to create 120fps 4K videos or 30fps 8K videos; midrange 765 phones will only be able to create 4K videos.
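A quick calculation shows how much data those two top video modes involve. The Python sketch below assumes that “4K” means the consumer 3840 x 2160 frame size and “8K” means 7680 x 4320; the article’s sources don’t spell out exact pixel dimensions, so treat those numbers, and the `pixels_per_second` helper, as assumptions.

```python
# Assumed consumer frame sizes in pixels (width, height).
RESOLUTIONS = {
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixels_per_second(resolution, frames_per_second):
    """Raw pixels the camera pipeline must handle each second of video."""
    width, height = RESOLUTIONS[resolution]
    return width * height * frames_per_second


print(pixels_per_second("4K", 120))  # 995328000
print(pixels_per_second("8K", 30))   # 995328000
```

Under those assumptions, the two modes are the same workload: an 8K frame has four times the pixels of a 4K frame, and 120fps is four times 30fps, so each adds up to just under a billion pixels every second.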

Above: An anamorphic lens enables videos to be recorded at a theatrical 2.40:1 aspect ratio, complete with cinematic lens flares.

The result will be smartphones that further leapfrog the capabilities of standalone camcorders, a category already in serious decline, and a greater use of pocket devices to shoot entire commercials and parts of TV shows and films — something Apple has emphasized over the past few years. Again, add-on lenses and gimbals will grow in importance for these cinematic endeavors, dramatically reducing the need for more expensive, heavy equipment — and enabling a much wider range of people to get in on creating professional-caliber videos.

One thing that no one’s exactly sure about yet is the value of depth-sensing cameras in future smartphones, but it’s clear that they’re going to play an important role in devices next year. If you’re unfamiliar with the concept, imagine a camera that focuses on 3D rather than 2D, rapidly calculating the device’s distance to multiple objects — and if possible, the background. That depth information can be used to scan real items into 3D versions, create 3D representations of scenes, and map real spaces for “augmented reality” insertion of digital items.

There are different ways to gather depth data, including Google’s latest computational photography tricks, which require only a single regular camera, but using a proper depth sensor (such as the time-of-flight sensors on some high-end Samsung and LG phones) makes a huge difference. Samsung has already shown off an app that can scan a real teddy bear and turn it into an animated 3D object a person can control.

Supply chain rumors have suggested that Apple will be adding a laser-powered depth-sensing camera to the backs of 2020 iPhones, enabling them to scan objects within a 15-foot range. While current iPhones (and iPad Pros) have depth-sensing front TrueDepth cameras, those only work at sub-2-foot distances, so the new design should dramatically expand the number and range of objects iPhones will be able to scan.
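For a feel of what a time-of-flight sensor has to measure, the Python sketch below uses the basic relation behind the technique: light travels to the object and back, so distance is the speed of light multiplied by half the round-trip time. This is a simplified illustration, not a description of any particular phone’s sensor; many real sensors measure the phase shift of modulated light rather than timing a single pulse, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
METERS_PER_FOOT = 0.3048


def distance_from_round_trip(round_trip_seconds):
    """Distance to an object given how long light took to go out and come back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def round_trip_for_distance(distance_meters):
    """Round-trip travel time of light for an object at the given distance."""
    return 2 * distance_meters / SPEED_OF_LIGHT_M_PER_S


for feet in (2, 15):
    nanoseconds = round_trip_for_distance(feet * METERS_PER_FOOT) * 1e9
    print(f"{feet} feet: about {nanoseconds:.1f} ns round trip")
# 2 feet: about 4.1 ns round trip
# 15 feet: about 30.5 ns round trip
```

The time differences involved are measured in billionths of a second, which helps explain why dedicated depth hardware makes such a difference compared with estimating depth from a regular camera.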

When I say that no one’s “exactly” sure how these cameras will pan out in 2020 devices, that’s just because there are questions about how quickly and broadly software will catch up. As Samsung’s teddy bear demo suggests, we’re not far off from using our phones to scan ourselves into realistic 3D avatars that can be easily used for messaging, social networks, and gaming. If depth-sensing cameras become common and useful enough in 2020, we may be on the cusp of something even more monumental than a big step forward for photography and videography. The big question is: Who will be first to introduce the end-to-end solution that makes it as simple and fun to use as the cameras of today?