
Deepfakes, or media that takes a person in an existing image, audio recording, or video and replaces them with someone else’s likeness using AI algorithms, are multiplying quickly. Amsterdam-based cybersecurity startup Deeptrace found 14,698 deepfake videos on the internet during its most recent tally in June and July, up from 7,964 last December — an 84% increase within only seven months. That’s troubling not only because deepfakes might be used to sway public opinion during, say, an election, or to implicate someone in a crime they didn’t commit, but because the technology has already generated pornographic material and swindled firms out of hundreds of millions of dollars.
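The growth figure is easy to check: the percentage increase is the change in the count divided by the starting count. Here is a minimal Python sketch that plugs in Deeptrace's two reported tallies. The variable names are illustrative only.

```python
# Deeptrace's two reported tallies of deepfake videos found online
december_count = 7_964
june_july_count = 14_698

# Percentage increase = (new - old) / old
increase = (june_july_count - december_count) / december_count
print(f"{increase:.1%}")
```

The result is about 84.6%, which matches the roughly 84% jump cited above.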

In an effort to fight deepfakes’ spread, Facebook is spearheading the Deepfake Detection Challenge, announced in September, together with Amazon Web Services (AWS), Microsoft, the Partnership on AI, and academics from Cornell Tech, MIT, the University of Oxford, UC Berkeley, the University of Maryland at College Park, and the State University of New York at Albany. It’s launching globally at the NeurIPS 2019 conference in Vancouver this week, with the goal of catalyzing research to ensure the development of open source detection tools.

Facebook has dedicated more than $10 million to encourage participation in the competition. For its part, AWS is contributing up to $1 million in service credits and offering to host entrants’ models if they choose, and Google’s Kaggle data science and machine learning platform is hosting both the challenge and the leaderboard.

“‘Deepfake’ techniques, which present realistic AI-generated videos of real people doing and saying fictional things, have significant implications for determining the legitimacy of information presented online,” noted Facebook CTO Mike Schroepfer in a blog post. “Yet the industry doesn’t have a great data set or benchmark for detecting them. The [hope] is to produce technology that everyone can use to better detect when AI has been used to alter a video in order to mislead the viewer.”


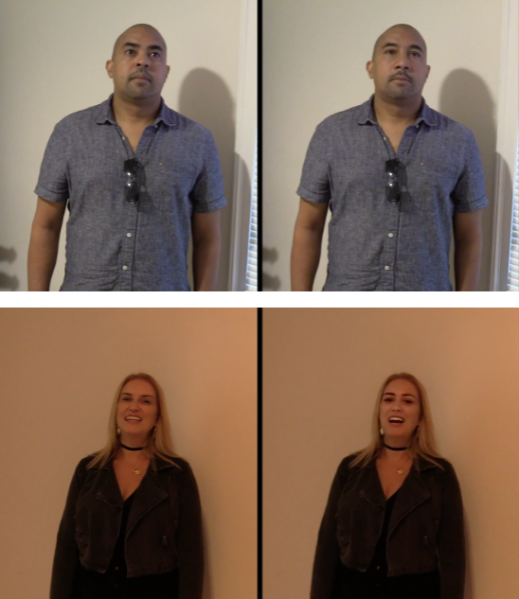

Above: These videos from the Deepfake Detection Challenge data set show an unaltered video (left) and a deepfake (right).

As previously detailed, the Deepfake Detection Challenge includes a data set — as well as grants and awards — to spur the creation of new ways of detecting and preventing AI-manipulated media. While several tools have been released to date, including one developed by researchers at the University of California, Berkeley and the University of Southern California that identifies manipulated videos with greater than 90% accuracy, deepfakes are a moving target. In a recent interview with The Verge, Pinscreen CEO Hao Li noted that synthesis techniques are constantly evolving such that at some point, it might become nearly impossible to distinguish AI fakes from reality.

The organizers of the Deepfake Detection Challenge retained the services of a vendor that hired a “diverse” group of actors (approximately 54% female and 46% male) to create videos depicting realistic scenarios in a variety of settings, poses, and backgrounds, with accompanying labels describing whether they were manipulated with AI. Tampered videos were then created from a subset of the original footage using a range of machine learning techniques. Faces were swapped and voices were altered in the videos, and some samples had augmentations applied to approximate the degradations found in videos shared online.

The data set — which contains 100,000-plus videos in total — was tested through a targeted technical working session in October at the International Conference on Computer Vision, said Facebook AI Research Manager Christian Ferrer. It doesn’t include any user data and features only participants who’ve entered into usage agreements so as to avoid restrictions that could hamper research. Additionally, said Ferrer, access to the data set is gated so that only teams who’ve agreed to a license can access it.

“Ensuring that cutting-edge research can be used to detect deepfakes depends on large-scale, close-to-reality, useful, and freely available data sets. Since that resource didn’t exist, we’ve had to create it from scratch,” he added.

Starting today, competitors who register can download the corpus to train deepfake-detecting AI models. Once they’ve finalized their design, they submit code into a black box validation environment, which hosts a mechanism that scores the model’s effectiveness against test sets. Entrants don’t need to share their models in order to participate, but they must agree to open-source their work to be eligible for the challenge prizes. In any case, they retain rights to their systems trained on the training data set.
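The article doesn’t say exactly how the validation environment scores submissions. To illustrate the idea, here is a minimal sketch that assumes a common metric for this kind of real-versus-fake task: binary log loss over each video’s predicted probability of being fake. The function name, labels, and probabilities below are hypothetical and are not the challenge’s actual scoring code.

```python
import math


def binary_log_loss(labels, probs, eps=1e-15):
    """Average binary cross-entropy (hypothetical scorer).

    labels: 1 if a video is a deepfake, 0 if it is unaltered.
    probs: the model's predicted probability that each video is fake.
    """
    if len(labels) != len(probs):
        raise ValueError("labels and probs must be the same length")
    total = 0.0
    for y, p in zip(labels, probs):
        # Clip so a confident wrong answer gives a large finite penalty, not log(0)
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


# Hypothetical held-out test set: two fakes and two real videos
labels = [1, 0, 1, 0]
probs = [0.9, 0.1, 0.8, 0.3]
print(round(binary_log_loss(labels, probs), 4))
```

Lower scores are better. A metric like this rewards well-calibrated confidence rather than just correct guesses, because a model that is very sure and wrong is penalized heavily.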

The Deepfake Detection Challenge is facilitated and overseen by the Partnership on AI’s Steering Committee on AI and Media Integrity, a coalition of organizations including Facebook, human rights nonprofit Witness, Microsoft, and others in civil society and the technology, media, and academic communities. It’s currently slated to run through the end of March 2020.

“It is inspiring to see the commitment from partners across multiple areas, including industry, academia, civil society, and media, and how they came together over many months to create the challenge,” said Irina Kofman, the Facebook AI director and business lead managing the challenge. “Each brought insights from their respective area and allowed us to consider a broad range of viewpoints … By bringing the community together and fostering collaboration, we hope that we can enable accelerated progress in this space.”

The challenge’s launch comes after the release of a large corpus of visual deepfakes produced in collaboration with Jigsaw, Google’s internal technology incubator, which was incorporated into a benchmark freely available to researchers for synthetic video detection system development. Earlier in the year, Google made public a data set of speech containing phrases spoken by the company’s text-to-speech models, as part of the ASVspoof 2019 competition to develop systems that can distinguish between real and computer-generated speech.

“We know there will not be a simple and conclusive technical solution to these issues. I’m confident, however, that [an] open approach to research will help us build new tools to prevent people from using AI to manipulate videos in order to deceive others,” said Facebook VP of AI Jerome Pesenti.