Sitting in a press Q&A with Jensen Huang, the CEO of Nvidia, is like listening to a comedy routine. I appreciate that because so few tech CEOs are willing to engage in a dialogue and crack jokes with the media.

Huang’s sessions stand out because he’s one of those billionaires who doesn’t muzzle himself in the name of corporate propriety. He also has a rare command of today’s key technologies, including self-driving cars and the finer points of real-time raytracing and its applications in games like Battlefield V. One moment he cracks a snide joke about rival Advanced Micro Devices’ “underwhelming” graphics chip, and the next he delivers a serious homily about chip designer and former Stanford University president John Hennessy.

Huang engaged with the press again, as he did last year, at CES 2019, the big tech trade show in Las Vegas last week. The session came after Nvidia’s press event, where it unveiled the Nvidia GeForce RTX 2060 graphics chip and dozens of design wins for gaming laptops, and after rival AMD’s keynote at CES. He was at his bombastic best.

I was at the press Q&A and asked a couple of questions, but it was interesting to hear Huang field questions from the larger press corps at Morel’s restaurant in the Palazzo hotel in Las Vegas. He touched on the blockchain mining crash that shaved $22 billion from Nvidia’s market value and Nvidia’s new autopilot for Level 2+ autonomous vehicles.

Here’s an edited transcript of the Q&A session.

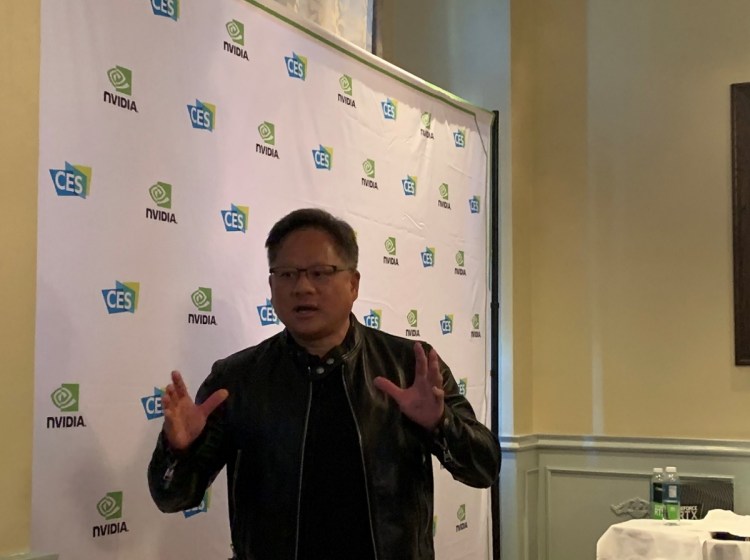

Above: Jensen Huang, CEO of Nvidia, answers questions at CES 2019.

Jensen Huang: We announced four things. The first thing we announced was that [the Nvidia GeForce] RTX 2060 is here. After so much anticipation, the 2060 is here. Raytracing for the masses is now available. That’s the first news that we announced. It’s available from every single add-in card manufacturer in the world. It’s going to be built into PCs from every single PC manufacturer in the world. It’ll be available in every country in the world. This is a gigantic launch. Next-generation gaming is on.

The graphics performance is obviously very good. It’s designed to address about a third of Nvidia’s installed base, a third of the gaming installed base. That part of the installed base has GPUs like 960s, 970s, and 1060s. The 2060 is a huge upgrade for them. It’s priced at $349, incredibly well-priced. We’ll do incredibly well with this. We announced that it’s also going to be bundled with either Battlefield V, with raytracing, or Anthem, which is about to come out.

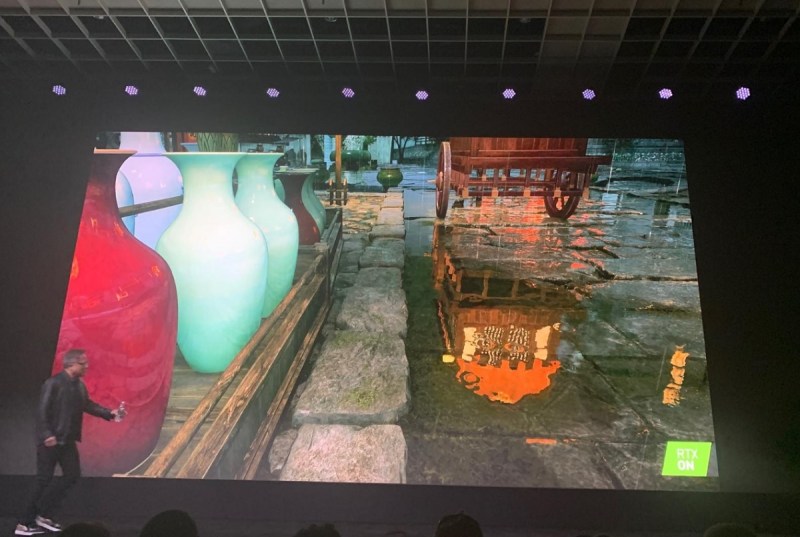

We showed, for the very first time, that it’s possible to play with raytracing turned on and not sacrifice any performance at all. That’s something nobody thought was possible. The reason for that is, when you turn on raytracing, the amount of computation that’s necessary goes way up. Instead of processing triangles, you’re now processing light rays. The amount of computation necessary for raytracing, we’ve known for a long time, is many orders of magnitude more intensive than rasterization.

Above: The Alienware Area-51m with Legend design.

The solution we created was to use AI to learn from a whole volume of wonderfully rendered images. We taught this AI that if you render an image with only some of the pixels completed, it can predict the remaining pixels with incredible fidelity. We showed this technology, called DLSS, Deep Learning Super Sampling, rendering two images, and the image rendered by DLSS is just... you’re sitting there, 1440p, gigantic screen, and the beauty pops out. For the first time we can raytrace, turn on AI, and boost the performance at the same time.

As a result, when we turned on raytracing on Battlefield V, versus no raytracing, the performance was the same. You get beautiful images and you can still play at very high speeds. That was the magic of RTX, a brand new way of doing computer graphics. This is the beginning of the next generation for us.

The second thing we announced was a record number of notebooks built with RTX GPUs. Forty brand-new notebooks are available starting January 29. This cycle, we have designed into more laptops than at any time in the history of our company. Not only that, but two years ago we introduced a technology called Max-Q. Max-Q is the point where you get maximum efficiency and maximum performance at the same time. It’s that knee of the curve.

It’s not exactly the knee in the curve in the sense that there’s some plot and we’re running at the knee of the curve. It simply means we’re optimizing for maximum performance and maximum efficiency at the same time. It’s a GPU architecture solution, chip design, system design, system software, and a connection to the cloud where every single game is perfectly optimized for every laptop to operate at the Max-Q point. The cloud service is called GeForce Experience. It has Max-Q optimization.

Working with all the notebook vendors, we’ve now announced 17 Max-Q notebooks. Last year we had seven. Just in the first month of this year we have 17. The number of Max-Q notebooks coming out is incredible. What you get is essentially a very powerful notebook, but it’s also thin and light, 18mm thick. It’s a 15-inch bezel-less notebook with a 2080 inside. It’s kind of crazy. It’s twice the performance of a PlayStation 4 at one-third the volume, and you get a keyboard and a battery to go with it.

Max-Q has made it possible for gamers to basically have a powerful gaming platform they can take with them wherever they go. The notebook gaming market is one of the fastest growing game platforms in the world. It’s one of our fastest growing businesses. The vast majority of the world’s gamers don’t have gaming notebooks today. That’s going to change with RTX and Max-Q. It’s going to change starting January 29.

The third announcement we made yesterday was also on the gaming side. For example, we think that building a gaming laptop is different from building a laptop for gamers. The reason for that is, a gamer is a human too. They want to do things like 3D design and video editing and photo editing. They want to do all the things we love to do, and they just happen to be a gamer. It turns out that a lot of gamers love design, love to create in digital. We made it possible for the RTX laptops to not only be great gaming machines, but to render like a workstation, to do pro video like a workstation. For the first time you can edit raw 6K video, cinematic video, in real time, on a notebook. Impossible at any time before now.

We talked about the work we did with OBS to integrate the professional-quality video encoder inside the RTX and hook it up to the OBS gaming broadcast system. People love to broadcast their games to Twitch and YouTube. Eight million broadcasters around the world. We’ve made it possible for them to broadcast without having two large PCs. They can now broadcast on just one RTX card, or even on the notebook. They can use their laptop as a broadcasting workstation.

Above: Justice, a game in the works in China, shows off RTX.

That’s all the gaming stuff we announced on the first day. The second day we announced two things. We announced a commercially available Drive Autopilot Level 2+ system, with a fully integrated chip, computer, software stack, and partners in ZF and Continental who are going to take it to market. It will be available in cars starting in 2020. It’s powered by Xavier, the world’s first single-chip autonomous driving computer. It will be able to achieve Level 2+. Level 2+ is all the functionality you would expect an autonomous car to have, except a person has to be in the loop. The driver is still responsible.

However, this car should be able to do things like — you’ll say, “Take me to work.” It pulls out of the garage, goes down the driveway, gets on the highway, stops at signs, stops at lights, does automatic lane changes, and brings you all the way to work. This should be able to do that. We also demonstrated — if you come to our booth you can see the demonstration. We drove 50 miles around Silicon Valley, the four highways that define Silicon Valley, a whole lot of lane changes, traffic everywhere, crazy on-ramps and off-ramps. Completely hands-off.

We’re taking that to market now. The Xavier chip is in full production. It’s the first auto-grade self-driving car system. The first customer for our autopilot system is Volvo, and there are others to be announced.

We also announced yesterday that Daimler has asked us to partner with them to build their next-generation car computing system. The car computing system will consist of two things: one computing system for autonomous driving and one computing system for the entire AI user experience and user interface system. This computer system, this computer architecture, will be unified in such a way that software can be easily updated. It means that future cars will be AI supercomputers. They will be software-defined. It will remove an enormous number of ECUs that are currently inside cars and unify them into just two computers. Software will define next-generation cars.

Future car companies are going to have to be software companies. They have to have access to great batteries. They have to be great at design. They have to understand the market. But from an engineering perspective, future car companies have to be software companies. Daimler knows this. They’re partnering with us to create their next-generation car computing architecture. We’ll work with them to ship it as soon as we can.

Question: To clarify on the Mercedes-Benz move, should we think of that as Drive plus another system, or is it going to be the next-generation of Drive plus a next-generation–

Huang: We’re already partnering with Daimler today on two things. We worked with them on the MBUX AI user experience, which is shipping today. We also partnered with them on robot taxis. We’re already working with them on AV and UX, the cockpit AI driving unit. What we announced is their next-generation architecture, which will likely have two computers, two processors in one unified computing architecture. It will be beyond Xavier, beyond what we’re currently doing. The next generation of that.

Question: What’s your reaction to AMD’s announcement on Radeon VII?

Huang: Underwhelming, huh? Wouldn’t you guys say?

Question: Why do you think so?

Huang: Because the performance is lousy. It’s nothing new. No raytracing, no AI. It’s a 7nm chip with HBM memory. That barely keeps up with a 2080. If we turn on DLSS, we’ll crush it. If we turn on raytracing we’ll crush it. It’s not even available yet. Our 2080 is all over the world already. RTX is offered by every AIC in the world. I think theirs is going to be available on their website? It’s weird. It’s a weird launch. Maybe they thought of it this morning. Okay, that was too — you know what? Can I take all that back?

Look. I’m not going to make any more jokes. You guys know how funny I can be. I make myself laugh. But it’s underwhelming.