
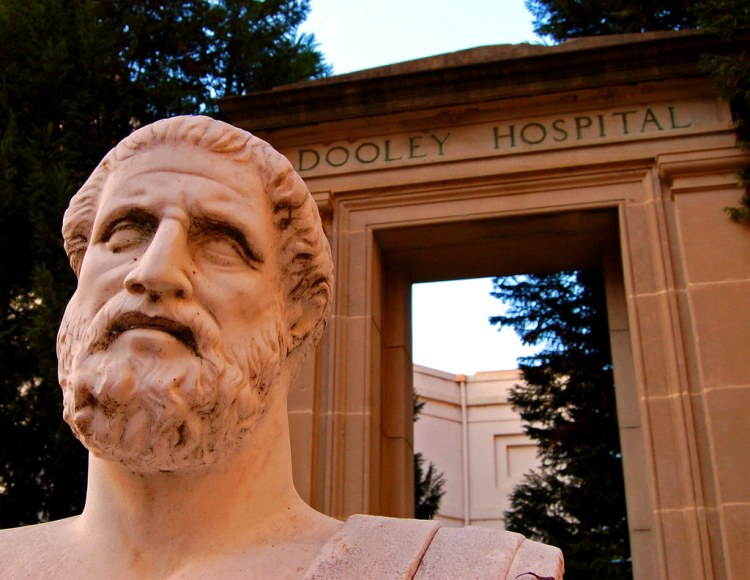

Ageōmétrētos mēdeìs eisítō. That’s Greek for “Let no one untrained in geometry enter” — the motto famously inscribed above the entrance to Plato’s Academy. It’s straightforward enough (if you’re fluent), but not every case is so clear-cut. Interpreting ancient languages involves guesswork about semantics, as well as polysemy (the coexistence of many possible meanings for a word or phrase), and context.

Researchers at the Alan Turing Institute, the University of Warwick, the University of Helsinki, and Amazon propose a novel solution in a newly published paper. The idea involves a dynamic Bayesian mixture model, a statistical model that treats each word's senses as probability distributions that can shift over time. Dubbed Genre-Aware Semantic Change for Ancient Greek (GASC), it leverages categorical metadata about target texts’ genres to uncover the evolution of meanings in Ancient Greek data sets.

AI systems that model semantic change aren’t new, per se — researchers have employed a range of topic-based and graph-based natural language processing models for interpretation tasks. But the authors note that few focus on ancient languages and most don’t account for language variation features. By contrast, the team’s work goes beyond literary data sets and historical language data and directly addresses questions about genre — i.e., which genre is most likely associated with a given sense, what is an unusual sense for a genre, and which genres have the most similar senses.

“Over time, new words enter the lexicon, others become obsolete, and existing words acquire new senses,” the paper’s authors write. “For example, in Old English ‘thing’ meant ‘a public assembly’, and currently it more generally means ‘entity’ … The close relationship between innovation and variation is well known in historical linguistics, and critical to ancient languages, for which balanced corpora are not available due to the limited amount of data at our disposal; therefore models need to explicitly account for confounding variables, like genre.”


The researchers first compiled a preprocessed corpus, the Diorisis Annotated Ancient Greek Corpus, containing over 10 million words from 820 poems, dramas, oratories, philosophies, essays, narratives, atlases, religious scripts, and letters dated between the 8th century BC and the 5th century AD. Each text was lemmatized (its inflected word forms were grouped under their dictionary headwords) and part-of-speech tagged, and the model’s task was to detect the sense associated with target words in given contexts and describe their evolution over time.

“In technical texts, we expect polysemous words to have a technical sense,” the team explains. “On the other hand, in works more closely representing general language (comedy, oratory, historiography) we expect the words to appear in their more concrete and less metaphorical senses; in a number of genres, such as philosophy and tragedy, we cannot assume that this distribution holds.”

To evaluate the AI system’s performance, the researchers created a secondary data set and framework. They chose 50 target words in the corpus that could be identified as polysemous, including 17 words from Greek technical vocabulary and 33 words from the highest-frequency lemmas (forms chosen to represent the lexemes, or units of meaning) in the Diorisis corpus. For each word, they randomly divided the source corpora into training (80 percent) and test sets (20 percent) and devised a way to automatically match lists of words associated with each sense by the model to sense labels assigned by a group of expert human annotators.
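The evaluation setup described above can be illustrated with a minimal Python sketch. It is not the authors' code: the function names, the choice of a single word occurrence as the unit being split, the Jaccard overlap score used for matching, and the toy English word lists are all assumptions made for illustration, since the article does not say how the matching was actually done.

```python
import random


def split_occurrences(occurrences, test_fraction=0.2, seed=0):
    """Randomly split one target word's occurrences into train and test lists.

    Assumption: the unit being split is a single occurrence (context) of the word.
    """
    rng = random.Random(seed)
    shuffled = list(occurrences)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def jaccard(a, b):
    """Overlap between two word lists: size of intersection over size of union."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_senses(model_senses, expert_senses):
    """Map each model sense id to the expert label whose word list overlaps most.

    Hypothetical matching rule; the paper's actual procedure may differ.
    """
    return {
        sense_id: max(
            expert_senses,
            key=lambda label: jaccard(words, expert_senses[label]),
        )
        for sense_id, words in model_senses.items()
    }


# Toy data (invented): words the model associates with each induced sense,
# and words the annotators associate with each labeled sense.
model_senses = {
    0: ["animal", "cat", "trap"],
    1: ["body", "arm", "sinew"],
}
expert_senses = {
    "mouse": ["animal", "trap", "hole"],
    "muscle": ["body", "sinew", "strength"],
}

train, test = split_occurrences(range(10))
matches = match_senses(model_senses, expert_senses)
```

With ten occurrences, the split yields eight for training and two for testing, and each induced sense is paired with the expert label that shares the most words with it. A fixed seed keeps the split reproducible across runs.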

In experiments, the researchers report that GASC provided “interpretable representations” of the evolution of word sense and achieved better predictive performance than the state of the art. “To our knowledge, no previous work has systematically compared the estimates from a statistical model to manual semantic annotations of ancient texts,” the coauthors write. “This work can be seen as a step toward the development of richer evaluation schemes and models that can embed expert judgments.”