As the iPhone and iPad have amply demonstrated, much of Apple’s current hardware depends on accurate detection of direct touch inputs — a finger resting against a screen, or in the Mac’s case, on a trackpad. But as people come to rely on augmented reality for work and entertainment, they’ll need to interact with digital objects that aren’t equipped with physical touch sensors. Today, Apple has patented a key technique to detect touch using depth-mapping cameras and machine learning.

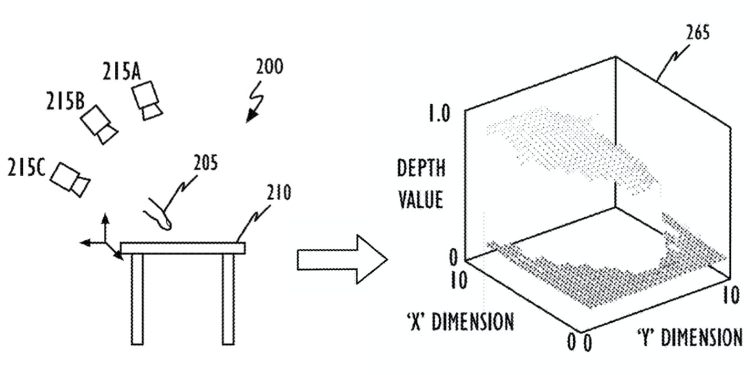

By patent standards, Apple’s depth-based touch detection system is fairly straightforward: External cameras work together in a live environment to create a three-dimensional depth map, measuring the distance of an object (say, a finger) from a touchable surface and then determining when the object touches that surface. Critically, the distance measurement is designed to remain usable even when the cameras change position, and it relies in part on a trained machine learning model to discern touch inputs.
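To make the measure-then-decide idea concrete, here is a minimal Python sketch. It is an illustration, not Apple's patented method: it assumes a per-pixel depth map in millimetres, a reference depth map of the bare surface, and a list of fingertip pixels supplied by some upstream finger detector. The names `fingertip_gap_mm` and `is_touching` and the 5 mm threshold are all hypothetical. The patent describes a machine learning model making the final call; a fixed threshold stands in for it here.

```python
from statistics import median
from typing import List, Tuple

DepthMap = List[List[float]]  # assumed layout: depth in millimetres, indexed [row][col]
Pixel = Tuple[int, int]       # (row, col)


def fingertip_gap_mm(scene: DepthMap, surface: DepthMap, fingertip: List[Pixel]) -> float:
    """Median distance (mm) between the fingertip and the surface behind it.

    `surface` is the depth of the empty surface; `scene` is the live depth map.
    A finger in front of the surface is closer to the camera, so the gap at
    each fingertip pixel is surface depth minus scene depth.
    """
    return median(surface[r][c] - scene[r][c] for r, c in fingertip)


def is_touching(scene: DepthMap, surface: DepthMap, fingertip: List[Pixel],
                threshold_mm: float = 5.0) -> bool:
    """Hypothetical stand-in for the learned classifier: a fixed gap threshold."""
    return fingertip_gap_mm(scene, surface, fingertip) <= threshold_mm


# Toy 4x4 scene: a flat surface 500 mm from the camera.
surface = [[500.0] * 4 for _ in range(4)]
tip = [(1, 1), (1, 2), (2, 1)]  # assumed output of a finger detector

# Finger hovering 30 mm above the surface.
hover = [row[:] for row in surface]
for r, c in tip:
    hover[r][c] = 470.0

# Finger pressed down, with a few millimetres of finger thickness and noise.
touch = [row[:] for row in surface]
for (r, c), depth in zip(tip, [497.0, 498.0, 496.0]):
    touch[r][c] = depth

print(is_touching(hover, surface, tip))  # False
print(is_touching(touch, surface, tip))  # True
```

The median over several fingertip pixels keeps a single noisy depth reading from flipping the result. A learned model, as the patent describes, would presumably replace the hard threshold with a decision informed by training data.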

Illustrations of the technique show three external cameras working together to determine the relative position of a finger, a concept that might be somewhat familiar to users of Apple’s triple-camera iPhone 11 Pro models. Similar multi-camera arrays are expected to appear in future Apple devices, including new iPad Pros and dedicated AR glasses, enabling each to detect finger input simply by depth-mapping a scene and applying a trained ML model to judge the intent behind changes in the finger’s position.

Armed with this technology, future AR glasses could eliminate the need for physical keyboards and trackpads, replacing them with digital versions only the user can see and properly interact with. They could also enable user interfaces to be anchored to other surfaces, such as walls, conceivably creating a secure elevator that could only be operated or brought to specific floors by AR buttons.

Apple was granted patent US10,572,072 today, based on technology invented by Sunnyvale-based Lejing Wang and Daniel Kurz. The patent was first filed at the end of September 2017 and — atypically for Apple — includes photos of actual testing of the technique. This indicates that the company’s AR and depth camera research isn’t merely theoretical. Apple CEO Tim Cook has suggested that AR will be a major business for the company going forward, and reports have offered various timetables for the release of dedicated Apple AR glasses.