According to the World Health Organization, 800,000 people lose their lives to suicide each year. This number has remained fairly consistent year over year, but innovations in artificial intelligence and machine learning could help change this.

AI researchers have had a big year in 2017 with the creation of multiple technologies intended to help prevent suicide. Facebook, the AI Buddy Project, Bark.us, and most recently a joint effort by Carnegie Mellon University and the University of Pittsburgh have all made technologies with the potential to decrease the number of suicide-related deaths. Although an AI will never replace the emotional connection and support humans provide in a time of crisis, the innovations we’ve seen this past year could help health care providers, friends, and family members detect suicidal tendencies in their patients and loved ones before it’s too late.

AI in mental health care

Last week, researchers at Carnegie Mellon University and the University of Pittsburgh published a paper in Nature Human Behaviour describing their most recent innovation in the artificial intelligence space. The team created an AI that could successfully identify participants experiencing suicidal thoughts.

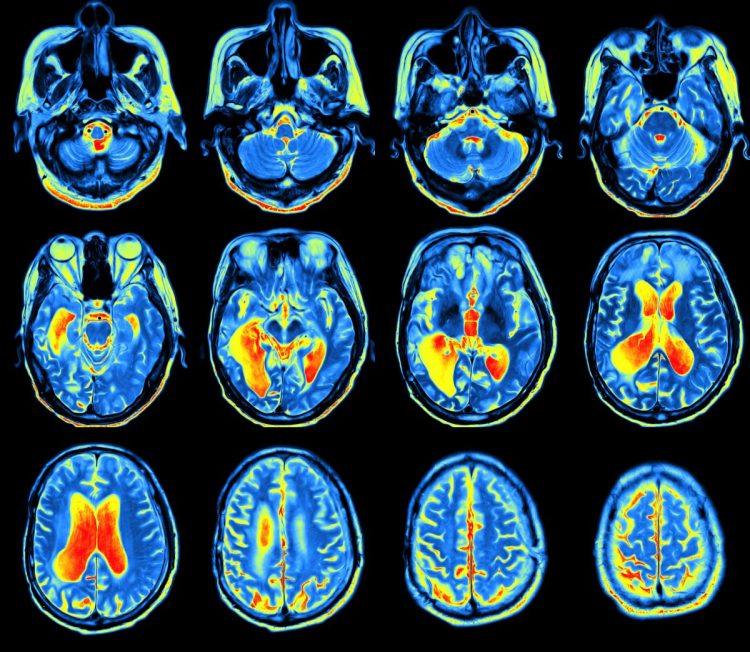

Researchers examined 34 young adults who were evenly split between participants with known suicidal tendencies and a control group. Each subject underwent a functional magnetic resonance imaging (fMRI) scan while being presented with three lists of 10 words. The word groups included suicide-related words like “death” and “distressed,” potentially positive concepts like “carefree” and “kindness,” and potentially negative concepts like “boredom” and “evil.”

The team used brain scans from each test to identify five brain locations and six of the words that best distinguished suicidal participants from the control group. The brain activity of suicidal participants differed significantly from that of participants in the control group when words like “death” or “trouble” were presented.

Researchers used these locations and words to train a machine learning classifier to identify suicidal subjects. The results were nearly spot on. The classifier was able to identify 15 of the 17 suicidal participants and 16 of the 17 control subjects. Researchers achieved an even higher accuracy rate when they split the suicidal group into nine participants who had attempted suicide and eight who had not. When the classifier was trained on results from these subjects, it was able to correctly identify 16 of the 17 participants.
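To put those counts in perspective, the short Python sketch below turns them into percentages. It is only an illustration of the arithmetic, not the researchers' code: the function name `classification_rates` and the use of the terms "sensitivity" and "specificity" are assumptions introduced here, and the only inputs are the counts reported above.

```python
def classification_rates(true_pos, total_pos, true_neg, total_neg):
    """Return (sensitivity, specificity, accuracy) from raw counts.

    Hypothetical helper for illustration; the counts passed in below
    are the ones reported in the article, nothing else is assumed.
    """
    sensitivity = true_pos / total_pos   # share of suicidal participants identified
    specificity = true_neg / total_neg   # share of control subjects identified
    accuracy = (true_pos + true_neg) / (total_pos + total_neg)
    return sensitivity, specificity, accuracy


# Suicidal participants vs. control group: 15 of 17 and 16 of 17.
sens, spec, acc = classification_rates(15, 17, 16, 17)
print(f"suicidal participants identified: {sens:.1%}")  # 88.2%
print(f"control subjects identified:      {spec:.1%}")  # 94.1%
print(f"overall accuracy:                 {acc:.1%}")   # 91.2%

# Attempters vs. non-attempters: 16 of 17 classified correctly.
attempt_acc = 16 / 17
print(f"attempter classification:         {attempt_acc:.1%}")  # 94.1%
```

In other words, the first classifier was right about 31 of 34 people, or roughly 91 percent, and the second about 94 percent. With only 34 subjects, a single misclassification shifts these figures by several percentage points.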

“Our latest work is unique insofar as it identifies concept alterations that are associated with suicidal ideation and behavior, using machine-learning algorithms to assess the neural representation of specific concepts related to suicide. This gives us a window into the brain and mind, shedding light on how suicidal individuals think about suicide and emotion related concepts,” said Marcel Just, the D.O. Hebb University Professor of Psychology in CMU’s Dietrich College of Humanities and Social Sciences.

The findings from this study are exciting for professionals in the psychology space as they offer hope for early detection of suicidal thoughts in at-risk patients. The technology must undergo further testing before it can be applied in standard mental health care, however. Lead researcher David Brent, from the University of Pittsburgh, says, “The most immediate need is to apply these findings to a much larger sample and then use it to predict future suicide attempts.”

The tech industry’s attempts to prevent suicide

Earlier this year, VentureBeat covered several consumer-facing AI-powered suicide prevention technologies created by tech companies. The first was tested by researchers at Facebook in March. Last spring, the social media giant announced a feature that would allow users to report friends’ live streams that included concerning messages. Facebook then used pattern-recognition technology trained on these reported posts to identify and flag when a post is “very likely to contain thoughts of suicide.” The Messenger app also received an update that makes it easier for individuals to contact professionals for help if they are having suicidal thoughts.

The AI Buddy Project is another suicide prevention technology that launched last spring. The product provides children of active duty military members (who are at higher risk for suicide) with a cartoon avatar they can speak with on their favorite digital platforms. The digital companion monitors the behavior of the child and sends progress reports to the primary guardian based on the words used in their conversations. The machine is trained to pick up on language that could be indicative of suicidal feelings and thoughts. This allows caretakers to more effectively monitor the mental health and wellbeing of children of currently deployed military members.

Bark.us is a tech company that uses machine learning to analyze user content across email, SMS, and social media platforms. The technology scans for language that might indicate a user is contemplating suicide and sends alerts to the individual’s guardian if it senses trouble based on their online correspondence. The company launched in 2015. In July, it announced that its program had saved 25 lives after analyzing more than 500 million messages from teens. Though the technology is not new this year, July’s announcement marked a major moment for the progression of suicide prevention technology.

A hopeful view of the future

The integration of AI-enabled solutions in suicide prevention is promising. As these solutions progress, it will be important to remember that mental health professionals and caregivers will not become obsolete. In fact, the role of emotional support systems and psychologists will only become more important. As AI technologies begin to provide clearer and earlier signs of suicidal thoughts, it will be imperative for friends, family members, and physicians to act on warnings from technologies like those mentioned in this article.

If this story has raised concerns for yourself or someone you know, please contact a suicide crisis hotline for help.